EAA-Net: Rethinking the Autoencoder Architecture with Intra-class Features for Medical Image Segmentation


Aug 19, 2022

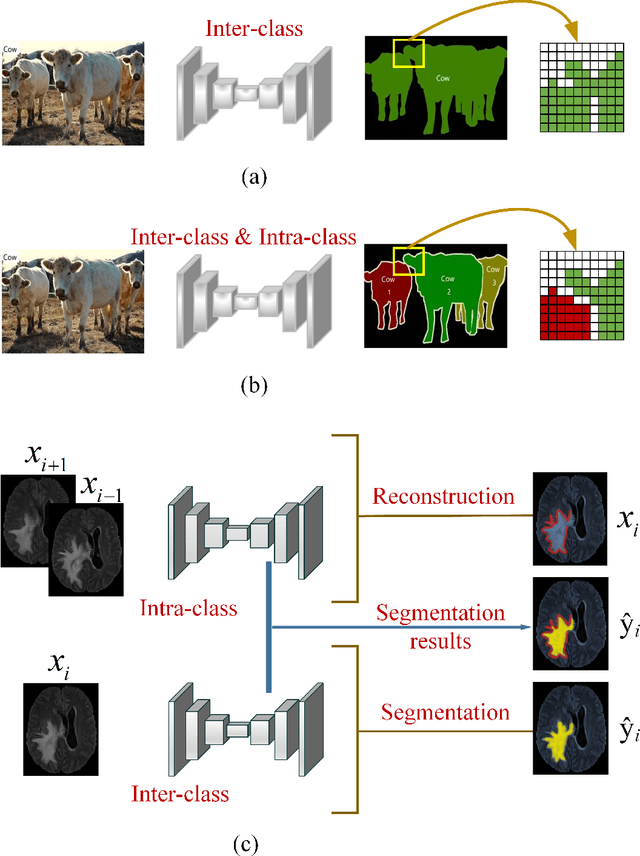

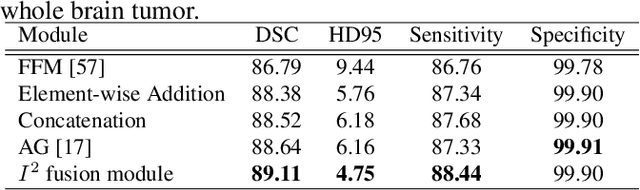

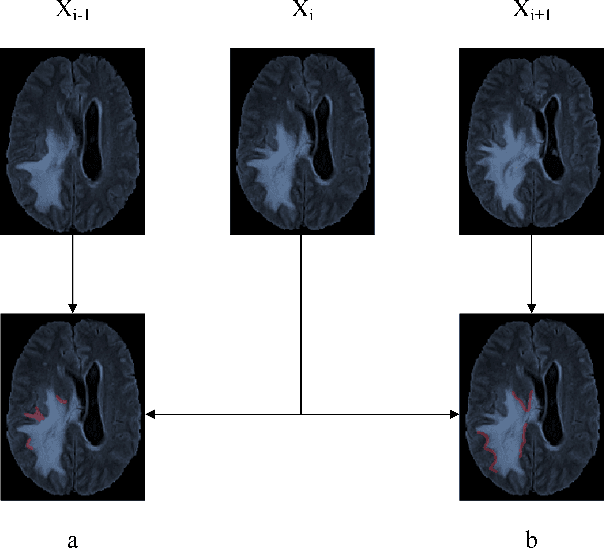

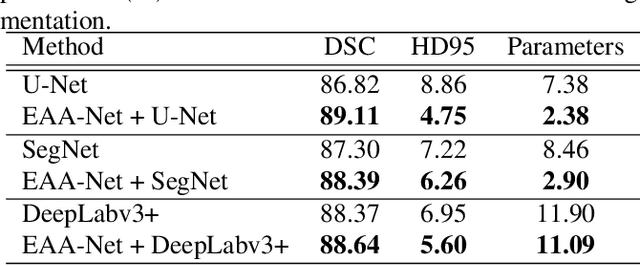

Automatic image segmentation is critical to visual analysis. The autoencoder architecture performs well in a variety of image segmentation tasks. However, autoencoders based on convolutional neural networks (CNNs) seem to encounter a bottleneck in improving the accuracy of semantic segmentation. Increasing the inter-class distance between foreground and background is an inherent characteristic of a segmentation network. As a result, segmentation networks pay too much attention to the main visual difference between foreground and background and ignore detailed edge information, which reduces the accuracy of edge segmentation. In this paper, we propose a lightweight end-to-end segmentation framework based on multi-task learning, termed Edge Attention autoencoder Network (EAA-Net), to improve edge segmentation ability. Our approach not only uses the segmentation network to obtain inter-class features, but also applies the reconstruction network to extract intra-class features among the foregrounds. We further design an intra-class and inter-class feature fusion module, the I2 fusion module, which merges intra-class and inter-class features and uses a soft attention mechanism to remove invalid background information. Experimental results show that our method performs well in medical image segmentation tasks. EAA-Net is easy to implement and has a small computational cost.
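The abstract does not specify how the I2 fusion module combines its inputs, so the following is only a minimal sketch of the general idea under stated assumptions. It assumes the two branches produce same-shaped 2D feature maps, that the merge is an element-wise sum, and that the soft attention weight is a sigmoid of the segmentation (inter-class) response. The function names `sigmoid` and `i2_fusion_sketch` are hypothetical and do not come from the paper or its code.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function, returns a value in [0, 1]."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def i2_fusion_sketch(inter, intra):
    """Fuse two same-shaped 2D feature maps given as lists of rows.

    inter: responses from the segmentation branch (inter-class features).
    intra: responses from the reconstruction branch (intra-class features).

    Assumption (not from the paper): features are merged by element-wise
    sum, then scaled by a soft attention gate derived from `inter`, so
    positions the segmentation branch scores as background are suppressed.
    """
    same_shape = len(inter) == len(intra) and all(
        len(a) == len(b) for a, b in zip(inter, intra)
    )
    if not same_shape:
        raise ValueError("inter and intra must have the same shape")

    fused = []
    for inter_row, intra_row in zip(inter, intra):
        fused_row = []
        for s, r in zip(inter_row, intra_row):
            gate = sigmoid(s)  # soft attention weight for this position
            fused_row.append(gate * (s + r))  # merge, then suppress background
        fused.append(fused_row)
    return fused
```

With a strongly negative segmentation response the gate is close to zero and the fused value is suppressed, while a strongly positive response passes the merged features through almost unchanged. A real implementation would likely operate on multi-channel tensors with learned convolutions rather than on scalar maps.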
