Dynamic Traffic Scene Classification with Space-Time Coherence

Paper and Code

May 29, 2019

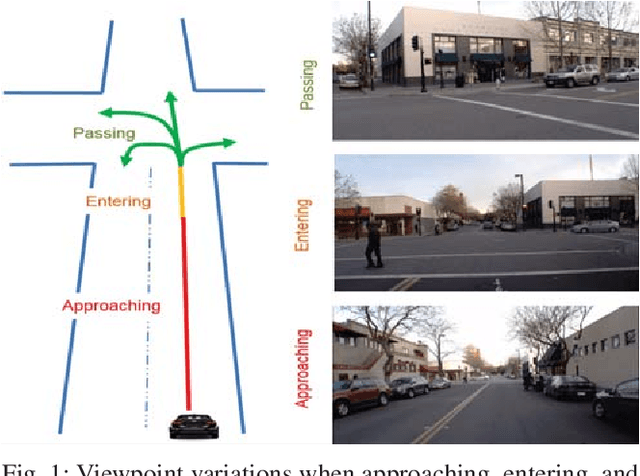

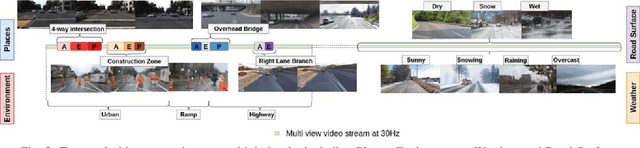

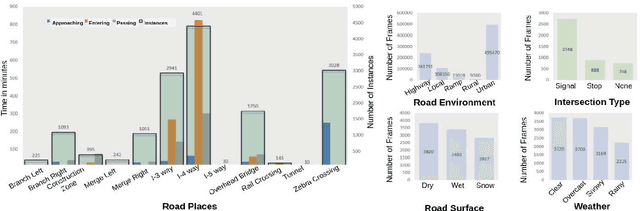

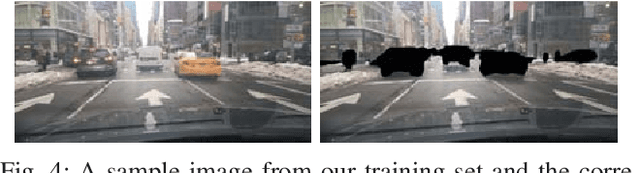

This paper examines the problem of dynamic traffic scene classification under space-time variations in viewpoint that arise from video captured on board a moving vehicle. Solutions to this problem are important for realizing effective driving assistance technologies required to interpret or predict road user behavior. Dynamic traffic scene classification has not yet been adequately addressed because of a lack of benchmark datasets that consider the spatiotemporal evolution of traffic scenes resulting from a vehicle's ego-motion. This paper makes three main contributions. First, an annotated dataset is released to enable dynamic scene classification; it includes 80 hours of diverse, high-quality driving video clips collected in the San Francisco Bay Area. The dataset includes temporal annotations for road places, road types, weather, and road surface conditions. Second, we introduce novel and baseline algorithms that use the semantic context and temporal nature of the dataset for dynamic classification of road scenes. Finally, we showcase algorithms and experimental results that highlight how features extracted from scene classification serve as strong priors and help with tactical driver behavior understanding. The results show significant improvement over previously reported driving behavior detection baselines in the literature.
