Dynamic Prompt Middleware: Contextual Prompt Refinement Controls for Comprehension Tasks

Paper and Code

Dec 03, 2024

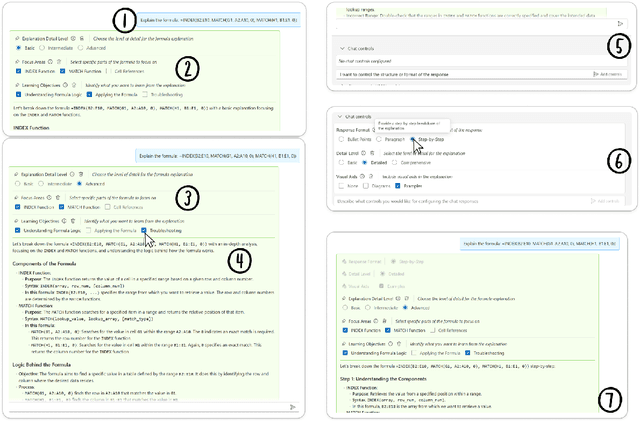

Effective prompting of generative AI is challenging for many users, particularly in expressing context for comprehension tasks such as explaining spreadsheet formulas, Python code, and text passages. Prompt middleware aims to address this barrier by assisting in prompt construction, but barriers remain for users in expressing adequate control so that they can receive AI-responses that match their preferences. We conduct a formative survey (n=38) investigating user needs for control over AI-generated explanations in comprehension tasks, which uncovers a trade-off between standardized but predictable support for prompting, and adaptive but unpredictable support tailored to the user and task. To explore this trade-off, we implement two prompt middleware approaches: Dynamic Prompt Refinement Control (Dynamic PRC) and Static Prompt Refinement Control (Static PRC). The Dynamic PRC approach generates context-specific UI elements that provide prompt refinements based on the user's prompt and user needs from the AI, while the Static PRC approach offers a preset list of generally applicable refinements. We evaluate these two approaches with a controlled user study (n=16) to assess the impact of these approaches on user control of AI responses for crafting better explanations. Results show a preference for the Dynamic PRC approach as it afforded more control, lowered barriers to providing context, and encouraged exploration and reflection of the tasks, but that reasoning about the effects of different generated controls on the final output remains challenging. Drawing on participant feedback, we discuss design implications for future Dynamic PRC systems that enhance user control of AI responses. Our findings suggest that dynamic prompt middleware can improve the user experience of generative AI workflows by affording greater control and guide users to a better AI response.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge