Dynamic Heterogeneous Federated Learning with Multi-Level Prototypes


Dec 15, 2023

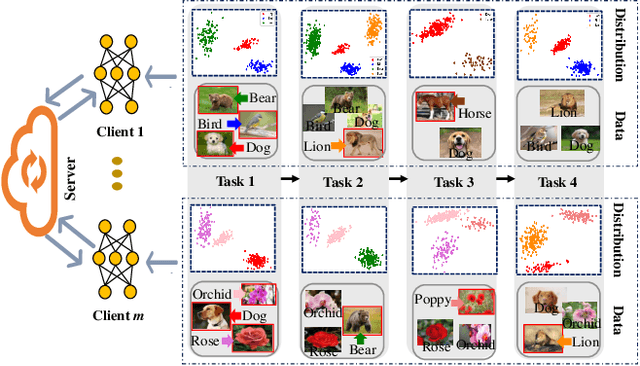

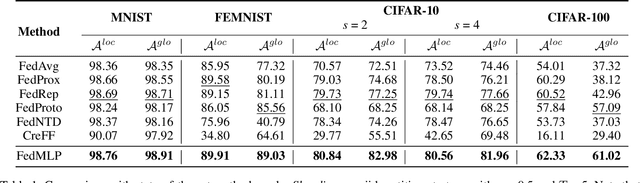

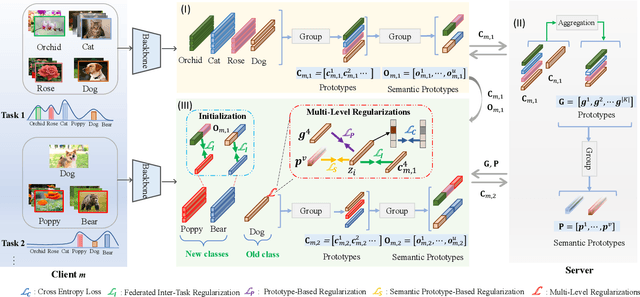

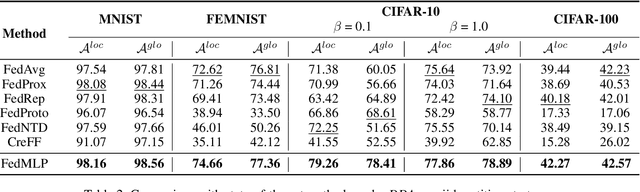

Federated learning shows promise as a privacy-preserving collaborative learning technique. Existing work on heterogeneous federated learning mainly focuses on label distribution skew across clients. However, most approaches suffer from catastrophic forgetting and concept drift, especially when the global distribution over all classes is extremely imbalanced and each client's data distribution evolves dynamically over time. In this paper, we study a new task, Dynamic Heterogeneous Federated Learning (DHFL), which addresses the practical scenario where data distributions are heterogeneous across clients and tasks change dynamically within each client. Accordingly, we propose a novel federated learning framework named Federated Multi-Level Prototypes (FedMLP) and design federated multi-level regularizations. To mitigate concept drift, we construct prototypes and semantic prototypes to provide rich generalization knowledge and ensure the continuity of prototype spaces. To maintain model stability and consistent convergence, three regularizations are introduced as training losses: prototype-based regularization, semantic prototype-based regularization, and federated inter-task regularization. Extensive experiments show that the proposed method achieves state-of-the-art performance in various settings.
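To make the idea of prototypes concrete, the following is a minimal sketch of how class prototypes and a prototype-based regularizer are commonly built in prototype-based federated learning. It is not FedMLP's actual formulation, which the abstract does not specify. It assumes that a prototype is the mean feature vector of a class, that the server averages client prototypes weighted by sample count, and that the regularizer is a mean squared distance to the global prototype. The function names `class_prototypes`, `aggregate_prototypes`, and `prototype_regularizer` are hypothetical.

```python
from collections import defaultdict


def class_prototypes(features, labels):
    """Assumed definition: a class prototype is the mean feature vector of that class."""
    sums = {}
    counts = defaultdict(int)
    for vec, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(vec)
        sums[y] = [s + v for s, v in zip(sums[y], vec)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def aggregate_prototypes(client_protos, client_counts):
    """Assumed server step: count-weighted average of client prototypes per class."""
    totals = {}
    weights = defaultdict(int)
    for protos, counts in zip(client_protos, client_counts):
        for y, proto in protos.items():
            n = counts[y]
            if y not in totals:
                totals[y] = [0.0] * len(proto)
            totals[y] = [t + n * v for t, v in zip(totals[y], proto)]
            weights[y] += n
    return {y: [t / weights[y] for t in totals[y]] for y in totals}


def prototype_regularizer(features, labels, global_protos):
    """Assumed loss term: mean squared distance of each feature to its class's global prototype."""
    total, used = 0.0, 0
    for vec, y in zip(features, labels):
        if y in global_protos:  # skip classes the server has no prototype for
            total += sum((v - g) ** 2 for v, g in zip(vec, global_protos[y]))
            used += 1
    return total / used if used else 0.0
```

In a training loop, a term like `prototype_regularizer` would typically be added to the local classification loss with a weighting coefficient, so that local features stay close to the shared prototype space even when a client's classes change over time.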
