Dynamic Attention-based Communication-Efficient Federated Learning


Aug 12, 2021

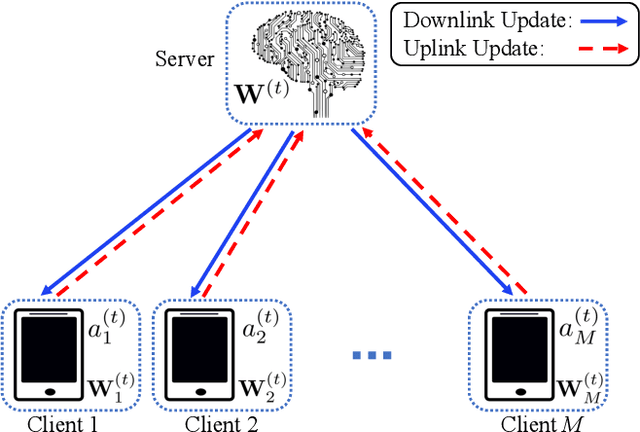

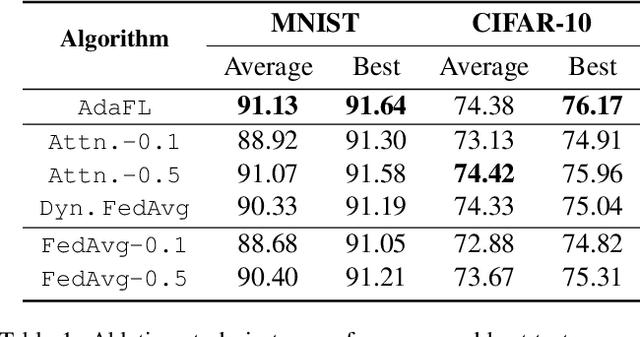

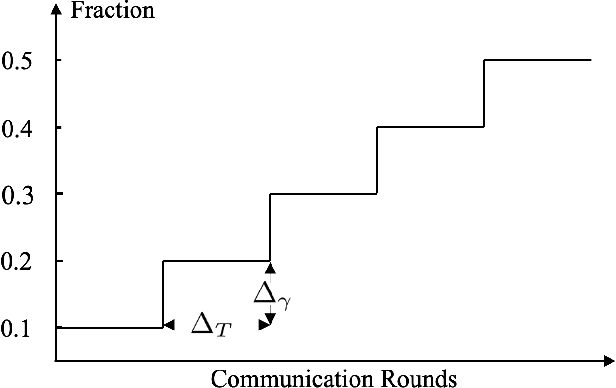

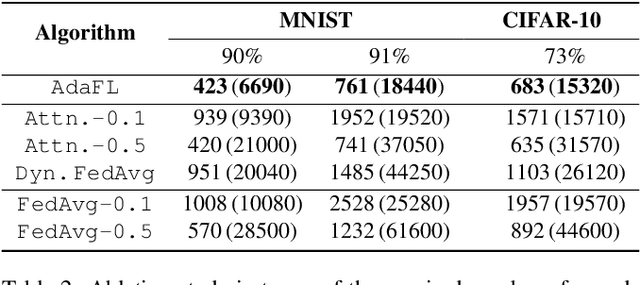

Federated learning (FL) trains a global machine learning model without requiring access to the data stored locally at the clients, thereby maintaining data privacy. However, FL performance degrades when the client data distributions are non-IID, and training for longer to offset this degradation may not be feasible under communication constraints. To address this challenge, we propose a new adaptive training algorithm, $\texttt{AdaFL}$, which comprises two components: (i) an attention-based client selection mechanism that yields a fairer training scheme among the clients; and (ii) a dynamic fraction method that balances the trade-off between performance stability and communication efficiency. Experimental results show that $\texttt{AdaFL}$ outperforms the standard $\texttt{FedAvg}$ algorithm and can be incorporated into various state-of-the-art FL algorithms to further improve them in three respects: model accuracy, performance stability, and communication efficiency.
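The abstract does not spell out how the attention scores are computed or how the participation fraction changes over time, so the following is only an illustrative sketch of the two components, not the authors' implementation. It assumes, hypothetically, that each client carries an attention weight used as a sampling probability, that the weight is refreshed from some per-client distance to the global model, and that the fraction grows linearly across rounds. All function names, the smoothing factor `alpha`, and the `start`/`end` fractions are placeholders introduced for illustration.

```python
import random


def dynamic_fraction(round_idx, num_rounds, start=0.1, end=0.5):
    """Assumed schedule: grow the participation fraction linearly per round."""
    t = round_idx / max(1, num_rounds - 1)
    return start + t * (end - start)


def select_clients(attention, fraction, rng):
    """Sample clients without replacement, weighted by attention score."""
    k = max(1, round(fraction * len(attention)))
    candidates = list(range(len(attention)))
    weights = list(attention)
    chosen = []
    for _ in range(k):
        # Draw one position according to the remaining weights, then remove it.
        idx = rng.choices(range(len(candidates)), weights=weights, k=1)[0]
        chosen.append(candidates.pop(idx))
        weights.pop(idx)
    return chosen


def update_attention(attention, selected, distances, alpha=0.9):
    """Assumed rule: blend old attention with normalized model distances.

    `distances[i]` is a hypothetical non-negative distance between the
    local model of `selected[i]` and the global model.
    """
    total_dist = sum(distances) or 1.0  # avoid division by zero
    new = list(attention)
    for cid, dist in zip(selected, distances):
        new[cid] = alpha * attention[cid] + (1 - alpha) * dist / total_dist
    norm = sum(new)
    return [a / norm for a in new]  # keep scores a probability vector


# Usage: one simulated round with 10 clients and made-up distances.
rng = random.Random(0)
attention = [0.1] * 10
fraction = dynamic_fraction(round_idx=0, num_rounds=10)
selected = select_clients(attention, fraction, rng)
attention = update_attention(attention, selected, [1.0] * len(selected))
```

Under these assumptions, clients whose local models drift further from the global model receive more attention and are sampled more often, while a small early fraction keeps the initial rounds cheap in communication. The schedule and update rule actually used by $\texttt{AdaFL}$ may differ.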
