DSORT-MCU: Detecting Small Objects in Real-Time on Microcontroller Units

Paper and Code

Oct 22, 2024

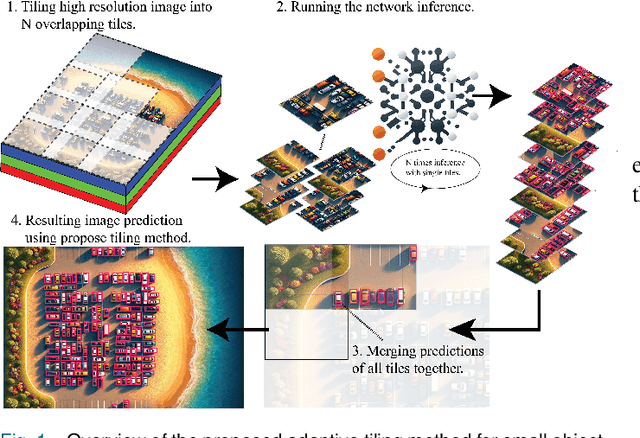

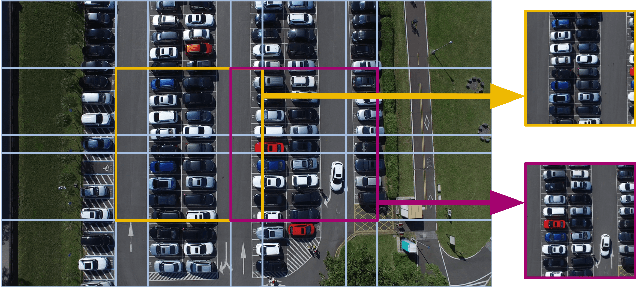

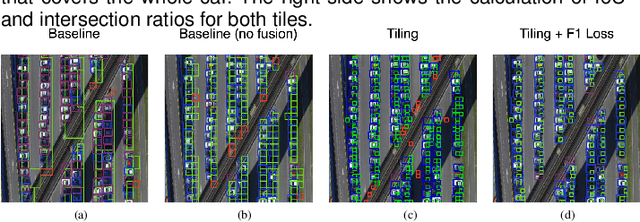

Advances in lightweight neural networks have revolutionized computer vision in a broad range of IoT applications, encompassing remote monitoring and process automation. However, the detection of small objects, which is crucial for many of these applications, remains underexplored in current computer vision research, particularly for low-power embedded devices that host resource-constrained processors. To address this gap, this paper proposes an adaptive tiling method for lightweight and energy-efficient object detection networks, including YOLO-based models and the popular FOMO network. The proposed tiling enables object detection on low-power MCUs with no compromise on accuracy compared to large-scale detection models. The benefit of the proposed method is demonstrated by applying it to the FOMO and TinyissimoYOLO networks on a novel RISC-V-based MCU with built-in ML accelerators. Extensive experimental results show that the proposed tiling method boosts the F1-score by up to 225% for both FOMO and TinyissimoYOLO while reducing the average object count error by up to 76% for FOMO and up to 89% for TinyissimoYOLO. Furthermore, the findings of this work indicate that using a soft F1 loss instead of the popular binary cross-entropy loss can serve as an implicit non-maximum suppression for the FOMO network. To evaluate real-world performance, the networks are deployed on the RISC-V-based GAP9 microcontroller from GreenWaves Technologies, showcasing the proposed method's ability to strike a balance between detection performance (58% to 95% F1 score), low latency (0.6 ms to 16.2 ms per inference), and energy efficiency (31 µJ to 1.27 mJ per inference) while performing multiple predictions on high-resolution images on an MCU.
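The soft F1 loss mentioned in the abstract can be illustrated with a minimal sketch. It uses one common formulation, in which true positives, false positives, and false negatives are computed from predicted probabilities rather than thresholded labels. The abstract does not give the paper's exact definition, so the function name `soft_f1_loss`, the `eps` term, and the flattened per-cell inputs are assumptions made for illustration.

```python
def soft_f1_loss(probs, targets, eps=1e-7):
    """Return 1 - soft F1 for predicted probabilities and binary targets.

    Illustrative formulation only; the paper's exact loss may differ.
    probs:   per-cell predicted probabilities in [0, 1]
    targets: per-cell ground-truth labels (0 or 1)
    """
    if len(probs) != len(targets):
        raise ValueError("probs and targets must have the same length")

    # "Soft" counts: probabilities stand in for hard 0/1 decisions.
    tp = sum(p * t for p, t in zip(probs, targets))
    fp = sum(p * (1 - t) for p, t in zip(probs, targets))
    fn = sum((1 - p) * t for p, t in zip(probs, targets))

    # eps avoids division by zero when there are no positives at all.
    soft_f1 = (2 * tp) / (2 * tp + fp + fn + eps)
    return 1.0 - soft_f1


# One true object, but two cells fire: the extra activation counts as a
# false positive and raises the loss above that of a single clean hit.
duplicate = soft_f1_loss([1.0, 1.0, 0.0], [1, 0, 0])
clean = soft_f1_loss([1.0, 0.0, 0.0], [1, 0, 0])
```

Because every spurious activation adds to the false-positive term, a loss of this shape penalizes duplicate detections next to a true object. That is one plausible reading of why it could act as an implicit non-maximum suppression, whereas binary cross-entropy scores each cell independently.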
