Dsfer-Net: A Deep Supervision and Feature Retrieval Network for Bitemporal Change Detection Using Modern Hopfield Networks


Apr 03, 2023

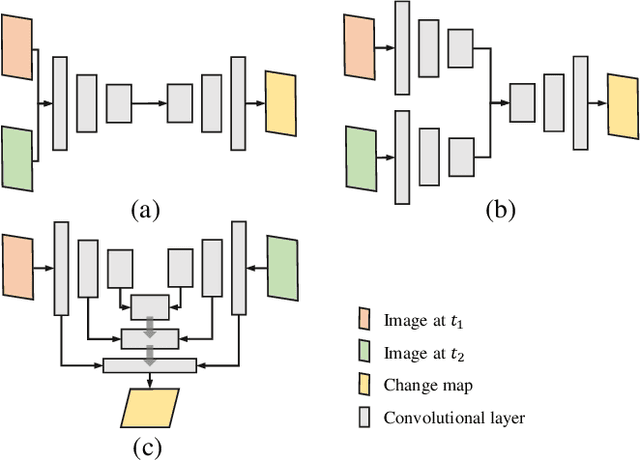

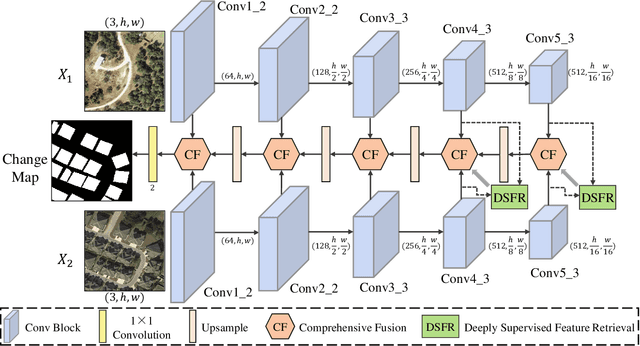

Change detection, an important application of high-resolution remote sensing imagery, aims to monitor and analyze changes in the land surface over time. With the rapid growth in the quantity of high-resolution remote sensing data and in the complexity of texture features, a number of quantitative deep learning-based methods have been proposed. Although these methods outperform traditional change detection methods by extracting deep features and combining spatial-temporal information, a reasonable explanation of how deep features improve detection performance is still lacking. In our investigations, we find that modern Hopfield network layers achieve considerable performance in semantic understanding. In this paper, we propose a Deep Supervision and FEature Retrieval network (Dsfer-Net) for bitemporal change detection. Specifically, the highly representative deep features of the bitemporal images are jointly extracted through a fully convolutional Siamese network. Based on the sequential geo-information of the bitemporal images, we then design a feature retrieval module that retrieves the difference feature and leverages discriminative information in a deeply supervised manner. We also note that the deeply supervised feature retrieval module provides explainable evidence of the semantic understanding of the proposed network in its deep layers. Finally, this end-to-end network forms a novel framework by aggregating the retrieved features and feature pairs from different layers. Experiments conducted on three public datasets (LEVIR-CD, WHU-CD, and CDD) confirm the superiority of the proposed Dsfer-Net over other state-of-the-art methods. Code will be available online (https://github.com/ShizhenChang/Dsfer-Net).
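For readers unfamiliar with modern Hopfield networks, the retrieval step they are built on can be sketched in a few lines. The example below is a generic illustration of the standard update rule, in which a query is replaced by a softmax-weighted sum of stored patterns. It is not the feature retrieval module of Dsfer-Net, and the names `hopfield_retrieve`, `stored_patterns`, `noisy_query`, and the value of `beta` are assumptions chosen for illustration only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def hopfield_retrieve(stored, query, beta=1.0):
    """One modern Hopfield update (illustrative, hypothetical helper).

    Scores each stored pattern by its dot product with the query,
    turns the scaled scores into softmax weights, and returns the
    weighted sum of the stored patterns.
    """
    scores = [
        beta * sum(p * q for p, q in zip(pattern, query))
        for pattern in stored
    ]
    weights = softmax(scores)
    dim = len(query)
    return [
        sum(w * pattern[d] for w, pattern in zip(weights, stored))
        for d in range(dim)
    ]


# Toy data (assumed, not from the paper): three orthogonal stored patterns
# and a query that is a slightly corrupted copy of the first one.
stored_patterns = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
noisy_query = [0.9, 0.1, 0.0]

retrieved = hopfield_retrieve(stored_patterns, noisy_query, beta=8.0)
```

With a larger `beta`, the softmax sharpens and the result moves closer to the single best-matching stored pattern; with a smaller `beta`, the result is a blend of several patterns.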
