DSAM: A Distance Shrinking with Angular Marginalizing Loss for High Performance Vehicle Re-identification

Paper and Code

Nov 25, 2020

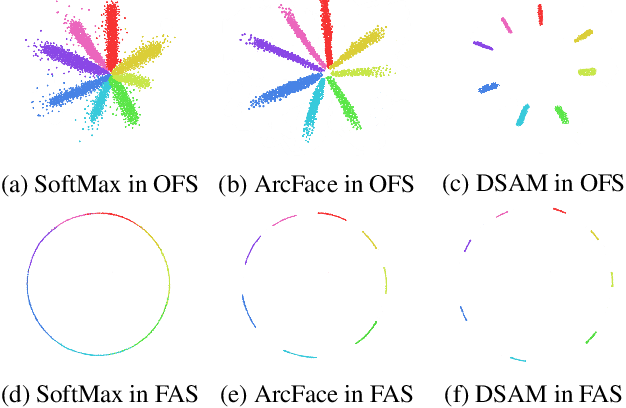

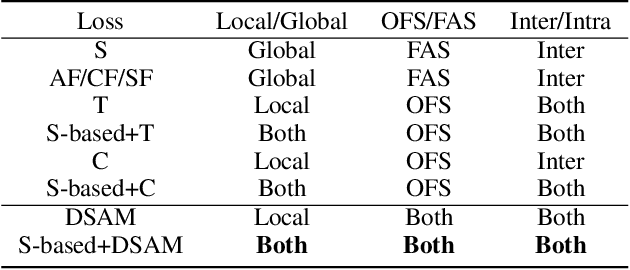

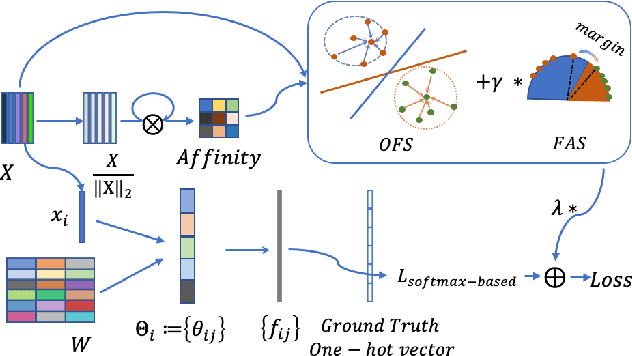

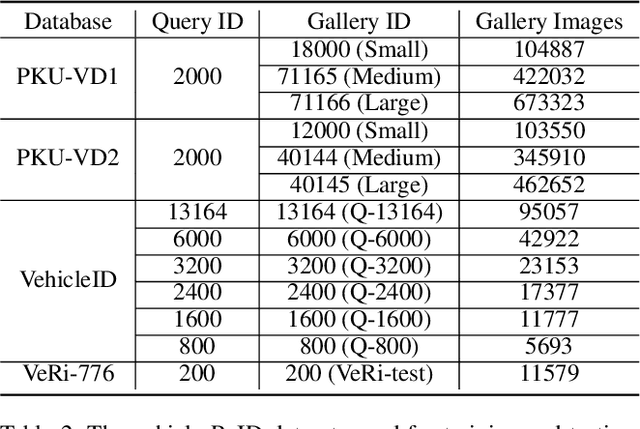

Vehicle Re-identification (ReID) is an important yet challenging problem in computer vision. Compared to other visual objects like faces and persons, vehicles simultaneously exhibit much larger intraclass viewpoint variations and interclass visual similarities, making most existing loss functions designed for face recognition and person ReID unsuitable for vehicle ReID. To obtain a high-performance vehicle ReID model, we present a novel Distance Shrinking with Angular Marginalizing (DSAM) loss function to perform hybrid learning in both the Original Feature Space (OFS) and the Feature Angular Space (FAS) using the local verification and the global identification information. Specifically, it shrinks the distance between samples of the same class locally in the Original Feature Space while keeping samples of different classes far apart in the Feature Angular Space. The shrinking and marginalizing operations are performed during each iteration of the training process and are suitable for different SoftMax-based loss functions. We evaluate the DSAM loss function on three large vehicle ReID datasets with detailed analyses and extensive comparisons with many competing vehicle ReID methods. Experimental results show that our DSAM loss enhances the SoftMax loss by a large margin on the PKU-VD1-Large dataset: 10.41% for mAP, 5.29% for cmc1, and 4.60% for cmc5. Moreover, the mAP is increased by 9.34% on the PKU-VehicleID dataset and 8.73% on the VeRi-776 dataset. Source code will be released to facilitate further studies in this research direction.
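The abstract does not give the DSAM formula, so the following is only a minimal sketch of the general idea it describes: an identification term with a margin in the angular space, combined with a term that shrinks same-class distances in the original feature space. The function name `hybrid_loss_sketch`, the CosFace-style additive cosine margin standing in for the angular margin, the squared Euclidean shrinking term, and the parameters `margin`, `scale`, and `shrink_weight` are all assumptions made for illustration and are not taken from the paper.

```python
import numpy as np

def hybrid_loss_sketch(features, labels, class_weights,
                       margin=0.35, scale=30.0, shrink_weight=0.5):
    """Illustrative hybrid loss (NOT the paper's exact DSAM formulation).

    features:      (batch, dim) embeddings in the original feature space
    labels:        (batch,) integer class ids
    class_weights: (classes, dim) classifier weights
    """
    # Angular space: cosine similarity between L2-normalized features and weights.
    f_norm = features / np.linalg.norm(features, axis=1, keepdims=True)
    w_norm = class_weights / np.linalg.norm(class_weights, axis=1, keepdims=True)
    cos = f_norm @ w_norm.T  # shape (batch, classes)

    # Assumed margin form: subtract a fixed margin from the target-class cosine.
    one_hot = np.eye(class_weights.shape[0])[labels]
    logits = scale * (cos - margin * one_hot)

    # Numerically stable softmax cross-entropy (global identification term).
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    identification = -log_probs[np.arange(len(labels)), labels].mean()

    # Original feature space: mean squared distance over same-class pairs
    # within the batch (local verification term, assumed form).
    diffs = features[:, None, :] - features[None, :, :]
    sq_dists = (diffs ** 2).sum(axis=2)
    same = (labels[:, None] == labels[None, :]) & ~np.eye(len(labels), dtype=bool)
    shrink = sq_dists[same].mean() if same.any() else 0.0

    total = identification + shrink_weight * shrink
    return total, identification, shrink
```

In this sketch, setting `margin=0.0` and `shrink_weight=0.0` reduces the loss to a plain normalized SoftMax cross-entropy, which makes it easy to see what each added term contributes.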
