Driving Anomaly Detection Using Conditional Generative Adversarial Network

Paper and Code

Mar 15, 2022

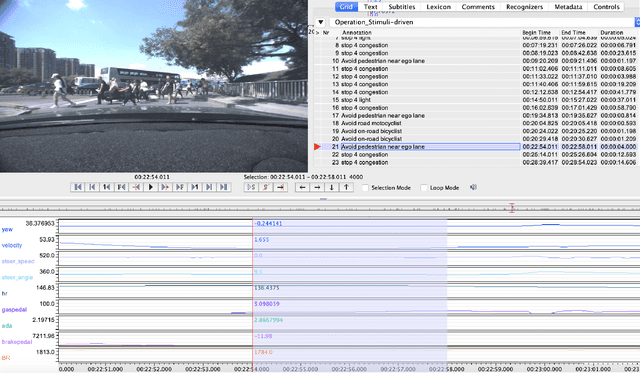

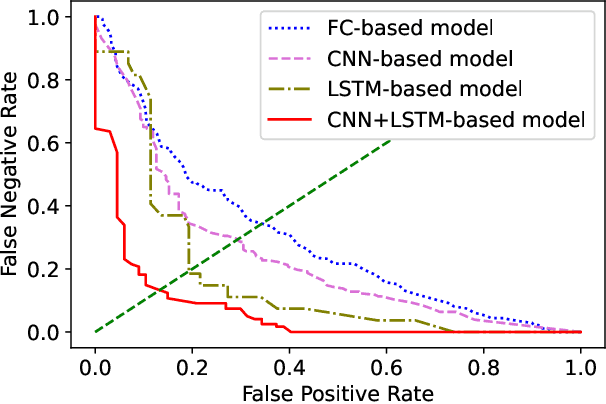

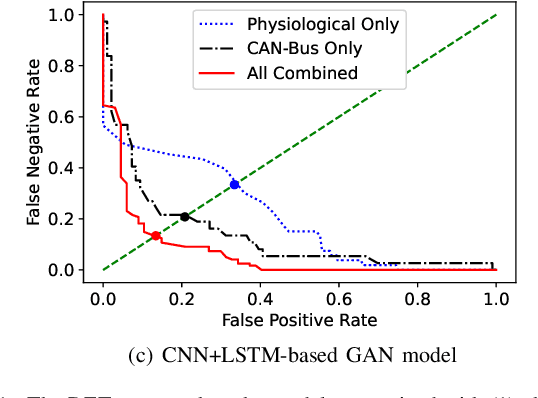

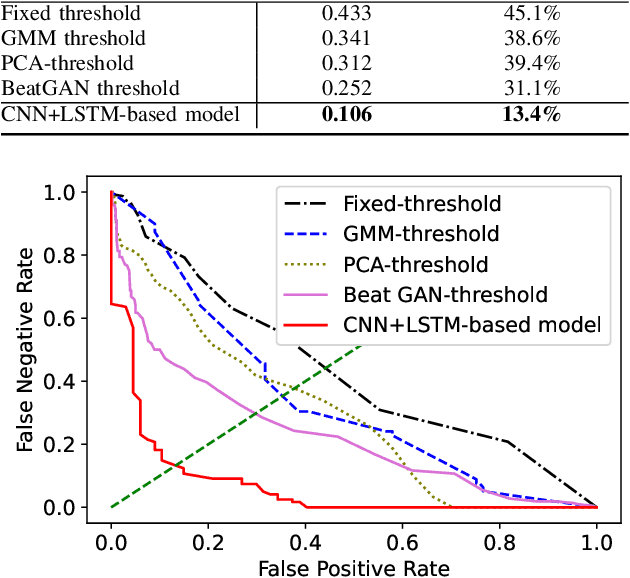

Driving anomaly detection is an important problem in advanced driver assistance systems (ADAS), where hazardous scenarios must be identified as early as possible to avoid accidents. This study proposes an unsupervised method to quantify driving anomalies using a conditional generative adversarial network (GAN). The approach predicts upcoming driving scenarios by conditioning the models on the previously observed signals. The system uses the difference between the discriminator's outputs for the predicted and actual signals as a metric to quantify the anomaly degree of a driving segment. We take a driver-centric approach, considering physiological signals from the driver and controller area network bus (CAN-Bus) signals from the vehicle. The approach is implemented with convolutional neural networks (CNNs) to extract discriminative feature representations, and with long short-term memory (LSTM) cells to capture temporal information. The study is implemented and evaluated with the driving anomaly dataset (DAD), which includes 250 hours of naturalistic recordings manually annotated with driving events. The experimental results reveal that recordings annotated with events that are likely to be anomalous, such as avoiding on-road pedestrians and traffic rule violations, have higher anomaly scores than recordings without any event annotation. The results are validated with perceptual evaluations, where annotators are asked to assess the risk and familiarity of the videos detected with high anomaly scores. These evaluations indicate that driving segments with higher anomaly scores are riskier and less commonly seen on the road than other driving segments, validating the proposed unsupervised approach.
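The scoring idea in the abstract (compare the discriminator's output on the predicted signals with its output on the actual signals) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: `toy_generator` and `toy_discriminator` are hypothetical stand-ins for the paper's CNN/LSTM models, the window shapes and weights are arbitrary, and taking the absolute difference is one plausible reading of "difference", not necessarily the authors' exact formulation.

```python
import numpy as np


def toy_generator(past):
    """Hypothetical stand-in for the conditional generator.

    Predicts the upcoming window by repeating the last observed frame
    (a persistence forecast), with the same shape as `past`.
    """
    return np.repeat(past[-1:], past.shape[0], axis=0)


def toy_discriminator(past, future, weights):
    """Hypothetical stand-in for the conditional discriminator.

    Returns a logistic score in (0, 1) computed from the concatenated
    condition window (`past`) and candidate window (`future`).
    """
    features = np.concatenate([past.ravel(), future.ravel()])
    return 1.0 / (1.0 + np.exp(-(features @ weights)))


def anomaly_score(past, actual_future, weights):
    """Absolute difference between the discriminator outputs for the
    actual and the predicted upcoming windows (assumed formulation)."""
    predicted_future = toy_generator(past)
    d_pred = toy_discriminator(past, predicted_future, weights)
    d_real = toy_discriminator(past, actual_future, weights)
    return abs(d_real - d_pred)


# Toy data: 4 time steps, 2 signal channels (e.g. one CAN-Bus and one
# physiological channel); the values are made up for illustration.
past = np.ones((4, 2))
weights = np.full(16, 0.1)

expected_future = np.ones((4, 2))      # matches the prediction
surprising_future = np.ones((4, 2)) + 3.0  # deviates from the prediction

score_expected = anomaly_score(past, expected_future, weights)
score_surprising = anomaly_score(past, surprising_future, weights)
```

In this toy setup, a segment that unfolds as predicted gets a score of zero, while a segment that deviates from the prediction gets a larger score. In the paper's setting the generator and discriminator would be trained networks rather than fixed functions.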
