DPOAD: Differentially Private Outsourcing of Anomaly Detection through Iterative Sensitivity Learning


Jun 27, 2022

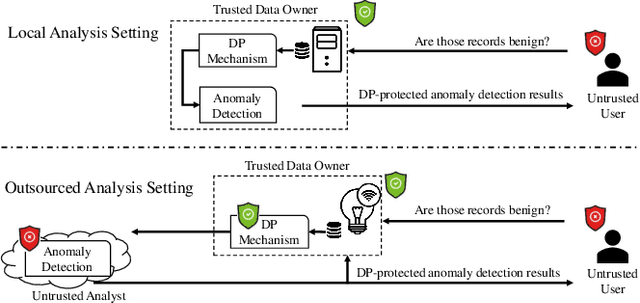

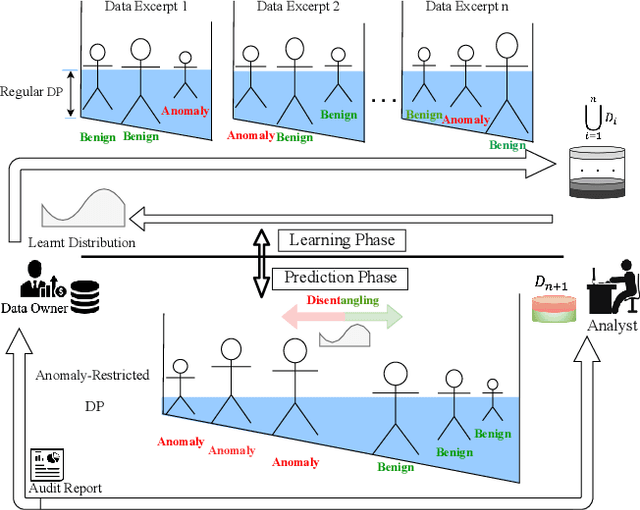

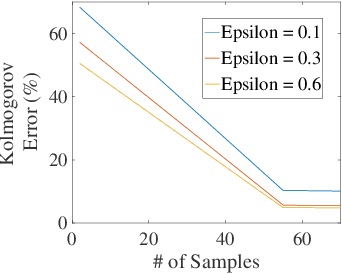

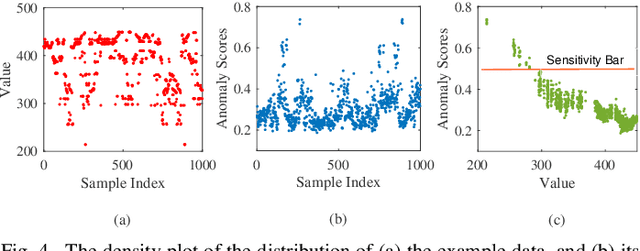

Outsourcing anomaly detection to third parties can allow data owners to overcome resource constraints (e.g., in lightweight IoT devices), facilitate collaborative analysis (e.g., under distributed or multi-party scenarios), and benefit from lower costs and specialized expertise (e.g., of Managed Security Service Providers). Despite such benefits, a data owner may be reluctant to outsource anomaly detection without sufficient privacy protection. Here, most existing privacy solutions face a new challenge: preserving privacy usually requires the difference between data entries to be eliminated or reduced, whereas anomaly detection critically depends on that difference. This conflict was recently resolved under a local analysis setting with trusted analysts (where no outsourcing is involved) by moving the focus of the differential privacy (DP) guarantee from "all" entries to only the "benign" ones. In this paper, we observe that such an approach is not directly applicable to the outsourcing setting, because data owners do not know which entries are "benign" prior to outsourcing, and hence cannot selectively apply DP to data entries. Therefore, we propose a novel iterative solution for the data owner to gradually "disentangle" the anomalous entries from the benign ones, such that the third-party analyst can produce accurate anomaly results with a sufficient DP guarantee. We design and implement our Differentially Private Outsourcing of Anomaly Detection (DPOAD) framework, and demonstrate its benefits over baseline Laplace and PainFree mechanisms through experiments with real data from different application domains.
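To make the conflict between privacy and anomaly detection concrete, the following is a minimal sketch of a generic Laplace mechanism applied uniformly to every entry, which is the kind of baseline the abstract compares against. It is not the DPOAD algorithm. The function names, the clipping bounds, the sample readings, and the choice of epsilon are all assumptions made for illustration.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. Exp(1) draws follows Laplace(0, 1);
    # multiplying by `scale` gives Laplace(0, scale).
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def laplace_mechanism(values, lower, upper, epsilon, rng):
    """Clip each entry to [lower, upper], then add Laplace noise.

    The noise scale is (upper - lower) / epsilon, i.e. the width of the
    clipping range is used as the sensitivity (an assumption of this sketch).
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = (upper - lower) / epsilon
    return [min(max(v, lower), upper) + laplace_noise(scale, rng) for v in values]


# Hypothetical readings: four benign entries and one anomalous entry (95.0).
rng = random.Random(0)
readings = [10.0, 11.0, 9.5, 10.5, 95.0]
noisy = laplace_mechanism(readings, lower=0.0, upper=100.0, epsilon=1.0, rng=rng)
print(noisy)
```

With a clipping range of 100 and epsilon set to 1, the noise scale is 100, which is comparable to the gap between the benign readings and the anomalous one. This illustrates why noise applied to all entries alike tends to hide the very difference an analyst needs in order to detect anomalies.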
