Does Fair Ranking Improve Minority Outcomes? Understanding the Interplay of Human and Algorithmic Biases in Online Hiring

Dec 01, 2020

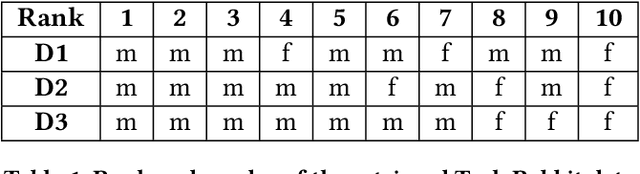

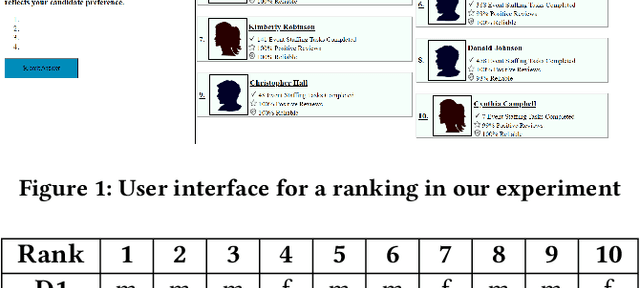

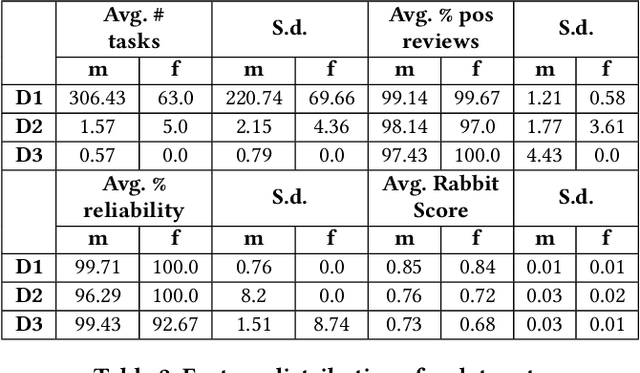

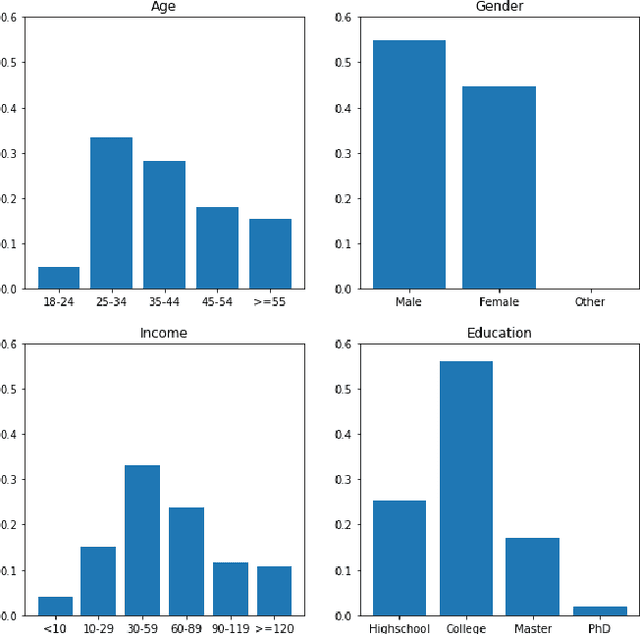

Ranking algorithms are widely employed on online hiring platforms, including LinkedIn, TaskRabbit, and Fiverr. Since these platforms affect the livelihoods of millions of people, it is important to ensure that the underlying algorithms do not adversely affect minority groups. However, prior research has demonstrated that the ranking algorithms employed by these platforms are prone to a variety of undesirable biases. To address this problem, fair ranking algorithms (e.g., Det-Greedy), which increase the exposure of underrepresented candidates, have been proposed in recent literature. Yet there is little to no work exploring whether these fair ranking algorithms actually improve real-world outcomes (e.g., hiring decisions) for minority groups. Furthermore, there is no clear understanding of how other factors (e.g., job context, inherent biases of employers) affect the real-world outcomes of minority groups. In this work, we study how gender biases manifest in online hiring platforms and how they impact real-world hiring decisions. More specifically, we analyze several sources of gender bias, including the nature of the ranking algorithm, the job context, and the inherent biases of employers, and establish how these factors interact and affect real-world hiring decisions. To this end, we experiment with three different ranking algorithms in three different job contexts using real-world data from TaskRabbit. We simulate hiring scenarios on TaskRabbit by carrying out a large-scale user study on Amazon Mechanical Turk. We then use the responses from this study to understand the effect of each of the aforementioned factors. Our results demonstrate that fair ranking algorithms can be an effective tool for increasing the hiring of candidates of the underrepresented gender, but that they induce inconsistent outcomes across candidate features and job contexts.
