Does deep learning always outperform simple linear regression in optical imaging?


Oct 31, 2019

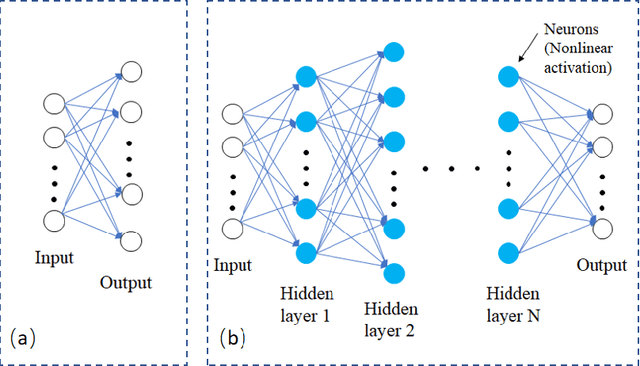

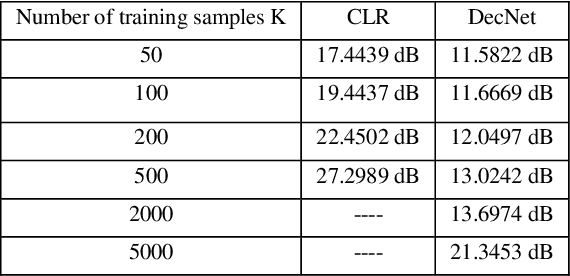

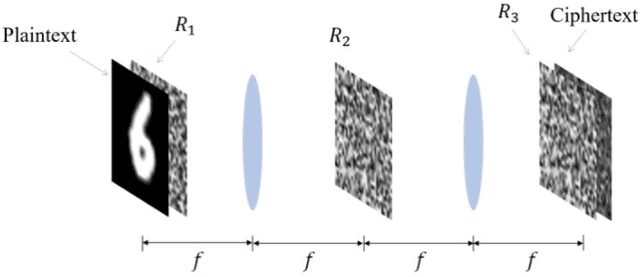

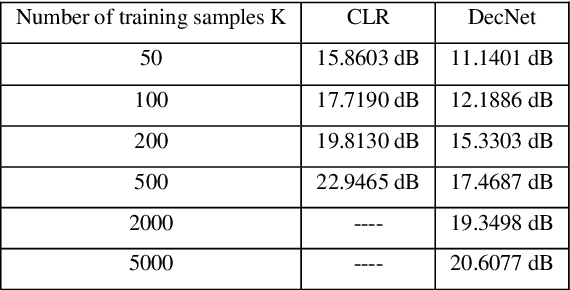

Deep learning has been applied extensively to optical imaging in recent years. Despite this success, its limitations and drawbacks in optical imaging have seldom been investigated. In this work, we show that conventional linear-regression-based methods can, to some extent, outperform previously proposed deep learning approaches on two black-box optical imaging problems. The weakness of deep learning is especially apparent when the number of training samples is small. We analyze and compare the advantages and disadvantages of linear-regression-based methods and deep learning. Since many optical systems are essentially linear, a deep learning network containing many nonlinear functions may not always be the most suitable option.
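To make the linearity argument concrete, here is a minimal sketch of a linear-regression reconstruction for a black-box linear system. It is not the authors' code or experimental setup: the simulated system matrix `A`, the sizes, the noise level, and the ridge parameter `lam` are all hypothetical choices made for illustration. The idea is only that, if measurements depend linearly on the object, an inverse mapping can be fitted from a modest number of training pairs by regularized least squares.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
n_pixels = 16    # flattened object size
n_measure = 32   # number of detector readings
n_train = 200    # number of training pairs
n_test = 20

# Hypothetical linear optical system. The regression below never sees A;
# it only sees (measurement, object) pairs, i.e. the system is a black box.
A = rng.normal(size=(n_measure, n_pixels))

def measure(x):
    """Simulate noisy measurements; each row of x is one flattened object."""
    noise = 0.01 * rng.normal(size=(x.shape[0], n_measure))
    return x @ A.T + noise

X_train = rng.random((n_train, n_pixels))
Y_train = measure(X_train)
X_test = rng.random((n_test, n_pixels))
Y_test = measure(X_test)

# Ridge regression for the inverse mapping W (measurements -> object):
#   W = argmin_W ||Y_train W - X_train||^2 + lam * ||W||^2
lam = 1e-3  # assumed regularization strength
gram = Y_train.T @ Y_train + lam * np.eye(n_measure)
W = np.linalg.solve(gram, Y_train.T @ X_train)

# Reconstruct unseen objects from their measurements.
X_hat = Y_test @ W
rel_err = np.linalg.norm(X_hat - X_test) / np.linalg.norm(X_test)
print(f"relative reconstruction error: {rel_err:.4f}")
```

Under these assumptions the fit has a closed-form solution with a single tunable parameter, which is one reason a linear model can remain usable when training pairs are scarce. A real optical system would add effects this simulation ignores, such as intensity-only detection or detector saturation.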
