Do Deep Neural Networks Forget Facial Action Units? -- Exploring the Effects of Transfer Learning in Health Related Facial Expression Recognition

Paper and Code

Apr 15, 2021

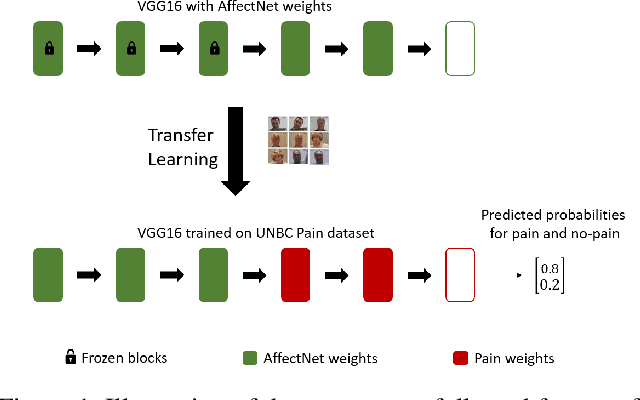

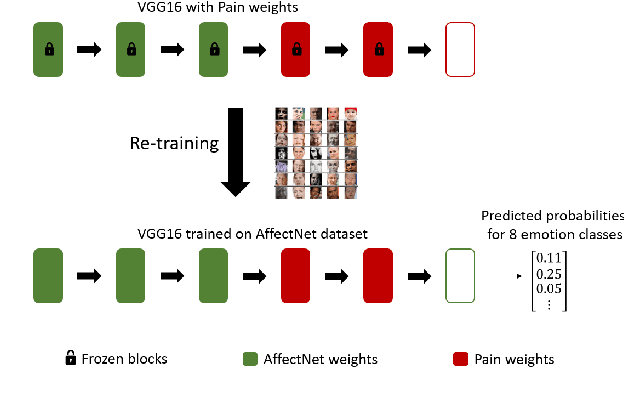

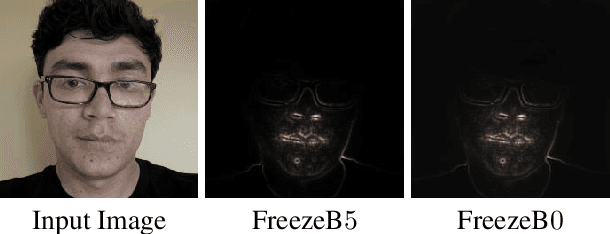

In this paper, we present a process to investigate the effects of transfer learning for automatic facial expression recognition from emotions to pain. To this end, we first train a VGG16 convolutional neural network to automatically discern between eight categorical emotions. We then fine-tune successively larger parts of this network to learn suitable representations for the task of automatic pain recognition. Subsequently, we apply those fine-tuned representations again to the original task of emotion recognition to further investigate the differences in performance between the models. In the second step, we use Layer-wise Relevance Propagation to analyze predictions of the model that have been predicted correctly previously but are now wrongly classified. Based on this analysis, we rely on the visual inspection of a human observer to generate hypotheses about what has been forgotten by the model. Finally, we test those hypotheses quantitatively utilizing concept embedding analysis methods. Our results show that the network, which was fully fine-tuned for pain recognition, indeed payed less attention to two action units that are relevant for expression recognition but not for pain recognition.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge