DNN or $k$-NN: That is the Generalize vs. Memorize Question


May 28, 2018

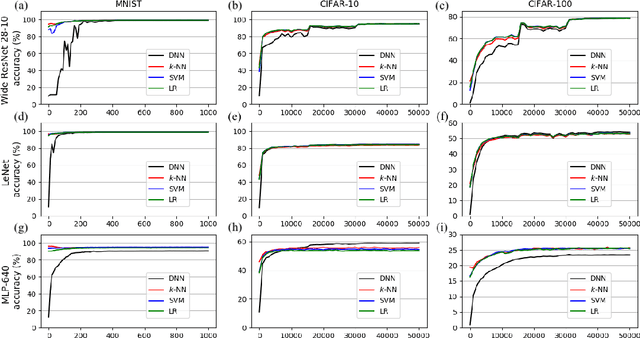

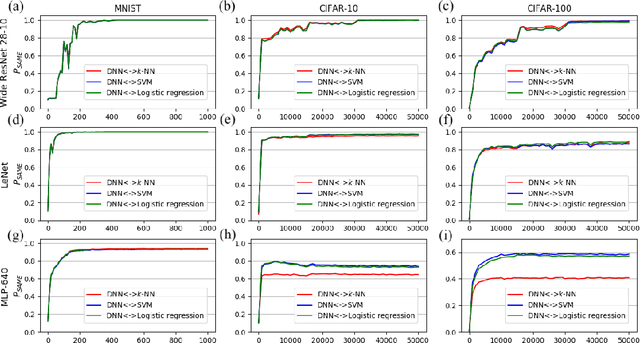

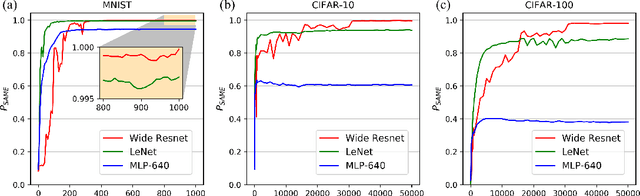

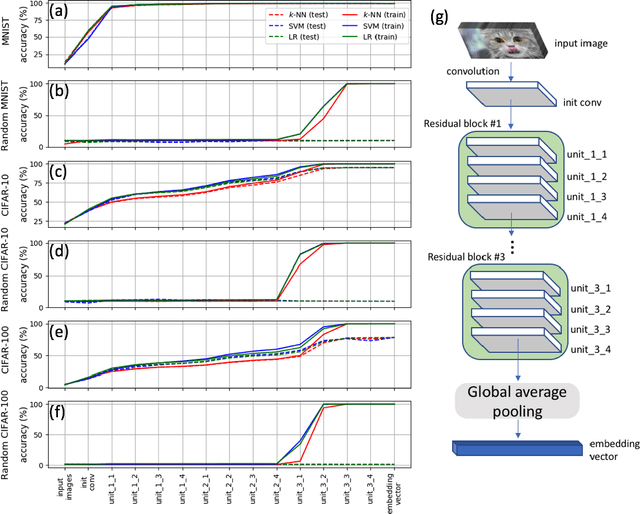

This paper studies the relationship between the classification performed by deep neural networks and the $k$-NN decision in the embedding space of these networks. The simple but important connection shown here provides a better understanding of the relationship between the ability of neural networks to generalize and their tendency to memorize the training data. These two properties are traditionally considered contradictory, but are shown here to be compatible and complementary. Our results support the conjecture that deep neural networks approach Bayes optimal error rates.
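As a rough illustration of the comparison described above, the following sketch checks how often a classifier's decision agrees with a $k$-NN vote over training embeddings. It is not the paper's code: the function names `knn_predict` and `agreement_rate` are hypothetical, the embeddings are synthetic Gaussian clusters, and a nearest-class-center rule stands in for the network's final classification layer.

```python
import numpy as np

def knn_predict(train_emb, train_labels, query_emb, k=5):
    """Majority-vote k-NN over embeddings, using squared Euclidean distance."""
    # Pairwise squared distances, shape (n_query, n_train).
    d2 = ((query_emb[:, None, :] - train_emb[None, :, :]) ** 2).sum(axis=2)
    # Indices of the k closest training points for each query.
    nn_idx = np.argsort(d2, axis=1)[:, :k]
    nn_labels = train_labels[nn_idx]
    n_classes = int(train_labels.max()) + 1
    # Count the votes per class; ties go to the lowest class index.
    votes = np.stack(
        [(nn_labels == c).sum(axis=1) for c in range(n_classes)], axis=1
    )
    return votes.argmax(axis=1)

def agreement_rate(pred_a, pred_b):
    """Fraction of samples on which two label arrays coincide."""
    return float(np.mean(np.asarray(pred_a) == np.asarray(pred_b)))

# Synthetic "embeddings" (assumption: three Gaussian clusters in 2-D).
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [4.0, 4.0], [0.0, 4.0]])
train_labels = rng.integers(0, 3, size=300)
train_emb = centers[train_labels] + rng.normal(size=(300, 2))
test_labels = rng.integers(0, 3, size=100)
test_emb = centers[test_labels] + rng.normal(size=(100, 2))

# Stand-in for the network's decision: nearest class center.
dist_to_centers = ((test_emb[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
net_pred = dist_to_centers.argmin(axis=1)

knn_pred = knn_predict(train_emb, train_labels, test_emb, k=5)
print("agreement between classifier and k-NN:", agreement_rate(net_pred, knn_pred))
```

With a real network, `train_emb` and `test_emb` would be activations taken from the layer before the classifier, and `net_pred` would be the network's own predicted labels.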

* The paper is currently under review for the NIPS 2018 conference.
