DMODE: Differential Monocular Object Distance Estimation Module without Class Specific Information

Paper and Code

Oct 23, 2022

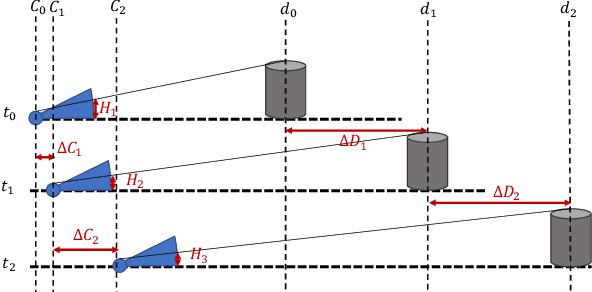

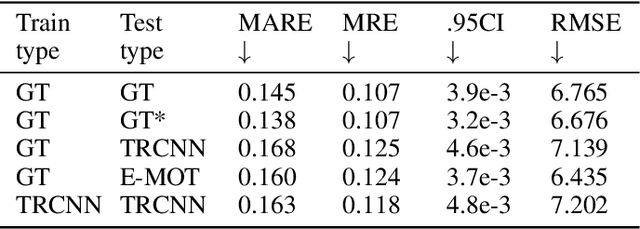

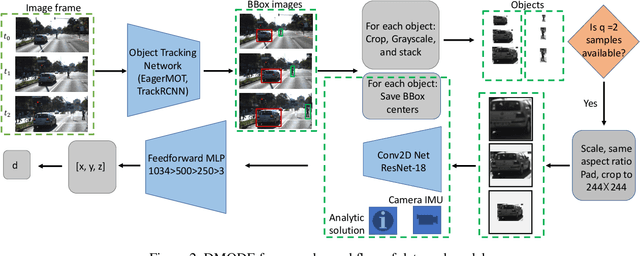

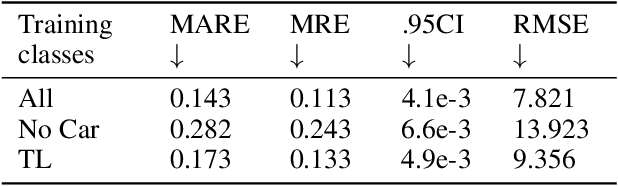

Using a single camera to estimate the distances of objects reduces costs compared to stereo-vision and LiDAR. Although monocular distance estimation has been studied in the literature, previous methods mostly rely on knowing an object's class in some way. This can result in deteriorated performance for dataset with multi-class objects and objects with an undefined class. In this paper, we aim to overcome the potential downsides of class-specific approaches, and provide an alternative technique called DMODE that does not require any information relating to its class. Using differential approaches, we combine the changes in an object's size over time together with the camera's motion to estimate the object's distance. Since DMODE is class agnostic method, it is easily adaptable to new environments. Therefore, it is able to maintain performance across different object detectors, and be easily adapted to new object classes. We tested our model across different scenarios of training and testing on the KITTI MOTS dataset's ground-truth bounding box annotations, and bounding box outputs of TrackRCNN and EagerMOT. The instantaneous change of bounding box sizes and camera position are then used to obtain an object's position in 3D without measuring its detection source or class properties. Our results show that we are able to outperform traditional alternatives methods e.g. IPM \cite{TuohyIPM}, SVR \cite{svr}, and \cite{zhu2019learning} in test environments with multi-class object distance detections.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge