DMCA: Dense Multi-agent Navigation using Attention and Communication

Paper and Code

Sep 28, 2022

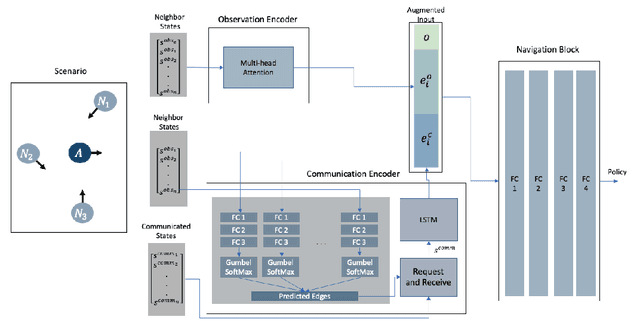

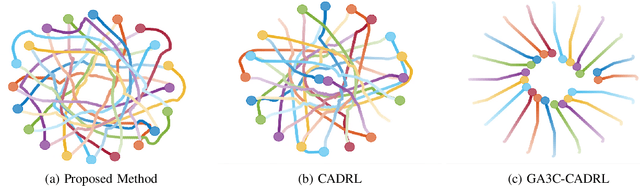

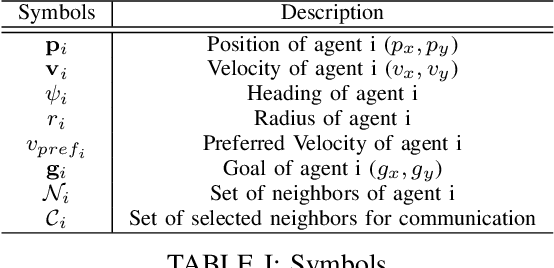

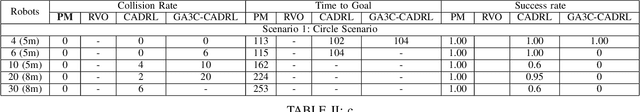

In decentralized multi-robot navigation, agents lack the world knowledge needed to reliably make safe and (near-)optimal plans, and must base their decisions on their neighbors' observable states. We present a reinforcement-learning-based multi-agent navigation algorithm that uses inter-agent communication. To handle the variable number of neighbors around each agent, we use a multi-head self-attention mechanism that encodes neighbor information into a fixed-length observation vector. We pose communication selection as a link prediction problem, in which the network predicts whether communication with a neighbor is necessary given the observable information. The communicated information augments the observed neighbor information and is used to select a suitable navigation plan. We demonstrate the benefits of our approach with safe and efficient navigation among multiple robots in dense and challenging benchmarks. We also compare its performance with other learning-based methods and show fewer collisions and lower time-to-goal in dense scenarios.
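The two mechanisms named in the abstract can be illustrated with a small NumPy sketch. It is not the authors' network: the class `NeighborEncoder`, the function `predict_links`, the mean pooling over neighbors, the logistic link scorer, and the randomly initialized weights are all assumptions made for illustration. The sketch only shows how self-attention followed by pooling maps any number of neighbor states to a fixed-length vector, and how a per-neighbor binary prediction can decide which communication links to open.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


class NeighborEncoder:
    """Hypothetical multi-head self-attention encoder over neighbor states.

    Weights are random here; in a learned policy they would be trained.
    """

    def __init__(self, state_dim, num_heads=2, head_dim=4, seed=0):
        rng = np.random.default_rng(seed)
        shape = (num_heads, state_dim, head_dim)
        self.w_q = rng.normal(scale=0.1, size=shape)  # query projections
        self.w_k = rng.normal(scale=0.1, size=shape)  # key projections
        self.w_v = rng.normal(scale=0.1, size=shape)  # value projections
        self.out_dim = num_heads * head_dim

    def encode(self, neighbors):
        """Map an (n, state_dim) array, for any n, to an (out_dim,) vector."""
        neighbors = np.asarray(neighbors, dtype=float)
        if neighbors.shape[0] == 0:
            # No neighbors observed: return an all-zero encoding (assumption).
            return np.zeros(self.out_dim)
        # Per-head projections, each of shape (heads, n, head_dim).
        q = np.einsum("nd,hde->hne", neighbors, self.w_q)
        k = np.einsum("nd,hde->hne", neighbors, self.w_k)
        v = np.einsum("nd,hde->hne", neighbors, self.w_v)
        # Scaled dot-product attention among the neighbors: (heads, n, n).
        scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
        mixed = softmax(scores, axis=-1) @ v  # (heads, n, head_dim)
        # Mean-pool over neighbors, then concatenate heads: fixed length.
        return mixed.mean(axis=1).reshape(-1)


def predict_links(own_state, neighbors, w, b, threshold=0.5):
    """Hypothetical link predictor: one communicate/skip decision per neighbor.

    Uses a logistic score on the concatenated (own, neighbor) observable states.
    """
    neighbors = np.asarray(neighbors, dtype=float)
    own = np.broadcast_to(own_state, (neighbors.shape[0], len(own_state)))
    features = np.concatenate([own, neighbors], axis=1)
    prob = 1.0 / (1.0 + np.exp(-(features @ w + b)))
    return prob > threshold
```

Because the attention output is averaged over neighbors, the encoding has the same length for three neighbors as for thirty and does not depend on the order in which neighbors are listed. Other pooling choices, such as attending from the agent's own state, would preserve the fixed length as well.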
