Distributionally Robust $k$-Nearest Neighbors for Few-Shot Learning

Jun 07, 2020

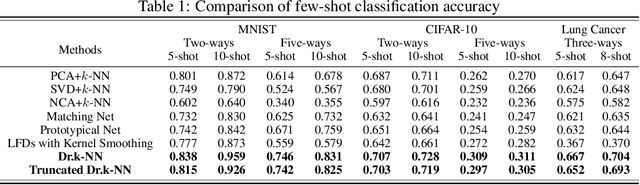

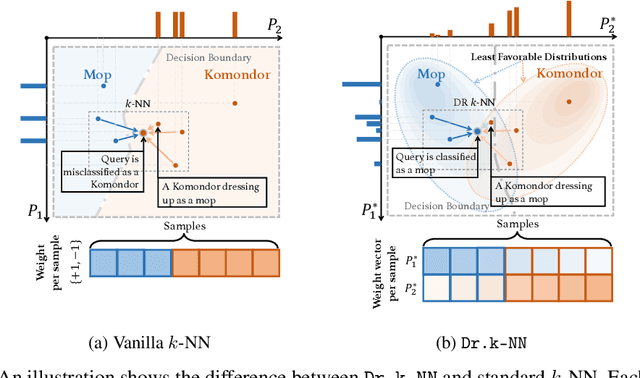

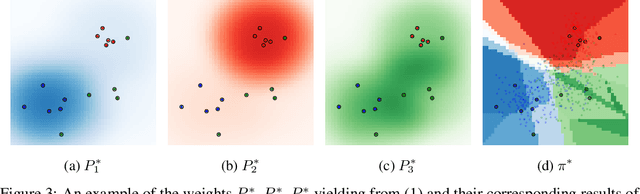

Learning a robust classifier from a few samples remains a key challenge in machine learning. A major thrust of research in few-shot classification has been based on metric learning, which captures similarities between samples and then applies the $k$-nearest neighbor algorithm. To make such an algorithm more robust, we propose a distributionally robust $k$-nearest neighbor algorithm, Dr.k-NN, which assigns minimax optimal weights to training samples when performing classification. We also couple it with neural-network-based feature embedding. Through various real-data experiments, we demonstrate that our algorithm performs competitively with the state of the art in the few-shot learning setting.
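To illustrate what a weighted $k$-nearest neighbor vote looks like in general, here is a minimal sketch. It assumes the per-sample weights are already given. The abstract does not describe the minimax optimization that Dr.k-NN uses to compute its weights, so that step is not implemented here. The function name `weighted_knn_predict`, the Euclidean distance, and the toy data are all hypothetical choices made for illustration and are not taken from the paper.

```python
import math
from collections import defaultdict


def weighted_knn_predict(query, samples, labels, weights, k):
    """Classify `query` by a weighted vote among its k nearest samples.

    `weights` holds one non-negative weight per training sample. In this
    sketch they are simply supplied by the caller (an assumption); they
    are NOT the minimax optimal weights of the paper.
    """
    # Euclidean distance from the query to every training sample.
    distances = [math.dist(query, s) for s in samples]
    # Indices of the k closest training samples.
    nearest = sorted(range(len(samples)), key=lambda i: distances[i])[:k]
    # Each neighbor adds its weight to the tally of its own label.
    votes = defaultdict(float)
    for i in nearest:
        votes[labels[i]] += weights[i]
    # The label with the largest accumulated weight wins.
    return max(votes, key=votes.get)


# Toy data, invented for illustration only.
samples = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0), (5.0, 6.0)]
labels = ["a", "a", "b", "b", "b"]
query = (0.2, 0.2)

uniform = [1.0] * len(samples)
reweighted = [0.1, 0.1, 1.0, 1.0, 1.0]

pred_uniform = weighted_knn_predict(query, samples, labels, uniform, k=3)
pred_reweighted = weighted_knn_predict(query, samples, labels, reweighted, k=3)
```

With uniform weights the vote reduces to ordinary majority-vote $k$-NN. Changing the weights can flip the prediction even though the neighbors stay the same, which is why the choice of weights matters when only a few samples are available.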
