Distributed Online Learning for Joint Regret with Communication Constraints


Feb 15, 2021

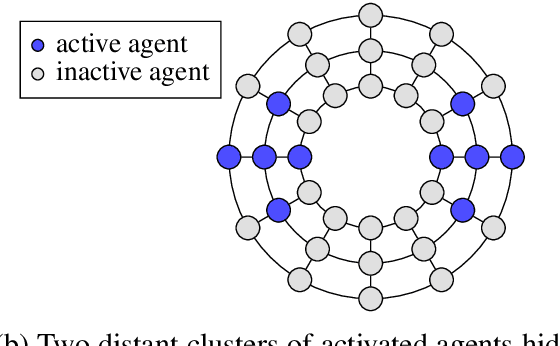

We consider a distributed online learning setting for joint regret with communication constraints. In this multi-agent setting, an adversary activates an agent in each round $t$, and that agent must issue a prediction. A subset of the agents may then send a $b$-bit message to their neighbors in a graph. All agents cooperate to control the joint regret, which is the sum of the losses of the agents minus the losses evaluated at the best fixed common comparator parameters $\pmb{u}$. We provide a comparator-adaptive algorithm for this setting, meaning that the joint regret scales with the norm of the comparator $\|\pmb{u}\|$. To address the communication constraints, we provide deterministic and stochastic gradient compression schemes and show that, with these schemes, our algorithm has worst-case optimal regret in the case where all agents communicate in every round. Additionally, we exploit the comparator-adaptive property of our algorithm to learn the best partition from a set of candidate partitions, which allows different subsets of agents to learn different comparators.
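To make the idea of a $b$-bit stochastic gradient compression concrete, the following is a minimal sketch of a generic unbiased randomized quantizer for a single bounded gradient coordinate. It is not taken from the paper and may differ from the schemes analyzed there. The function names `stochastic_quantize` and `dequantize`, the assumed bound `bound` on the gradient magnitude, and the uniform grid of $2^b$ levels are all illustrative assumptions.

```python
import random


def stochastic_quantize(g, bound, bits, rng=random):
    """Encode a scalar g as an integer code that fits in `bits` bits.

    Assumption (not from the paper): g lies in [-bound, bound] and is
    mapped onto a uniform grid of 2**bits points. Rounding up or down is
    randomized so that the decoded value equals g in expectation.
    """
    g = max(-bound, min(bound, g))        # clip to the assumed range
    intervals = 2 ** bits - 1             # number of gaps between grid points
    pos = (g + bound) / (2 * bound) * intervals
    low = int(pos)                        # floor, since pos >= 0
    if low >= intervals:                  # g sits on the top grid point
        return intervals
    frac = pos - low                      # distance above the lower grid point
    return low + (1 if rng.random() < frac else 0)


def dequantize(code, bound, bits):
    """Map an integer code back to its grid point in [-bound, bound]."""
    intervals = 2 ** bits - 1
    return -bound + 2 * bound * code / intervals


if __name__ == "__main__":
    rng = random.Random(0)
    code = stochastic_quantize(0.3, bound=1.0, bits=2, rng=rng)
    print(code, dequantize(code, bound=1.0, bits=2))
```

A receiving agent would decode the integer and use the result as a noisy but unbiased gradient estimate. Fewer bits mean a coarser grid and therefore more variance in that estimate.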
