Distributed Learning on Heterogeneous Resource-Constrained Devices


Jun 09, 2020

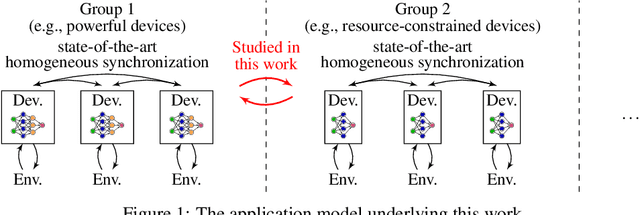

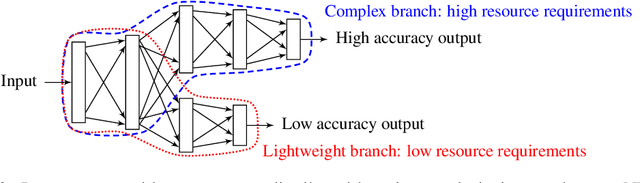

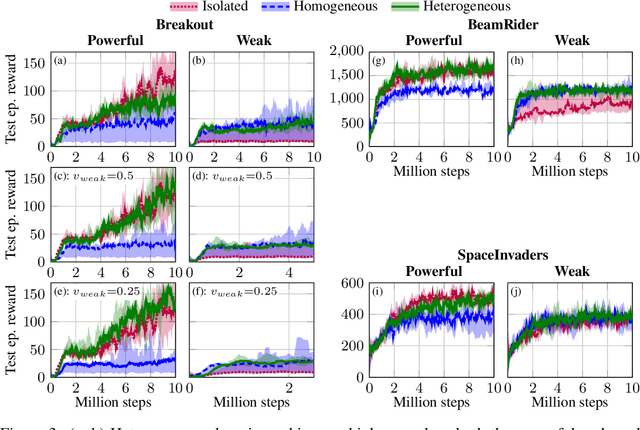

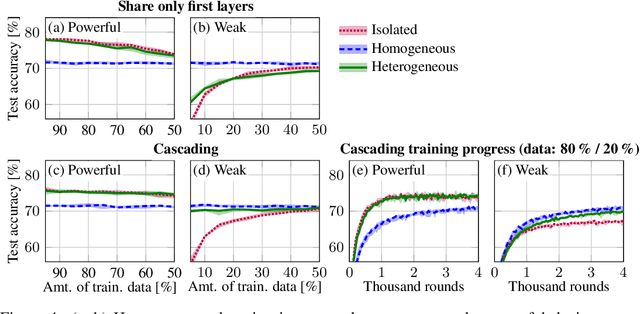

We consider a distributed system consisting of a heterogeneous set of devices, ranging from low-end to high-end. These devices have different profiles, e.g., different energy budgets or hardware specifications, which determine their capability to perform certain learning tasks. We propose the first approach that enables distributed learning in such a heterogeneous system. With our approach, each device employs a neural network (NN) with a topology that fits its capabilities; however, parts of these NNs share the same topology, so that their parameters can be learned jointly. This differs from current approaches, such as federated learning, which require all devices to employ the same NN and thereby enforce a trade-off between achievable accuracy and the computational overhead of training. We evaluate heterogeneous distributed learning for reinforcement learning (RL) and observe that, compared to current approaches, it greatly improves the achievable reward on more powerful devices while still maintaining a high reward on weaker devices. We also explore supervised learning and observe similar gains.
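The idea of jointly learning only the part of each network that all devices share can be illustrated with a small sketch. This is not the paper's algorithm: it assumes, purely for illustration, that shared parameters are combined by plain averaging (as in federated averaging), and the names `average_shared`, `shared_fc`, and `extra_fc` are hypothetical.

```python
from typing import Dict, List

# Assumption: each device's model is a dict mapping layer name -> flat weight list.
Params = Dict[str, List[float]]


def average_shared(devices: List[Params], shared: List[str]) -> None:
    """Average the named shared layers across all devices, in place.

    Layers not listed in `shared` are device-specific and left untouched.
    """
    for name in shared:
        # Group the i-th weight of this layer from every device together.
        columns = zip(*(dev[name] for dev in devices))
        mean = [sum(col) / len(devices) for col in columns]
        for dev in devices:
            dev[name] = list(mean)


# Hypothetical devices: both contain "shared_fc"; only the high-end
# device has the additional layer "extra_fc".
low_end: Params = {"shared_fc": [0.0, 2.0]}
high_end: Params = {"shared_fc": [2.0, 4.0], "extra_fc": [1.0, 1.0, 1.0]}

average_shared([low_end, high_end], shared=["shared_fc"])
print(low_end["shared_fc"])   # [1.0, 3.0]
print(high_end["extra_fc"])   # [1.0, 1.0, 1.0]
```

In this sketch the low-end device trains and exchanges only the shared layer, while the high-end device keeps extra capacity that is never synchronized, so neither device is forced to adopt the other's topology.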
