Distance Sampling-based Paraphraser Leveraging ChatGPT for Text Data Manipulation

Paper and Code

May 01, 2024

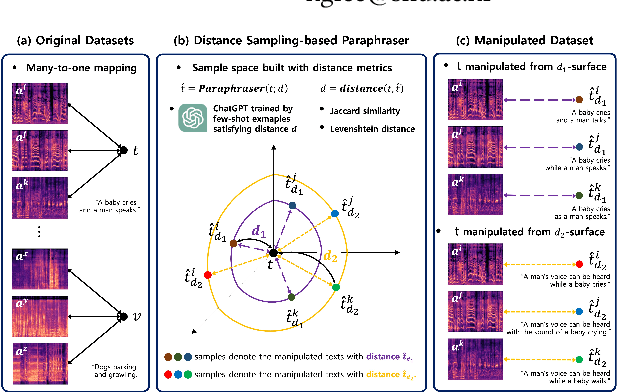

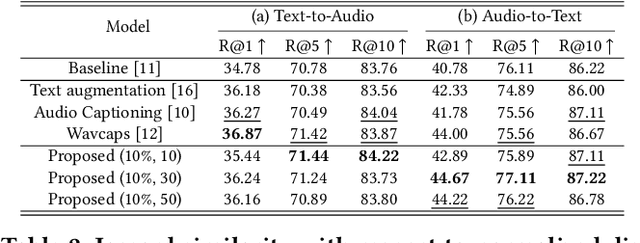

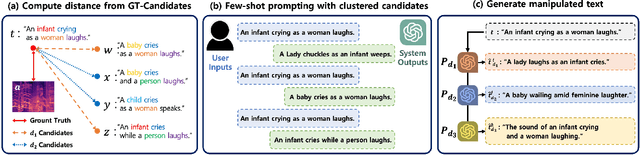

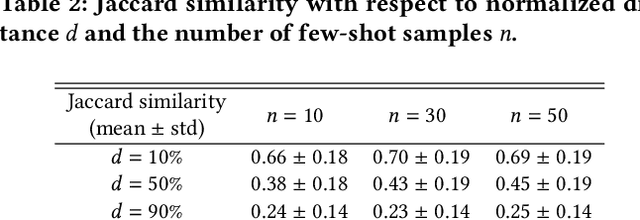

There has been growing interest in audio-language retrieval research, where the objective is to establish the correlation between audio and text modalities. However, most audio-text paired datasets often lack rich expression of the text data compared to the audio samples. One of the significant challenges facing audio-text datasets is the presence of similar or identical captions despite different audio samples. Therefore, under many-to-one mapping conditions, audio-text datasets lead to poor performance of retrieval tasks. In this paper, we propose a novel approach to tackle the data imbalance problem in audio-language retrieval task. To overcome the limitation, we introduce a method that employs a distance sampling-based paraphraser leveraging ChatGPT, utilizing distance function to generate a controllable distribution of manipulated text data. For a set of sentences with the same context, the distance is used to calculate a degree of manipulation for any two sentences, and ChatGPT's few-shot prompting is performed using a text cluster with a similar distance defined by the Jaccard similarity. Therefore, ChatGPT, when applied to few-shot prompting with text clusters, can adjust the diversity of the manipulated text based on the distance. The proposed approach is shown to significantly enhance performance in audio-text retrieval, outperforming conventional text augmentation techniques.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge