Discriminative and Generative Models for Anatomical Shape Analysison Point Clouds with Deep Neural Networks

Paper and Code

Oct 02, 2020

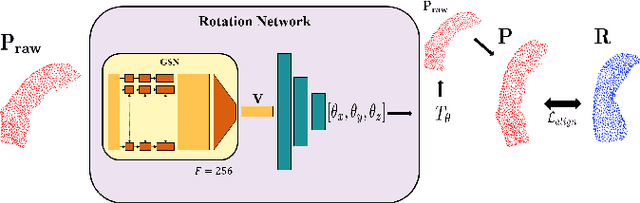

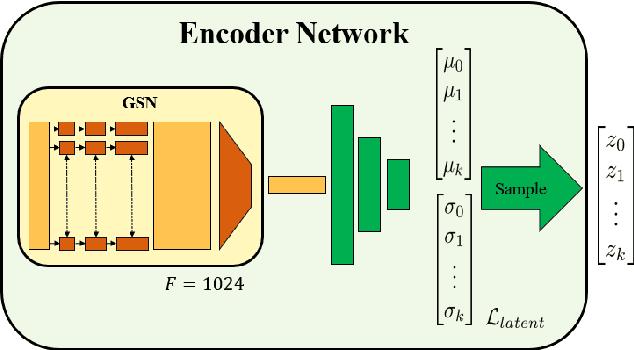

We introduce deep neural networks for the analysis of anatomical shapes that learn a low-dimensional shape representation from the given task, instead of relying on hand-engineered representations. Our framework is modular and consists of several computing blocks that perform fundamental shape processing tasks. The networks operate on unordered point clouds and provide invariance to similarity transformations, avoiding the need to identify point correspondences between shapes. Based on the framework, we assemble a discriminative model for disease classification and age regression, as well as a generative model for the accruate reconstruction of shapes. In particular, we propose a conditional generative model, where the condition vector provides a mechanism to control the generative process. instance, it enables to assess shape variations specific to a particular diagnosis, when passing it as side information. Next to working on single shapes, we introduce an extension for the joint analysis of multiple anatomical structures, where the simultaneous modeling of multiple structures can lead to a more compact encoding and a better understanding of disorders. We demonstrate the advantages of our framework in comprehensive experiments on real and synthetic data. The key insights are that (i) learning a shape representation specific to the given task yields higher performance than alternative shape descriptors, (ii) multi-structure analysis is both more efficient and more accurate than single-structure analysis, and (iii) point clouds generated by our model capture morphological differences associated to Alzheimers disease, to the point that they can be used to train a discriminative model for disease classification. Our framework naturally scales to the analysis of large datasets, giving it the potential to learn characteristic variations in large populations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge