Discrete Event, Continuous Time RNNs

Paper and Code

Oct 11, 2017

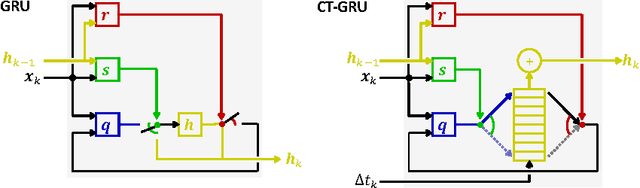

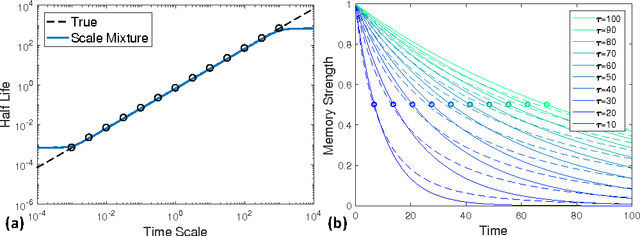

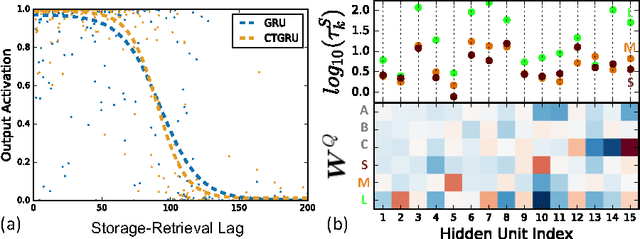

We investigate recurrent neural network architectures for event-sequence processing. Event sequences, characterized by discrete observations stamped with continuous-valued times of occurrence, are challenging due to the potentially wide dynamic range of relevant time scales as well as interactions between time scales. We describe four forms of inductive bias that should benefit architectures for event sequences: temporal locality, position and scale homogeneity, and scale interdependence. We extend the popular gated recurrent unit (GRU) architecture to incorporate these biases via intrinsic temporal dynamics, obtaining a continuous-time GRU. The CT-GRU arises by interpreting the gates of a GRU as selecting a time scale of memory, and the CT-GRU generalizes the GRU by incorporating multiple time scales of memory and performing context-dependent selection of time scales for information storage and retrieval. Event time-stamps drive decay dynamics of the CT-GRU, whereas they serve as generic additional inputs to the GRU. Despite the very different manner in which the two models consider time, their performance on eleven data sets we examined is essentially identical. Our surprising results point both to the robustness of GRU and LSTM architectures for handling continuous time, and to the potency of incorporating continuous dynamics into neural architectures.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge