Discovering Latent Classes for Semi-Supervised Semantic Segmentation


Dec 30, 2019

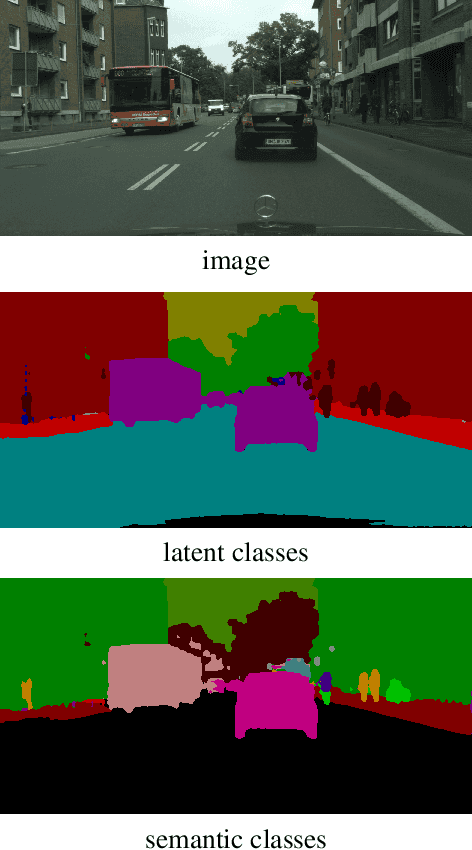

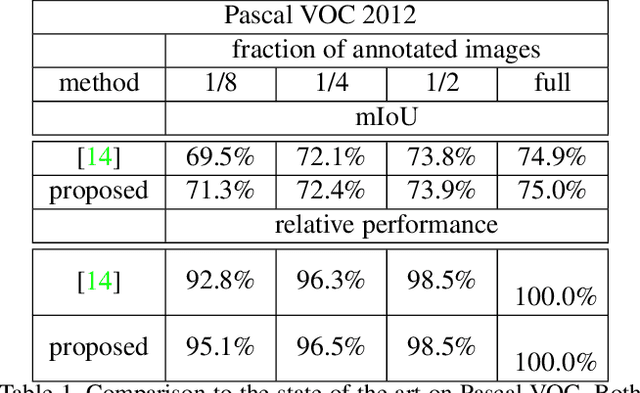

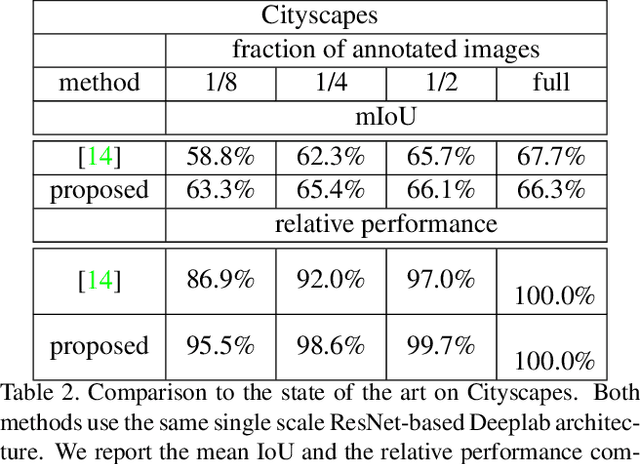

High annotation costs are a major bottleneck for training semantic segmentation systems, so methods that require less annotation effort are of special interest. This paper studies semi-supervised semantic segmentation, where only a small subset of the training images is annotated and the remaining training images have no annotation at all. To leverage the information in the unlabeled images, we propose to learn a second task that is related to semantic segmentation but easier. On labeled images, we learn latent classes that are consistent with the semantic classes, so that the variety of semantic classes assigned to each latent class is as low as possible. On unlabeled images, we predict a probability map for the latent classes and use it as a supervision signal for learning semantic segmentation. The latent and semantic classes are predicted simultaneously by a two-branch network. In our experiments on Pascal VOC and Cityscapes, we show that the latent classes learned this way have an intuitive meaning and that the proposed method achieves state-of-the-art results for semi-supervised semantic segmentation.
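The two ideas in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not state the loss functions, so both choices here are assumptions. "Low variety of semantic classes per latent class" is modelled as a low conditional entropy of the semantic label given the latent class, and "latent probability map as supervision" is modelled as a cross-entropy between the latent prediction and the semantic prediction summed into latent groups. The names `conditional_entropy`, `latent_consistency_loss`, and `class_to_latent` are hypothetical.

```python
import numpy as np

def conditional_entropy(latent_probs, labels, num_semantic):
    """Assumed objective for labeled pixels: H(semantic | latent).

    latent_probs: (N, L) soft latent assignment per pixel, rows sum to 1.
    labels:       (N,) integer semantic label per pixel.
    A low value means each latent class covers few semantic classes.
    """
    onehot = np.eye(num_semantic)[labels]           # (N, C)
    joint = latent_probs.T @ onehot                 # (L, C) soft co-occurrence counts
    joint = joint / joint.sum()                     # joint distribution p(l, c)
    p_latent = joint.sum(axis=1, keepdims=True)     # marginal p(l)
    cond = joint / np.clip(p_latent, 1e-12, None)   # conditional p(c | l)
    return float(-(joint * np.log(np.clip(cond, 1e-12, None))).sum())

def latent_consistency_loss(semantic_probs, latent_probs, class_to_latent):
    """Assumed objective for unlabeled pixels.

    semantic_probs:  (N, C) output of the semantic branch.
    latent_probs:    (N, L) output of the latent branch, used as the target.
    class_to_latent: (C,) hypothetical hard mapping from semantic to latent class.
    """
    num_latent = latent_probs.shape[1]
    grouping = np.eye(num_latent)[class_to_latent]  # (C, L) one-hot mapping
    induced = semantic_probs @ grouping             # (N, L) semantic mass per latent class
    log_induced = np.log(np.clip(induced, 1e-12, None))
    return float(-(latent_probs * log_induced).sum(axis=1).mean())
```

In this sketch, a latent assignment that groups semantic classes cleanly yields a lower conditional entropy than a uniform one, and a semantic prediction that agrees with the latent map yields a lower consistency loss than one that contradicts it.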
