Dimensionality Reduction and Wasserstein Stability for Kernel Regression


Mar 17, 2022

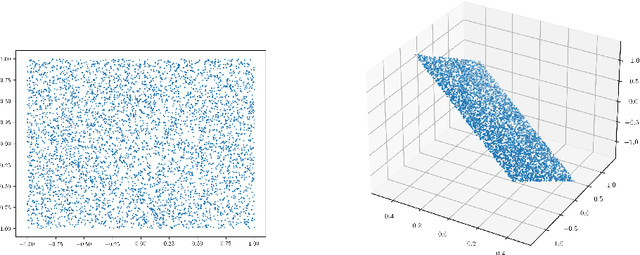

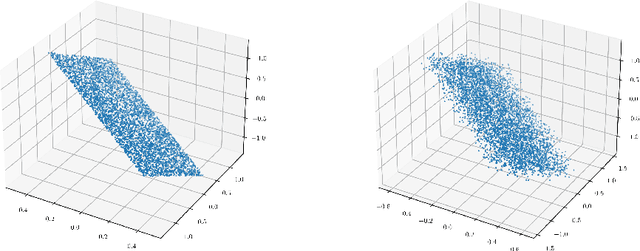

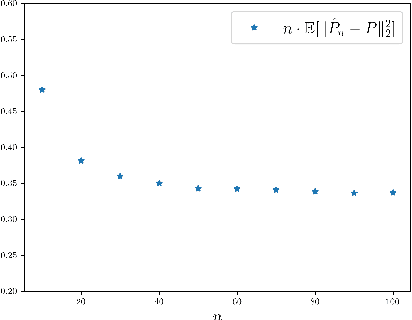

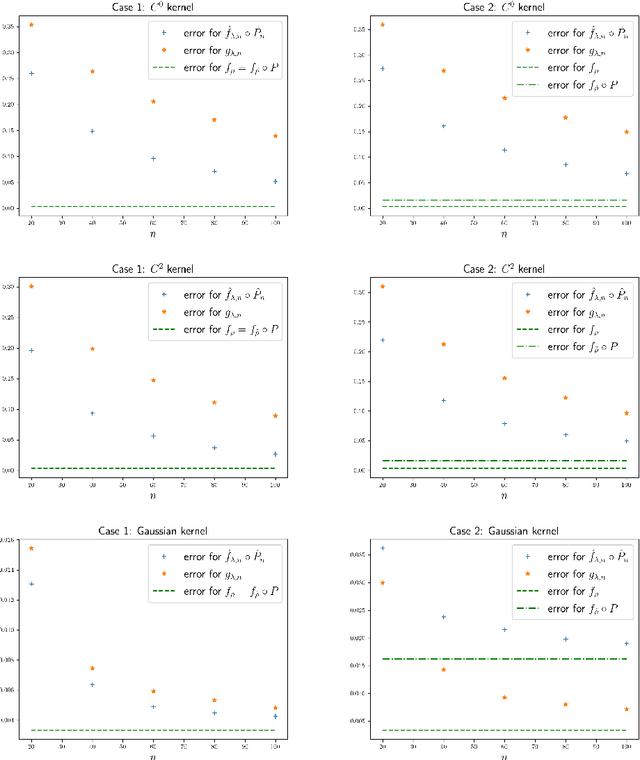

In a high-dimensional regression framework, we study the consequences of the naive two-step procedure in which the dimension of the input variables is first reduced and the reduced input variables are then used to predict the output variable. More specifically, we combine principal component analysis (PCA) with kernel regression. To analyze the resulting regression errors, we derive a novel stability result for kernel regression with respect to the Wasserstein distance. This allows us to bound the errors that occur when perturbed input data are used to fit a kernel function. We combine the stability result with known estimates from the literature on both principal component analysis and kernel regression to obtain convergence rates for the two-step procedure.
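To make the two-step procedure concrete, the following is a minimal sketch and not the authors' implementation. It assumes kernel ridge regression with a Gaussian kernel as the kernel regression step, and all function names, the synthetic data, and the parameters `d`, `bandwidth`, and `lam` are hypothetical choices made only for illustration.

```python
import numpy as np

def pca_fit(X, d):
    """Return the sample mean and the top-d principal directions of X (n x D)."""
    mean = X.mean(axis=0)
    # Rows of Vt are right singular vectors of the centered data,
    # ordered by decreasing singular value.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:d].T  # directions have shape (D, d)

def pca_transform(X, mean, V):
    """Project inputs onto the fitted principal directions."""
    return (X - mean) @ V

def gaussian_kernel(A, B, bandwidth):
    """Gaussian kernel matrix between the rows of A and the rows of B."""
    sq = (A**2).sum(axis=1)[:, None] + (B**2).sum(axis=1)[None, :] - 2 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2 * bandwidth**2))

def krr_fit(Z, y, bandwidth, lam):
    """Kernel ridge regression (assumed estimator): solve (K + n*lam*I) alpha = y."""
    n = len(Z)
    K = gaussian_kernel(Z, Z, bandwidth)
    return np.linalg.solve(K + n * lam * np.eye(n), y)

def krr_predict(Z_train, alpha, Z_new, bandwidth):
    """Evaluate the fitted kernel function at new reduced inputs."""
    return gaussian_kernel(Z_new, Z_train, bandwidth) @ alpha

def two_step_fit_predict(X_train, y_train, X_test, d=2, bandwidth=1.0, lam=1e-3):
    # Step 1: reduce the input dimension with PCA fitted on the training inputs.
    mean, V = pca_fit(X_train, d)
    Z_train = pca_transform(X_train, mean, V)
    Z_test = pca_transform(X_test, mean, V)
    # Step 2: fit the kernel regression on the reduced inputs and predict.
    alpha = krr_fit(Z_train, y_train, bandwidth, lam)
    return krr_predict(Z_train, alpha, Z_test, bandwidth)

def demo(seed=0, n_train=200, n_test=100, D=20, d=2, noise=0.01):
    """Synthetic illustration: inputs lie near a d-dimensional subspace of R^D."""
    rng = np.random.default_rng(seed)
    A, _ = np.linalg.qr(rng.normal(size=(D, d)))  # orthonormal columns, shape (D, d)

    def sample(n):
        z = rng.normal(size=(n, d))
        X = z @ A.T + noise * rng.normal(size=(n, D))
        y = np.sin(z[:, 0]) + 0.5 * z[:, 1] + 0.05 * rng.normal(size=n)
        return X, y

    X_train, y_train = sample(n_train)
    X_test, y_test = sample(n_test)
    pred = two_step_fit_predict(X_train, y_train, X_test, d=d)
    mse = float(np.mean((pred - y_test) ** 2))
    return mse, float(np.var(y_test))

if __name__ == "__main__":
    mse, var = demo()
    print(f"test MSE: {mse:.4f}, variance of test outputs: {var:.4f}")
```

In this sketch the PCA map is estimated from the training inputs only and then reused for the test inputs. The estimated projection generally differs from the population one, so the kernel function is fitted on perturbed reduced inputs, which is the kind of input perturbation the stability result is meant to control.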
