Diffusion Probabilistic Modeling for Video Generation


Mar 31, 2022

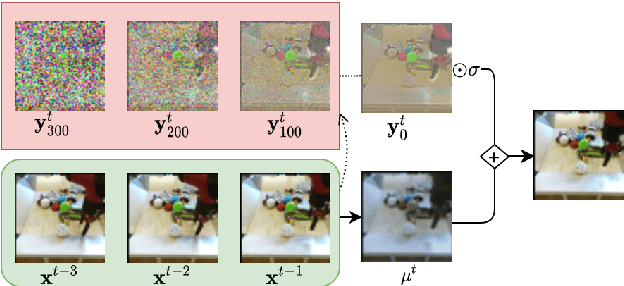

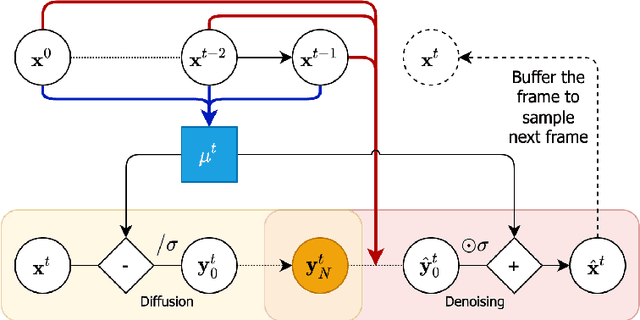

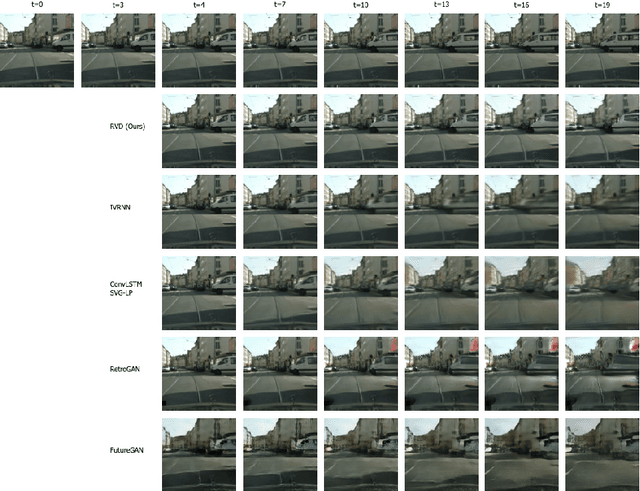

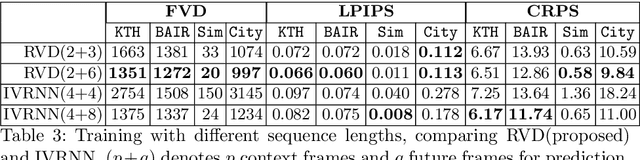

Denoising diffusion probabilistic models are a promising new class of generative models that are competitive with GANs on perceptual metrics. In this paper, we explore their potential for sequentially generating video. Inspired by recent advances in neural video compression, we use denoising diffusion models to stochastically generate a residual to a deterministic next-frame prediction. We compare this approach to two sequential VAE and two GAN baselines on four datasets, testing the generated frames for perceptual quality and for forecasting accuracy against ground-truth frames. We find significant improvements in perceptual quality on all four datasets, and improvements in frame forecasting for complex high-resolution videos.
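The core idea, a deterministic next-frame prediction corrected by a stochastically sampled residual, can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: `predict_next_frame` and `predict_noise` are hypothetical stand-ins for trained networks, the noise schedule `betas` is an arbitrary choice, and the sampler is the standard DDPM ancestral sampling loop, assumed here only for illustration.

```python
import numpy as np

def predict_next_frame(context):
    # Hypothetical deterministic predictor: simply repeats the last frame.
    # A real model would be a trained network conditioned on the context.
    return context[-1]

def predict_noise(x_t, t, context):
    # Hypothetical stand-in for a trained noise-prediction network.
    # Returning zeros keeps the sketch runnable without any training.
    return np.zeros_like(x_t)

def sample_residual(context, shape, betas, rng):
    # Standard DDPM ancestral sampling, started from pure Gaussian noise.
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)
    for t in range(len(betas) - 1, -1, -1):
        eps = predict_noise(x, t, context)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        # No noise is added at the final step (t == 0).
        noise = rng.standard_normal(shape) if t > 0 else np.zeros(shape)
        x = mean + np.sqrt(betas[t]) * noise
    return x

def generate_video(context_frames, num_new_frames, betas, seed=0):
    # Autoregressive rollout: next frame = deterministic prediction + sampled residual.
    rng = np.random.default_rng(seed)
    frames = list(context_frames)
    for _ in range(num_new_frames):
        mu = predict_next_frame(frames)
        residual = sample_residual(frames, mu.shape, betas, rng)
        frames.append(mu + residual)
    return np.stack(frames)

context = np.zeros((2, 8, 8))          # two 8x8 context frames
betas = np.linspace(1e-4, 0.02, 10)    # assumed linear noise schedule
video = generate_video(context, num_new_frames=3, betas=betas)
```

Because the residual is sampled rather than predicted, different seeds yield different continuations of the same context, which is what makes the generation stochastic.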
