Differentially Private Coordinate Descent for Composite Empirical Risk Minimization


Oct 22, 2021

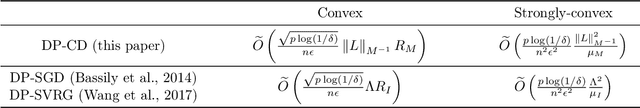

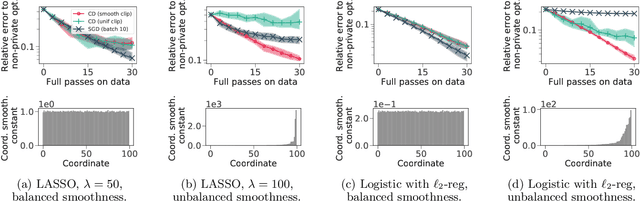

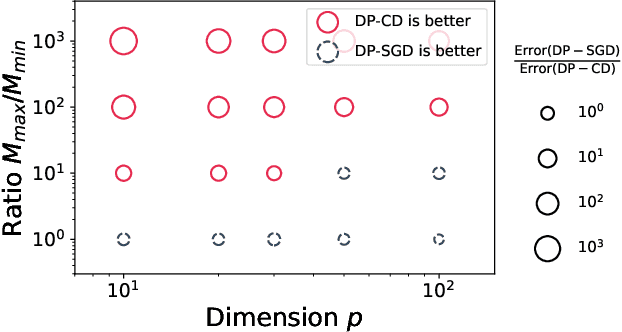

Machine learning models can leak information about the data used to train them. Differentially Private (DP) variants of optimization algorithms like Stochastic Gradient Descent (DP-SGD) have been designed to mitigate this, inducing a trade-off between privacy and utility. In this paper, we propose a new method for composite Differentially Private Empirical Risk Minimization (DP-ERM): Differentially Private proximal Coordinate Descent (DP-CD). We analyze its utility through a novel theoretical analysis of inexact coordinate descent, and highlight some regimes where DP-CD outperforms DP-SGD because it can use larger step sizes. We also prove new lower bounds for composite DP-ERM under coordinate-wise regularity assumptions, which our algorithm nearly matches in some settings. In practical implementations, the coordinate-wise nature of DP-CD updates demands special care in choosing the clipping thresholds used to bound individual contributions to the gradients. A natural parameterization of these thresholds emerges from our theory, limiting the addition of unnecessarily large noise without requiring coordinate-wise hyperparameter tuning or extra computational cost.
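To make the ingredients named above concrete (coordinate-wise updates, clipping of individual contributions, added noise, and a proximal step), here is a minimal sketch of a noisy proximal coordinate descent loop for an L1-regularized least-squares problem. It is an illustration under stated assumptions, not the paper's reference implementation: the function name `dp_cd_lasso`, the parameters `clip` and `noise_multiplier`, and the rule setting each threshold proportional to the square root of the coordinate-wise smoothness constant are choices made for this sketch. The noise scale is not calibrated to any target privacy budget; privacy accounting is out of scope here.

```python
import numpy as np


def soft_threshold(x, t):
    """Proximal operator of t * |x| (scalar soft-thresholding)."""
    return np.sign(x) * max(abs(x) - t, 0.0)


def dp_cd_lasso(X, y, lam, n_iters, clip, noise_multiplier, rng):
    """Noisy proximal coordinate descent sketch for
    (1 / 2n) * ||X w - y||^2 + lam * ||w||_1.

    All parameter names are hypothetical; the noise is NOT calibrated
    to a specific (epsilon, delta) guarantee in this sketch.
    """
    n, p = X.shape
    # Coordinate-wise smoothness constants of the least-squares term.
    M = np.maximum((X ** 2).mean(axis=0), 1e-12)
    # Assumed threshold rule: one global `clip`, split across coordinates
    # in proportion to sqrt(M_j), so no per-coordinate tuning is needed.
    C = clip * np.sqrt(M / M.sum())
    w = np.zeros(p)
    for _ in range(n_iters):
        j = rng.integers(p)                   # pick one coordinate at random
        residual = X @ w - y
        g = X[:, j] * residual                # per-sample partial derivatives
        g = np.clip(g, -C[j], C[j])           # bound each individual contribution
        noise = rng.normal(0.0, noise_multiplier * C[j] / n)
        g_priv = g.mean() + noise             # noisy averaged partial derivative
        step = 1.0 / M[j]                     # coordinate-wise step size
        w[j] = soft_threshold(w[j] - step * g_priv, step * lam)
    return w
```

Each coordinate uses its own step size `1 / M[j]`, which can be much larger than a single global step when the smoothness constants are unbalanced. Because the noise standard deviation scales with `C[j]`, coordinates with small thresholds also receive less noise.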
