Differentiable-Optimization Based Neural Policy for Occlusion-Aware Target Tracking

Paper and Code

Jun 20, 2024

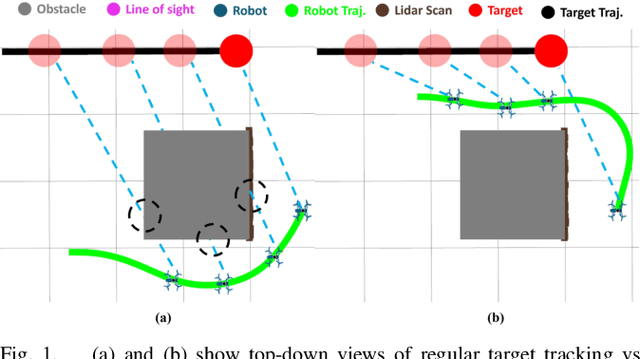

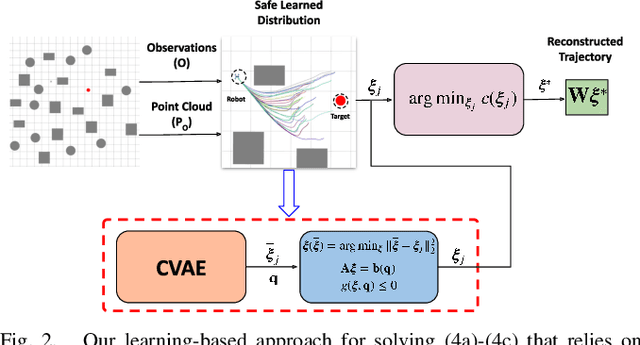

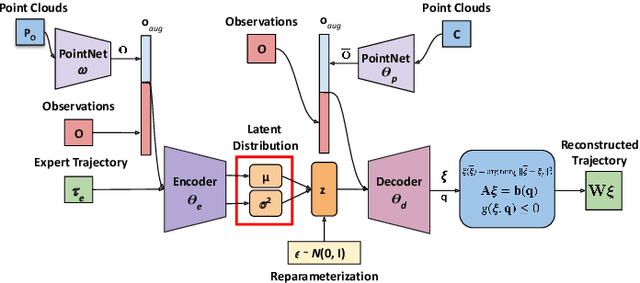

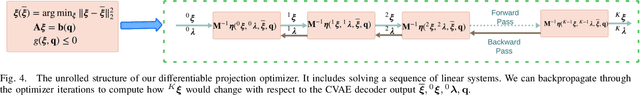

Tracking a target in cluttered and dynamic environments is challenging but forms a core component in applications like aerial cinematography. The obstacles in the environment not only pose collision risk but can also occlude the target from the field-of-view of the robot. Moreover, the target future trajectory may be unknown and only its current state can be estimated. In this paper, we propose a learned probabilistic neural policy for safe, occlusion-free target tracking. The core novelty of our work stems from the structure of our policy network that combines generative modeling based on Conditional Variational Autoencoder (CVAE) with differentiable optimization layers. The role of the CVAE is to provide a base trajectory distribution which is then projected onto a learned feasible set through the optimization layer. Furthermore, both the weights of the CVAE network and the parameters of the differentiable optimization can be learned in an end-to-end fashion through demonstration trajectories. We improve the state-of-the-art (SOTA) in the following respects. We show that our learned policy outperforms existing SOTA in terms of occlusion/collision avoidance capabilities and computation time. Second, we present an extensive ablation showing how different components of our learning pipeline contribute to the overall tracking task. We also demonstrate the real-time performance of our approach on resource-constrained hardware such as NVIDIA Jetson TX2. Finally, our learned policy can also be viewed as a reactive planner for navigation in highly cluttered environments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge