Differentiable Network Adaption with Elastic Search Space

Paper and Code

Mar 30, 2021

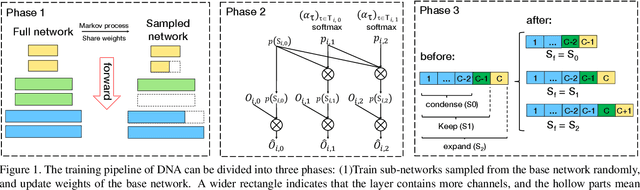

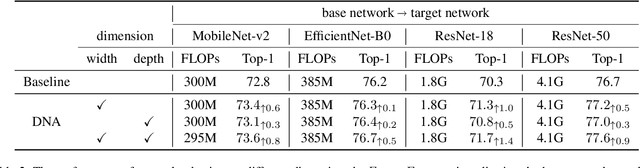

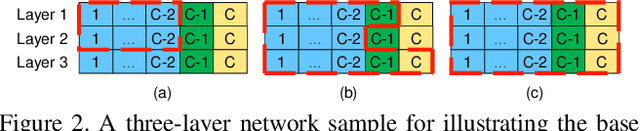

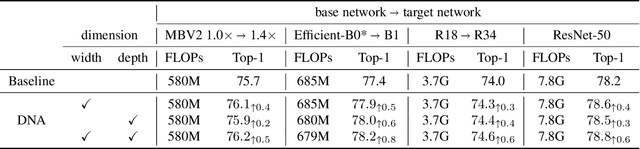

In this paper, we propose a novel network adaption method called Differentiable Network Adaption (DNA), which adapts an existing network to a specific computation budget by adjusting its width and depth in a differentiable manner. Gradient-based optimization lets DNA optimize width and depth automatically, unlike previous heuristic methods that rely heavily on human priors. Moreover, we propose a new elastic search space that can flexibly condense or expand during the optimization process, so that width and depth can be optimized in both directions. With DNA, we optimize the network architecture by condensing and expanding it along both the width and depth dimensions. Extensive experiments on ImageNet demonstrate that DNA can adapt an existing network to meet different target computation requirements with better performance than previous methods. In addition, DNA can further improve the performance of high-accuracy networks obtained by state-of-the-art neural architecture search methods, such as EfficientNet and MobileNet-v3.
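The abstract does not spell out the mechanics, so the following is only a minimal illustrative sketch of the two general ideas it names, not the authors' algorithm. It assumes a softmax relaxation over a few candidate widths for a single layer (so the expected cost is differentiable in the logits) and a hypothetical window-shifting rule standing in for the elastic search space. All names here (`expected_cost`, `shift_window`, `cost_per_channel`, `step`) are invented for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_cost(logits, widths, cost_per_channel):
    """Probability-weighted cost over candidate widths.

    Assumption: cost grows linearly with width. Because the result is a
    smooth function of the logits, it could be added to a training loss
    as a budget penalty and optimized by gradient descent.
    """
    probs = softmax(logits)
    return sum(p * w * cost_per_channel for p, w in zip(probs, widths))


def shift_window(logits, widths, step, min_width=1):
    """Hypothetical elastic rule: slide the candidate window toward the
    edge where the most likely candidate sits.

    If the preferred width is the largest candidate, expand the window;
    if it is the smallest (and there is room), condense it; otherwise
    leave the candidates unchanged.
    """
    best = max(range(len(logits)), key=logits.__getitem__)
    if best == len(widths) - 1:
        return [w + step for w in widths]  # expand toward wider layers
    if best == 0 and widths[0] - step >= min_width:
        return [w - step for w in widths]  # condense toward narrower layers
    return list(widths)
```

For instance, `shift_window([0.0, 0.0, 5.0], [16, 32, 48], step=16)` moves the candidate set to `[32, 48, 64]`, because the optimizer currently prefers the widest option. The same pattern could in principle be applied to depth by treating the number of blocks per stage as the candidate set, though that is likewise an assumption rather than something the abstract specifies.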
