Diagnostic Tool for Out-of-Sample Model Evaluation

Jun 22, 2022

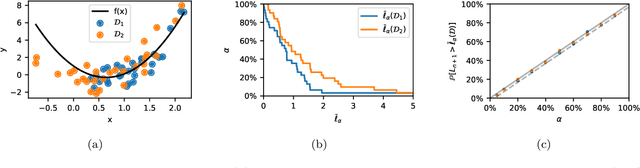

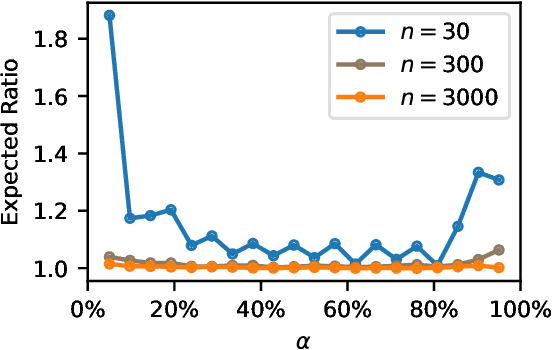

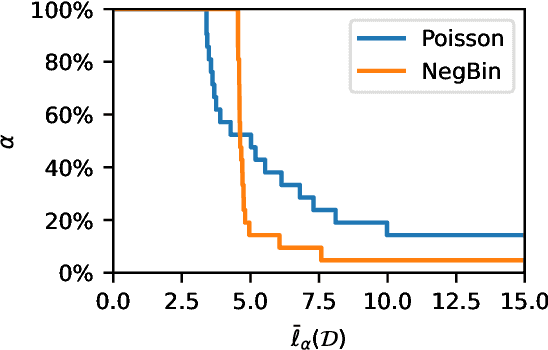

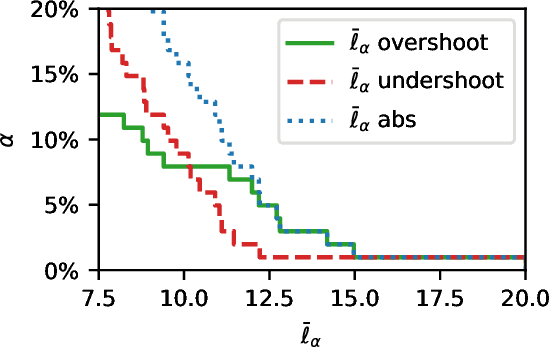

Assessment of model fitness is an important step in many problems. Models are typically fitted to training data by minimizing a loss function, such as the squared error or the negative log-likelihood, and it is natural to desire low losses on future data. This letter considers the use of a test data set to characterize the out-of-sample losses of a model. We propose a simple model diagnostic tool that provides finite-sample guarantees under weak assumptions. The tool is computationally efficient and can be interpreted as an empirical quantile. Several numerical experiments are presented to show how the proposed method quantifies the impact of distribution shifts, aids the analysis of regression, and enables model selection as well as hyper-parameter tuning.
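The abstract does not spell out the construction, so the following is only a minimal sketch of one standard way an empirical quantile of test losses can carry a finite-sample guarantee. It assumes that the test losses and a future loss are exchangeable, in which case the k-th smallest test loss with k = ceil((n + 1)(1 - alpha)) upper-bounds the future loss with probability at least 1 - alpha. The function name `loss_quantile_bound` and the parameter `alpha` are hypothetical and are not taken from the paper, whose exact method may differ.

```python
import math


def loss_quantile_bound(test_losses, alpha):
    """Return an upper bound on a future loss that holds with probability
    at least 1 - alpha, assuming the test losses and the future loss are
    exchangeable (an assumption of this sketch, not a claim about the paper).
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    n = len(test_losses)
    if n == 0:
        raise ValueError("at least one test loss is required")

    # Rank of the order statistic used as the bound.
    k = math.ceil((n + 1) * (1 - alpha))

    # With too few test points, no finite bound reaches the requested level.
    if k > n:
        return math.inf

    # k-th smallest loss (1-indexed), i.e. an empirical quantile.
    return sorted(test_losses)[k - 1]


if __name__ == "__main__":
    # Hypothetical squared-error losses of a fitted model on ten test points.
    losses = [0.12, 0.40, 0.05, 0.33, 0.90, 0.21, 0.08, 0.56, 0.17, 0.29]
    print(loss_quantile_bound(losses, alpha=0.25))
```

Evaluating the bound over a range of `alpha` values traces out a curve of loss levels against coverage, which is one way such a diagnostic could be used to compare candidate models or hyper-parameter settings on the same test set.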