Detecting Model Misspecification in Amortized Bayesian Inference with Neural Networks: An Extended Investigation

Paper and Code

Jun 06, 2024

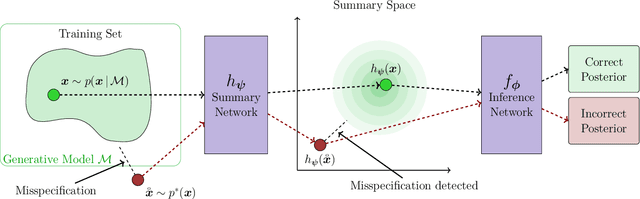

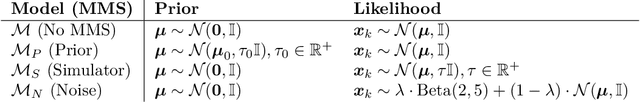

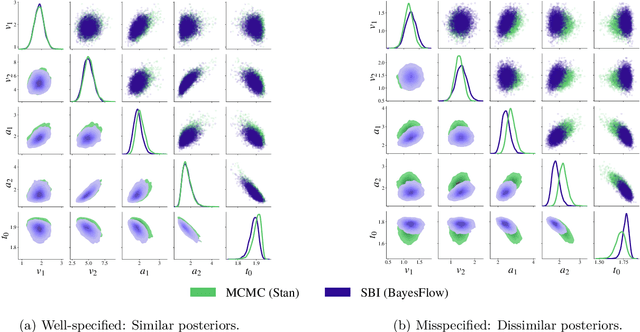

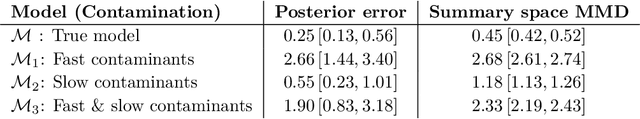

Recent advances in probabilistic deep learning enable efficient amortized Bayesian inference in settings where the likelihood function is only implicitly defined by a simulation program (simulation-based inference; SBI). But how faithful is such inference if the simulation represents reality somewhat inaccurately, that is, if the true system behavior at test time deviates from the one seen during training? We conceptualize the types of such model misspecification arising in SBI and systematically investigate how the performance of neural posterior approximators gradually deteriorates as a consequence, making inference results less and less trustworthy. To notify users about this problem, we propose a new misspecification measure that can be trained in an unsupervised fashion (i.e., without training data from the true distribution) and reliably detects model misspecification at test time. Our experiments clearly demonstrate the utility of our new measure both on toy examples with an analytical ground-truth and on representative scientific tasks in cell biology, cognitive decision making, disease outbreak dynamics, and computer vision. We show how the proposed misspecification test warns users about suspicious outputs, raises an alarm when predictions are not trustworthy, and guides model designers in their search for better simulators.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge