Detecting and Adapting to Novelty in Games

Paper and Code

Jun 04, 2021

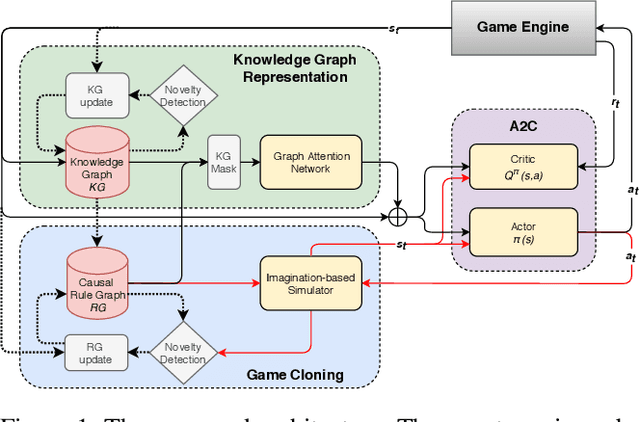

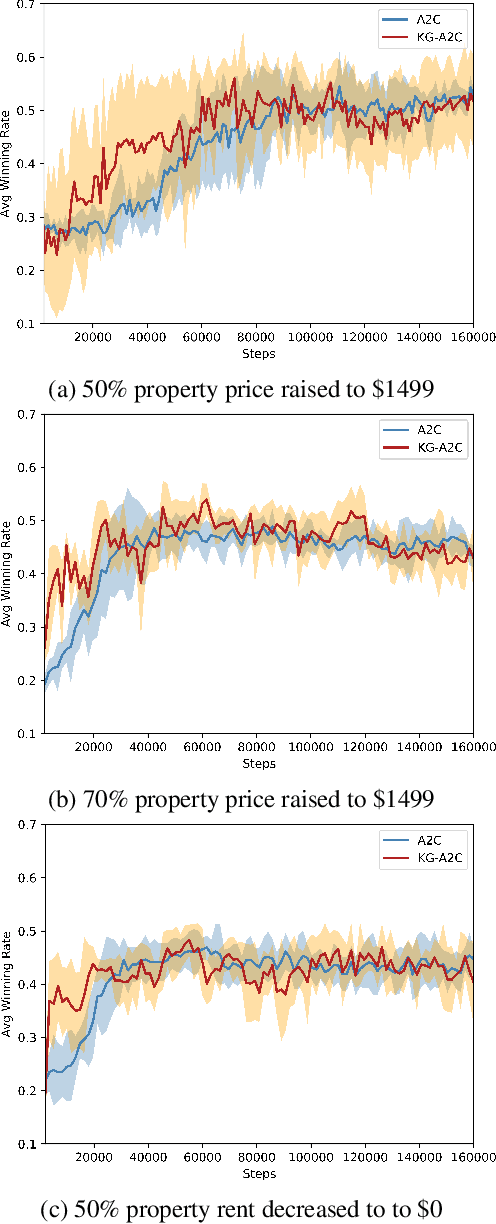

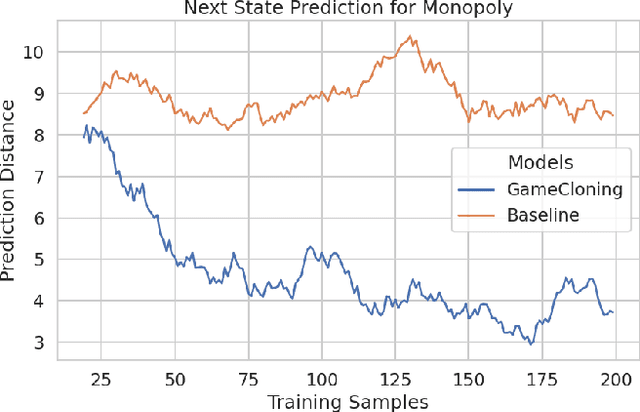

Open-world novelty occurs when the rules of an environment can change abruptly, such as when a game player encounters "house rules". To address open-world novelty, game-playing agents must be able to detect when novelty is injected and adapt quickly to the new rules. We propose a model-based reinforcement learning approach in which the game state and rules are represented as knowledge graphs. This representation allows novelty to be detected as changes in the knowledge graph, assists with the training of deep reinforcement learners, and enables imagination-based re-training, in which the agent uses the knowledge graph to perform look-ahead.
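To make the idea of detecting novelty "as changes in the knowledge graph" concrete, here is a minimal sketch, not the authors' implementation. It assumes the rules can be written as (subject, relation, object) triples and that novelty shows up as a set difference between the graph the agent expects and the graph it observes. The names `Triple`, `diff_graphs`, and `is_novel`, and the example rules, are hypothetical and chosen only for illustration.

```python
from typing import Dict, Iterable, NamedTuple, Set


class Triple(NamedTuple):
    """One edge of a (hypothetical) rule knowledge graph."""
    subject: str
    relation: str
    obj: str


def diff_graphs(expected: Iterable[Triple],
                observed: Iterable[Triple]) -> Dict[str, Set[Triple]]:
    """Return the triples that appeared in and disappeared from the graph."""
    expected_set, observed_set = set(expected), set(observed)
    return {
        "added": observed_set - expected_set,
        "removed": expected_set - observed_set,
    }


def is_novel(diff: Dict[str, Set[Triple]]) -> bool:
    """Flag novelty whenever the observed graph differs from the expected one."""
    return bool(diff["added"] or diff["removed"])


# Made-up board-game rules: a "house rule" changes one payout.
expected = {
    Triple("start_square", "pays", "200"),
    Triple("jail", "costs", "50"),
}
observed = {
    Triple("start_square", "pays", "400"),  # the changed rule
    Triple("jail", "costs", "50"),
}

diff = diff_graphs(expected, observed)
print(is_novel(diff))  # True
```

An exact set difference is the simplest possible detector; a real system would likely need to tolerate noisy or partial observations before declaring that the rules have changed.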

* 10 pages, 5 figures. Accepted to the AAAI21 Workshop on Reinforcement Learning in Games
