DeHIN: A Decentralized Framework for Embedding Large-scale Heterogeneous Information Networks

Paper and Code

Jan 08, 2022

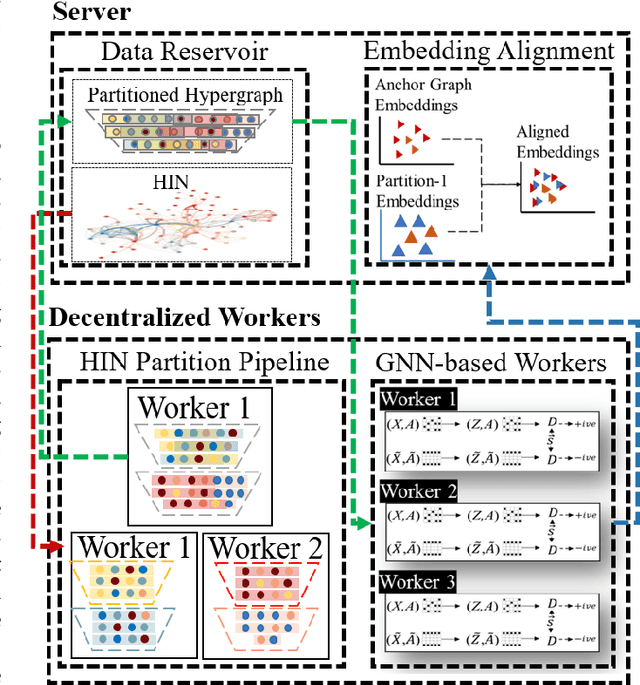

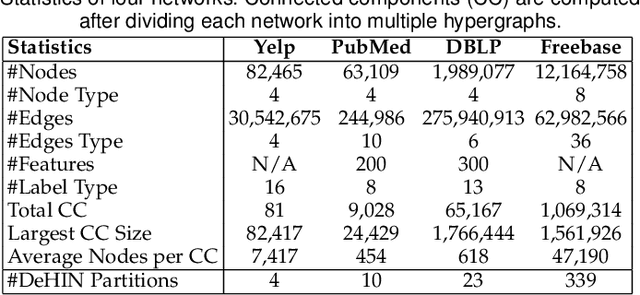

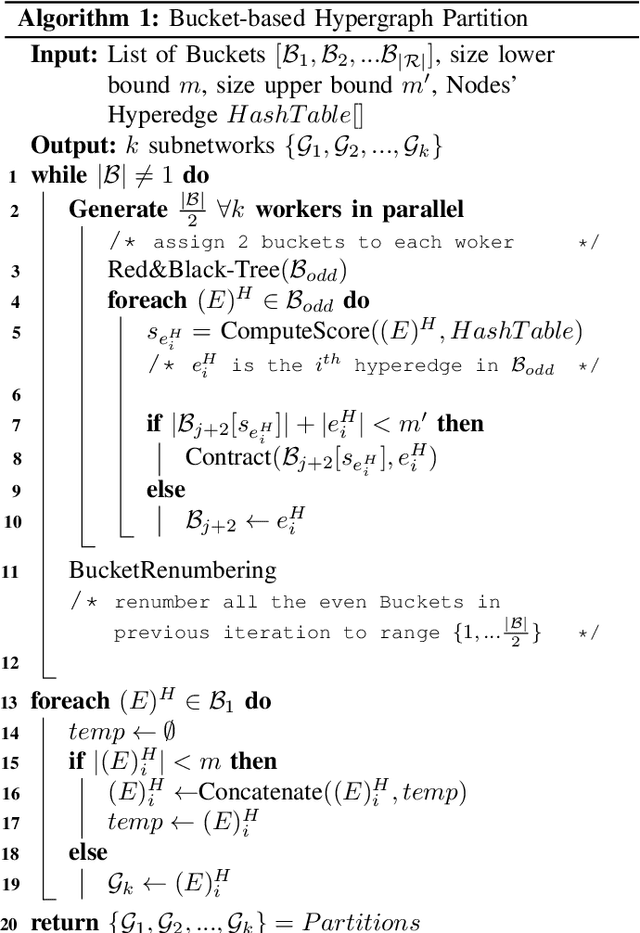

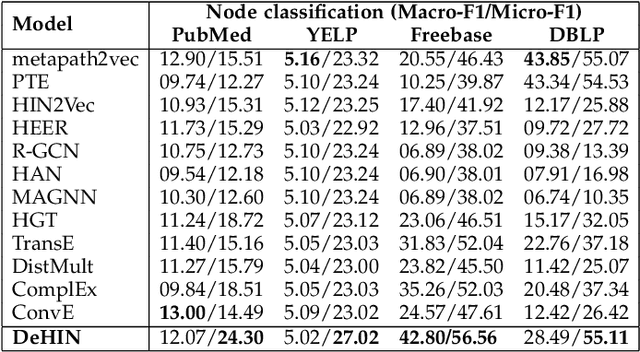

Modeling heterogeneity by extraction and exploitation of high-order information from heterogeneous information networks (HINs) has been attracting immense research attention in recent times. Such heterogeneous network embedding (HNE) methods effectively harness the heterogeneity of small-scale HINs. However, in the real world, the size of HINs grow exponentially with the continuous introduction of new nodes and different types of links, making it a billion-scale network. Learning node embeddings on such HINs creates a performance bottleneck for existing HNE methods that are commonly centralized, i.e., complete data and the model are both on a single machine. To address large-scale HNE tasks with strong efficiency and effectiveness guarantee, we present \textit{Decentralized Embedding Framework for Heterogeneous Information Network} (DeHIN) in this paper. In DeHIN, we generate a distributed parallel pipeline that utilizes hypergraphs in order to infuse parallelization into the HNE task. DeHIN presents a context preserving partition mechanism that innovatively formulates a large HIN as a hypergraph, whose hyperedges connect semantically similar nodes. Our framework then adopts a decentralized strategy to efficiently partition HINs by adopting a tree-like pipeline. Then, each resulting subnetwork is assigned to a distributed worker, which employs the deep information maximization theorem to locally learn node embeddings from the partition it receives. We further devise a novel embedding alignment scheme to precisely project independently learned node embeddings from all subnetworks onto a common vector space, thus allowing for downstream tasks like link prediction and node classification.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge