deepSELF: An Open Source Deep Self End-to-End Learning Framework

Paper and Code

May 11, 2020

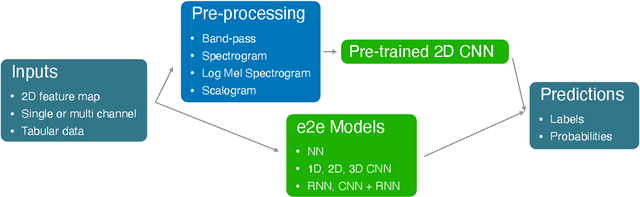

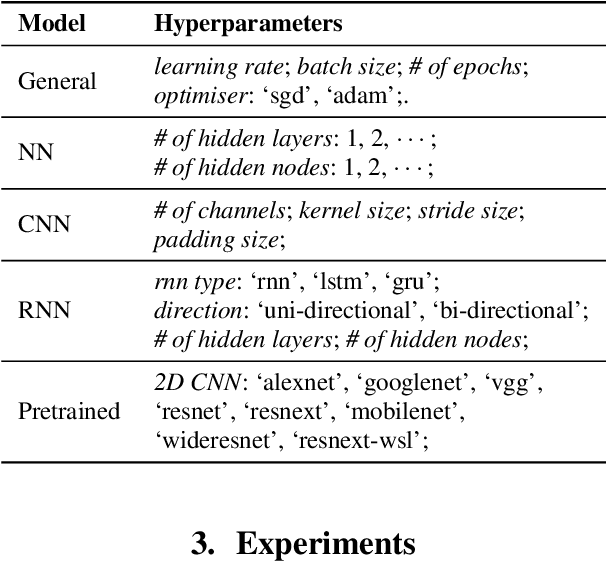

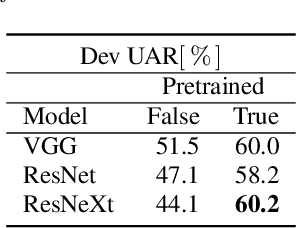

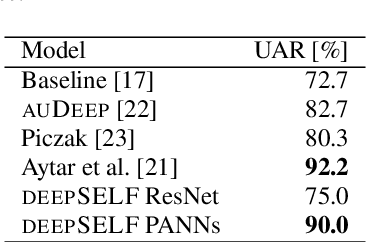

We introduce the deep Self End-to-end Learning Framework (deepSELF), an open-source toolkit for deep self end-to-end learning on multi-modal signals. To the best of our knowledge, it is the first public toolkit to assemble a series of state-of-the-art deep learning technologies. The highlights of deepSELF are as follows. First, it can be used to analyse a variety of multi-modal signals, including images, audio, and single- or multi-channel sensor data. Second, it provides multiple options for pre-processing, e.g., filtering, or spectrum image generation by Fourier or wavelet transformation. Third, a wide range of topologies, including NN, 1D/2D/3D CNN, and RNN/LSTM/GRU, can be customised, and a series of pretrained 2D CNN models, e.g., AlexNet, VGGNet, and ResNet, can be used easily. Last but not least, on top of these features, deepSELF can be used flexibly not only as a single model but also as a fusion of several models.
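As an illustration of the pre-processing step mentioned above, the following is a minimal sketch of spectrum image generation by Fourier transformation, written with NumPy only. It is not deepSELF's own API: the function name `spectrogram` and the parameters `frame_len` and `hop` are hypothetical, and the Hann window and log compression are assumptions chosen for the example.

```python
import numpy as np


def spectrogram(signal, frame_len=256, hop=128):
    """Return a log-magnitude spectrogram of a 1D signal.

    Hypothetical helper for illustration; not part of deepSELF.
    Output shape is (frame_len // 2 + 1, n_frames): frequency x time.
    """
    signal = np.asarray(signal, dtype=float)
    if signal.ndim != 1 or len(signal) < frame_len:
        raise ValueError("signal must be 1D and at least frame_len samples long")

    # Slice the signal into overlapping frames and taper each with a Hann window.
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack(
        [signal[i * hop : i * hop + frame_len] * window for i in range(n_frames)]
    )

    # Real-input FFT of every frame, then log compression of the magnitudes.
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    return np.log1p(magnitude).T


# Example input (assumed for illustration): one second of a 1 kHz tone at 8 kHz.
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)
image = spectrogram(tone)
```

The resulting 2D array can be treated as a single-channel image and passed to a 2D CNN, which is the general idea behind feeding spectrum images of audio or sensor data to image models.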
