Deepfake Detection: A Comprehensive Study from the Reliability Perspective


Nov 20, 2022

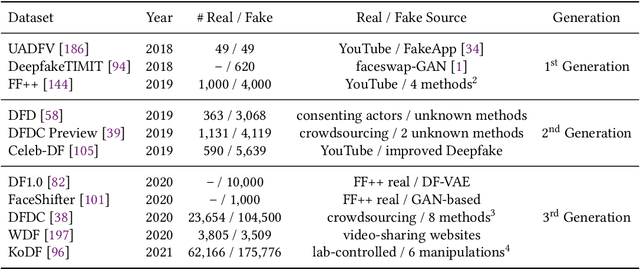

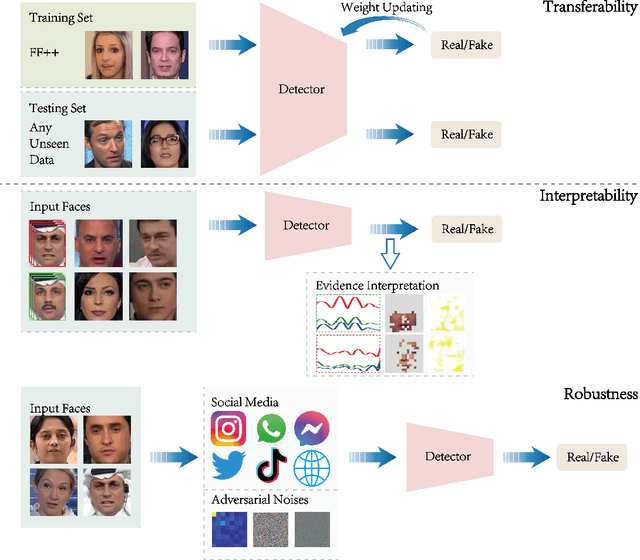

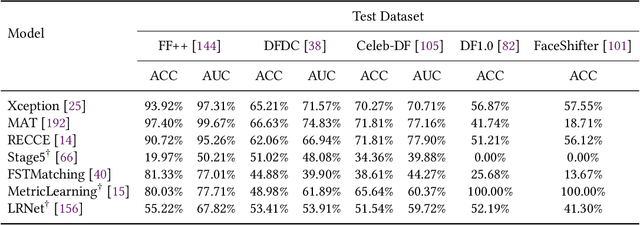

Deepfake synthetic media circulating widely on the internet have had a serious social impact on politicians, celebrities, and the general public. In this paper, we provide a thorough review of existing models, following the development history of Deepfake detection studies, and define the research challenges of Deepfake detection in three aspects: transferability, interpretability, and reliability. While the transferability and interpretability challenges have both been frequently discussed and addressed with quantitative evaluations, the reliability issue has barely been considered, leading to a lack of reliable evidence for real-life use and even for the prosecution of Deepfake-related cases in court. We therefore devise a model reliability study scheme that uses statistical random sampling and publicly available benchmark datasets to qualitatively validate the detection performance of existing models on arbitrary Deepfake candidate suspects. A systematic data pre-processing procedure, rarely described in prior work, is demonstrated along with fair training and testing experiments on the existing detection models. Case studies are further conducted on real-life Deepfake cases involving different groups of victims, with the help of detection models qualified as reliable. The model reliability study provides a workflow for detection models to act as, or assist as, evidence in Deepfake forensic investigations in court once approved by authentication experts or institutions.
