Deep Transfer Reinforcement Learning for Text Summarization


Oct 15, 2018

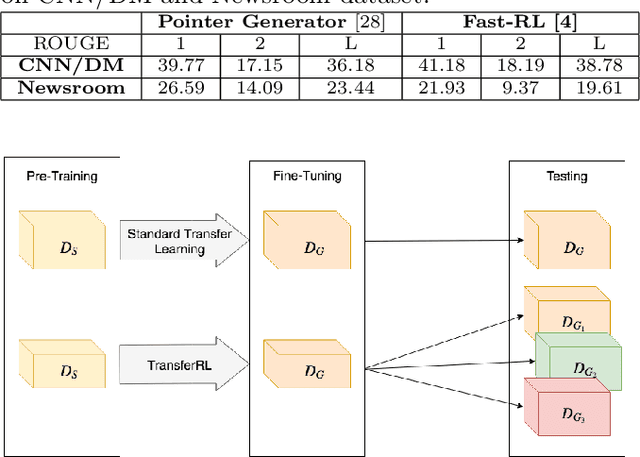

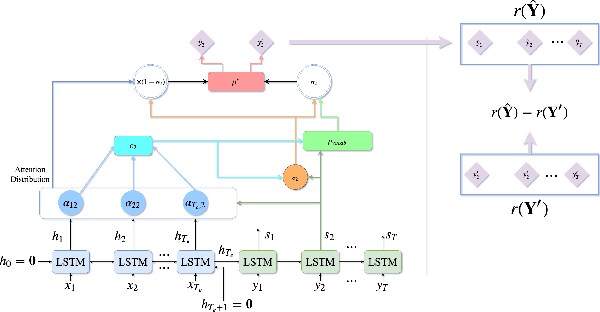

Deep neural networks are data-hungry models and therefore struggle when trained on small datasets. Transfer learning could potentially help in such situations. Although transfer learning has achieved great success in image processing, its effect in the text domain is yet to be well established, largely because of several intricacies that arise in document analysis and understanding. In this paper, we study the problem of transfer learning for text summarization and discuss why the existing state-of-the-art models for this problem fail to generalize well to other (unseen) datasets. We propose a reinforcement learning framework based on a self-critic policy gradient method that addresses this problem and achieves good generalization and state-of-the-art results on a variety of datasets. Through an extensive set of experiments, we also show that the proposed framework can fine-tune the text summarization model with only a few training samples. To the best of our knowledge, this is the first work that studies transfer learning in text summarization and provides a generic solution that works well on unseen data.
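The abstract does not spell out the training objective, so the following is only a minimal sketch of the commonly used self-critical policy gradient loss, not the authors' implementation. It assumes that a summary sampled from the model is scored against a greedily decoded baseline summary, and that the reward is some summary-quality metric such as ROUGE. The function name `self_critical_loss` and its parameters are hypothetical.

```python
import math


def self_critical_loss(sample_log_probs, sample_reward, greedy_reward):
    """Self-critical policy gradient loss for one sampled summary (sketch).

    sample_log_probs: log-probabilities the model assigned to each token
        of the sampled summary (assumed input).
    sample_reward: reward of the sampled summary, e.g. a ROUGE score
        (assumed choice of metric).
    greedy_reward: reward of the greedily decoded summary, which serves
        as the baseline.
    """
    # The advantage is positive when sampling beat the greedy baseline.
    advantage = sample_reward - greedy_reward
    # Minimizing this loss raises the likelihood of samples that beat
    # the baseline and lowers the likelihood of samples that did not.
    return -advantage * sum(sample_log_probs)


# Hypothetical numbers for illustration only.
log_probs = [math.log(0.5), math.log(0.25)]
loss_better = self_critical_loss(log_probs, sample_reward=0.6, greedy_reward=0.4)
loss_worse = self_critical_loss(log_probs, sample_reward=0.4, greedy_reward=0.6)
```

Using the model's own greedy output as the baseline means no separate value network has to be trained, which is the usual motivation for this family of methods.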
