Deep reinforcement learning of event-triggered communication and control for multi-agent cooperative transport

Mar 29, 2021

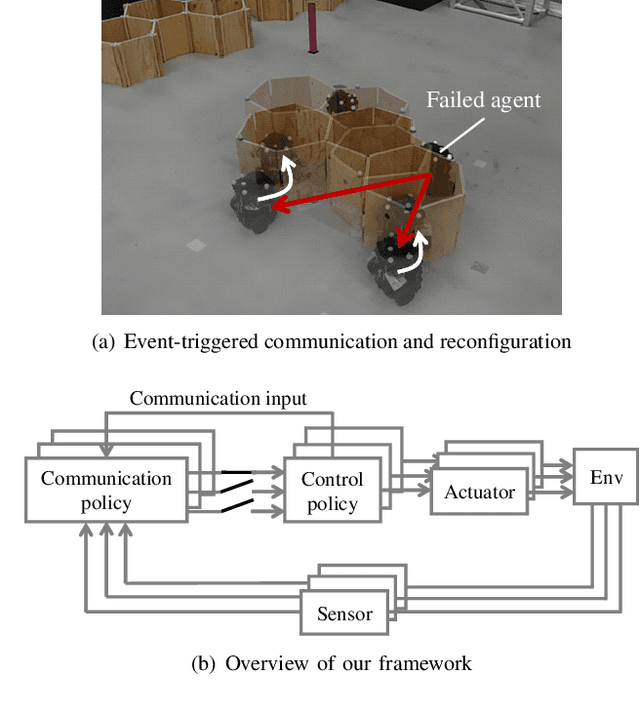

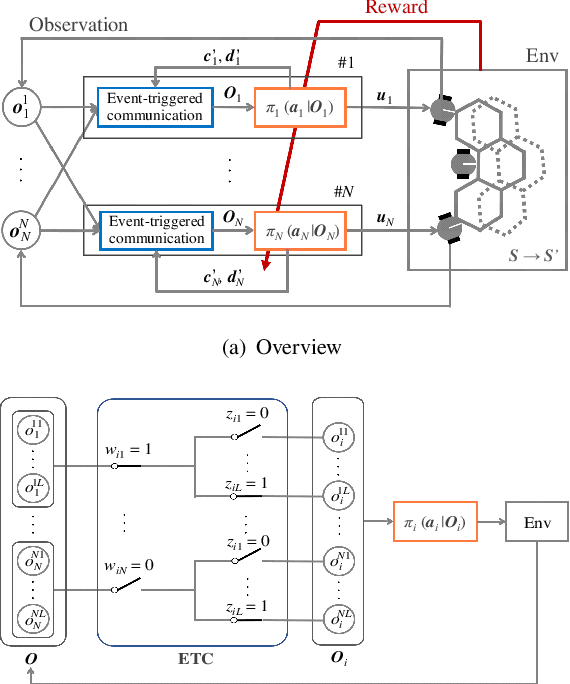

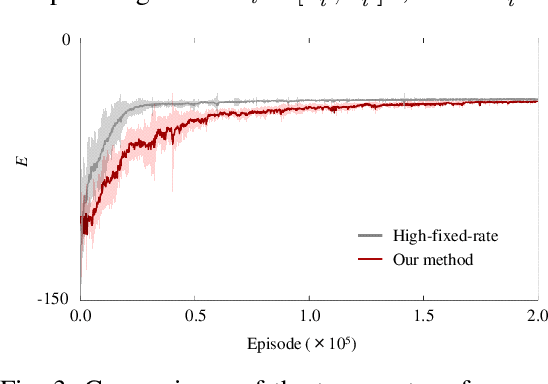

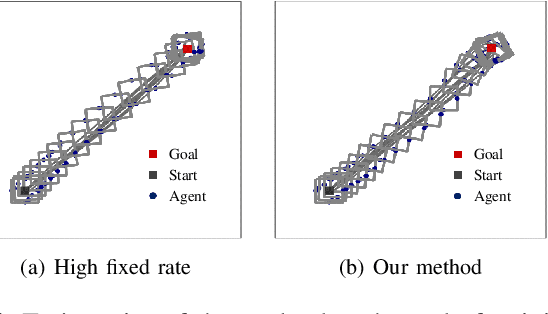

In this paper, we explore a multi-agent reinforcement learning approach to designing communication and control strategies for multi-agent cooperative transport. Typical end-to-end deep neural network policies may be insufficient for jointly handling communication and control: they cannot decide when to communicate and work only with fixed-rate communication. Our framework therefore exploits an event-triggered architecture, namely a feedback controller that computes the communication input and a triggering mechanism that determines when that input has to be updated again. Such event-triggered control policies are optimized efficiently using multi-agent deep deterministic policy gradient (MADDPG). Numerical simulations confirm that our approach can balance transport performance against communication savings.
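
As a rough illustration of the event-triggered idea described above, the following Python sketch holds the last transmitted message and replaces it only when a trigger signal exceeds a threshold. It is a minimal sketch under stated assumptions, not the paper's implementation: the names `EventTriggeredChannel` and `run_episode`, the scalar message, and the zero threshold are all hypothetical, and in a learned setting both the candidate message and the trigger signal would presumably come from a policy network rather than from fixed lists.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class EventTriggeredChannel:
    """Hypothetical channel that keeps the last transmitted message (zero-order hold)."""

    held_message: float = 0.0
    transmissions: int = 0

    def step(self, candidate: float, trigger: float, threshold: float = 0.0) -> float:
        # Assumption: a transmission happens only when the trigger signal
        # exceeds the threshold; otherwise the receiver keeps the old message.
        if trigger > threshold:
            self.held_message = candidate
            self.transmissions += 1
        return self.held_message


def run_episode(
    candidates: Sequence[float],
    triggers: Sequence[float],
    threshold: float = 0.0,
) -> Tuple[List[float], int]:
    """Feed candidate messages and trigger signals through one channel.

    Returns the messages seen by the receiver and the number of transmissions.
    """
    channel = EventTriggeredChannel()
    received = [channel.step(c, g, threshold) for c, g in zip(candidates, triggers)]
    return received, channel.transmissions


if __name__ == "__main__":
    received, count = run_episode([1.0, 2.0, 3.0, 4.0], [1.0, -1.0, -0.5, 0.2])
    print(received, count)
```

The transmission count gives a simple measure of communication cost, which a reward function could trade off against task performance. A fixed-rate scheme corresponds to the special case where the trigger fires at every step.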
