Deep Reinforcement Learning for Optimal Power Flow with Renewables Using Spatial-Temporal Graph Information

Dec 22, 2021

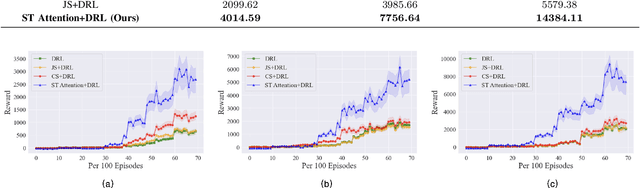

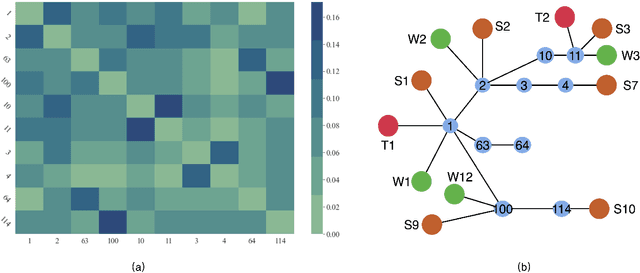

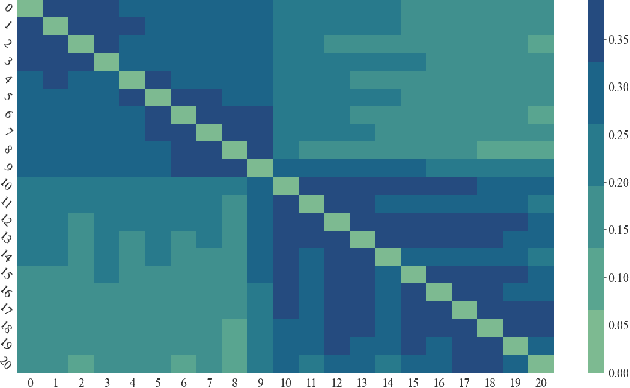

Renewable energy resources (RERs) have been increasingly integrated into modern power systems, especially in large-scale distribution networks (DNs). In this paper, we propose a deep reinforcement learning (DRL)-based approach to dynamically search for the optimal operating point, i.e., to solve the optimal power flow (OPF) problem, in DNs with a high uptake of RERs. Considering the uncertainties and voltage fluctuation issues caused by RERs, we formulate OPF as a multi-objective optimization (MOO) problem. To solve the MOO problem, we develop a novel DRL algorithm that leverages the graphical information of the distribution network. Specifically, we employ deep deterministic policy gradient (DDPG), a state-of-the-art DRL algorithm, to learn an optimal strategy for OPF. Power flow reallocation in the DN is a sequential process in which nodes are self-correlated and interrelated in both the temporal and spatial dimensions. To make full use of the DNs' graphical information, we therefore develop a multi-grained attention-based spatial-temporal graph convolution network (MG-ASTGCN) that extracts spatial-temporal graph information and passes it to the subsequent DDPG stage. We validate our proposed DRL-based approach on modified IEEE 33-, 69-, and 118-bus radial distribution systems (RDSs) and show that it outperforms other benchmark algorithms. Our experimental results also reveal that MG-ASTGCN can significantly accelerate the DDPG training process and improve DDPG's capability in reallocating power flow for OPF. The proposed DRL-based approach also promotes DNs' stability in the presence of node faults, especially for large-scale DNs.
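To make the idea of spatial-temporal graph feature extraction more concrete, the following is a minimal NumPy sketch, not the paper's MG-ASTGCN. It assumes a toy 4-bus radial feeder, a single graph-convolution layer with a symmetrically normalized adjacency matrix, and a simple softmax attention over time steps. The function names, the feeder topology, the feature dimensions, and the random weights are all hypothetical and chosen only for illustration; in a DRL setting, the resulting per-node embedding could serve as the state passed to an actor-critic learner such as DDPG.

```python
import numpy as np


def normalized_adjacency(edges, n):
    """Symmetrically normalized adjacency with self-loops: D^-1/2 (A + I) D^-1/2."""
    A = np.eye(n)  # self-loops so each bus keeps its own features
    for i, j in edges:
        A[i, j] = A[j, i] = 1.0  # undirected line between bus i and bus j
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    return A * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def spatial_temporal_embed(X, A_hat, W, q):
    """Graph convolution per time step, then attention pooling over time.

    X:     (T, N, F) node features over T time steps (hypothetical inputs)
    A_hat: (N, N)    normalized adjacency
    W:     (F, H)    graph-convolution weights (illustrative, untrained)
    q:     (H,)      attention query vector (illustrative, untrained)
    Returns the (N, H) node embedding and the (T,) attention weights.
    """
    # Spatial step: mix each bus with its neighbours, then apply ReLU.
    H = np.maximum(A_hat @ X @ W, 0.0)  # shape (T, N, H)
    # Temporal step: score each time step by its mean node representation.
    scores = H.mean(axis=1) @ q  # shape (T,)
    scores = scores - scores.max()  # shift for numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()
    # Attention-weighted sum over the time axis.
    embedding = np.tensordot(weights, H, axes=1)  # shape (N, H)
    return embedding, weights


# Toy usage: 4-bus radial feeder, 6 time steps, 2 features per bus.
rng = np.random.default_rng(0)
edges = [(0, 1), (1, 2), (1, 3)]
A_hat = normalized_adjacency(edges, n=4)
X = rng.normal(size=(6, 4, 2))
W = rng.normal(size=(2, 3))
q = rng.normal(size=3)
embedding, weights = spatial_temporal_embed(X, A_hat, W, q)
```

The sketch keeps the spatial and temporal steps separate so that each can be inspected on its own; a trained model would learn `W` and `q` rather than sample them at random.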
