Deep Reinforcement Learning for Decentralized Multi-Robot Exploration with Macro Actions


Oct 05, 2021

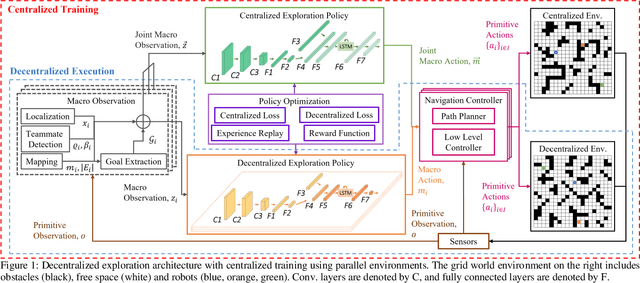

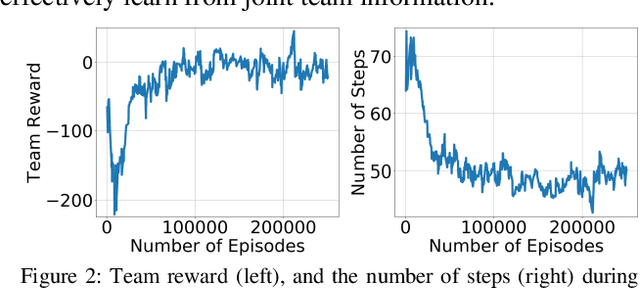

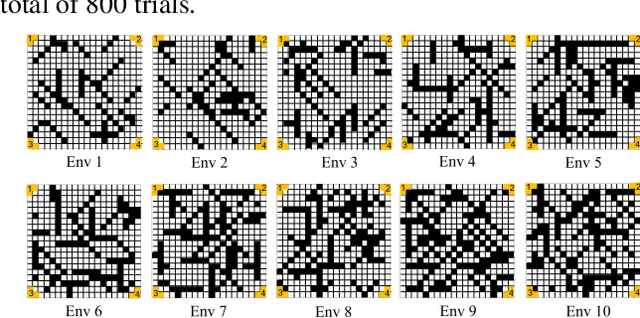

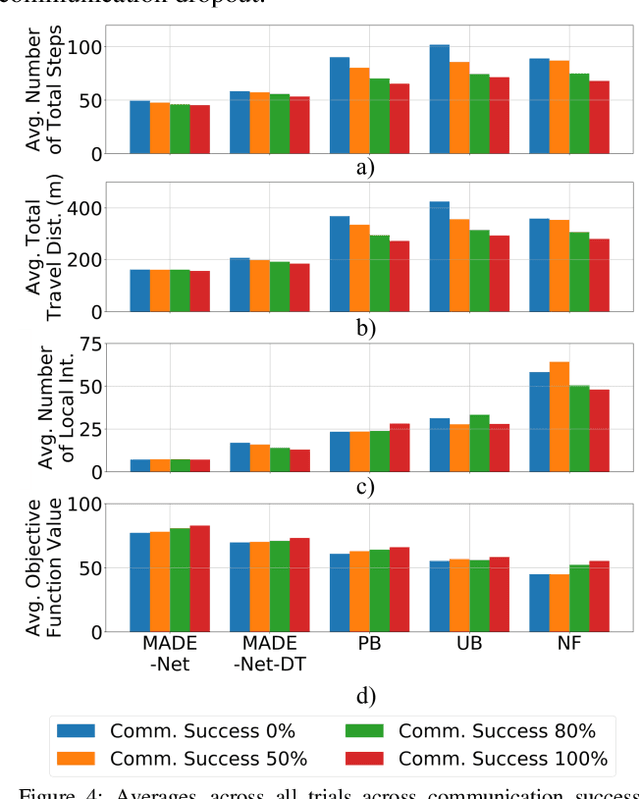

Cooperative multi-robot teams must explore cluttered, unstructured environments together while coping with communication challenges. In particular, during communication dropout, robots can no longer exchange the local information needed to maintain team coordination, so each robot must account for the high-level intentions of its teammates when selecting actions. In this paper, we present the first Macro Action Decentralized Exploration Network (MADE-Net), which uses multi-agent deep reinforcement learning to address communication dropouts during multi-robot exploration in unseen, unstructured, and cluttered environments. We conducted simulated robot team exploration experiments and compared MADE-Net against classical and deep reinforcement learning methods. MADE-Net outperformed all benchmark methods in computation time, total travel distance, number of local interactions between robots, and exploration rate across various degrees of communication dropout, highlighting the effectiveness and robustness of our method.
