Deep MAGSAC++


Nov 28, 2021

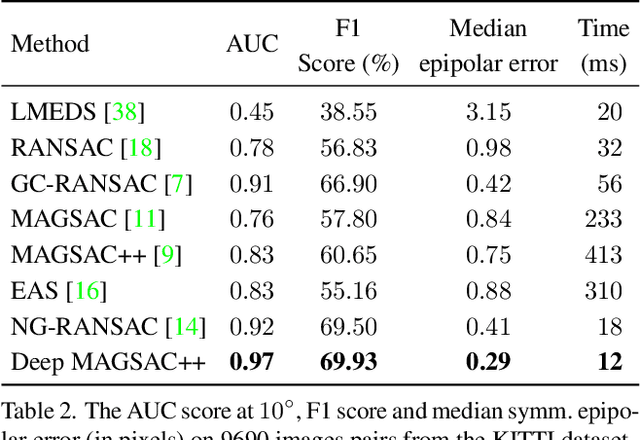

We propose Deep MAGSAC++, which combines the advantages of traditional and deep robust estimators. We introduce a novel loss function that exploits the orientation and scale from partially affine covariant features, e.g., SIFT, in a geometrically justifiable manner. The new loss helps in learning higher-order information about the underlying scene geometry. Moreover, we propose a new sampler for RANSAC that always selects the sample with the highest probability of consisting only of inliers. After every unsuccessful iteration, the probabilities are updated in a principled way via a Bayesian approach. The prediction of the deep network is used as a prior inside the sampler. Benefiting from the new loss, the proposed sampler, and a number of technical advancements, Deep MAGSAC++ is superior to the state of the art in terms of both accuracy and run-time on thousands of image pairs from publicly available datasets for essential and fundamental matrix estimation.
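The sampler idea described above can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes each point's inlier probability is modelled with a Beta distribution, that the network prediction sets the prior mean, and that an unsuccessful iteration counts as one "outlier" observation for every point in the failed sample. The function names and the `strength` parameter are hypothetical.

```python
import numpy as np


def init_beta_prior(prior_probs, strength=2.0):
    """Turn predicted inlier probabilities into Beta(a, b) parameters.

    `strength` (hypothetical) is the pseudo-count weight given to the prior.
    """
    p = np.clip(np.asarray(prior_probs, dtype=float), 1e-6, 1.0 - 1e-6)
    return p * strength, (1.0 - p) * strength


def select_sample(a, b, m):
    """Pick the m points with the highest posterior mean a / (a + b)."""
    posterior_mean = a / (a + b)
    order = np.argsort(-posterior_mean, kind="stable")
    return order[:m]


def update_after_failure(b, sample):
    """Assumed update rule: add one 'outlier' pseudo-count to each sampled point."""
    b_new = b.copy()
    b_new[sample] += 1.0
    return b_new


# Example: four correspondences with hypothetical network-predicted priors.
a, b = init_beta_prior([0.9, 0.8, 0.7, 0.2])
first = select_sample(a, b, m=2)      # the two most likely inliers
b = update_after_failure(b, first)    # the model from `first` was rejected
second = select_sample(a, b, m=2)     # lower-ranked points now get a turn
```

Because the selection is deterministic given the current probabilities, the update after each failure is what drives the sampler to explore other points; a larger `strength` makes the network prior harder to override.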
