Deep Geometric Moment

Paper and Code

May 24, 2022

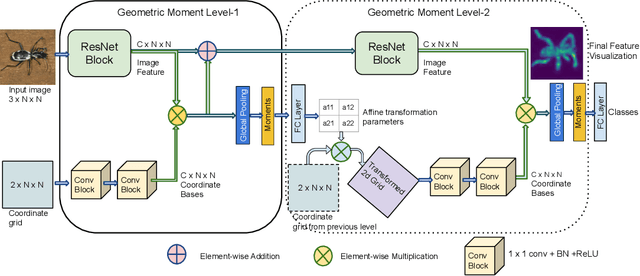

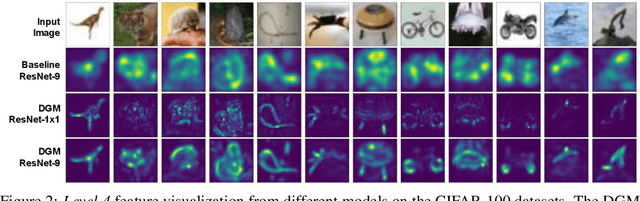

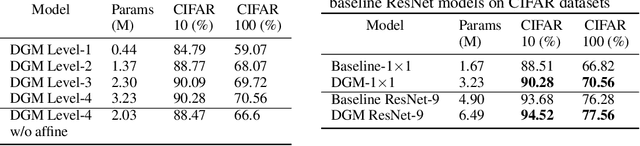

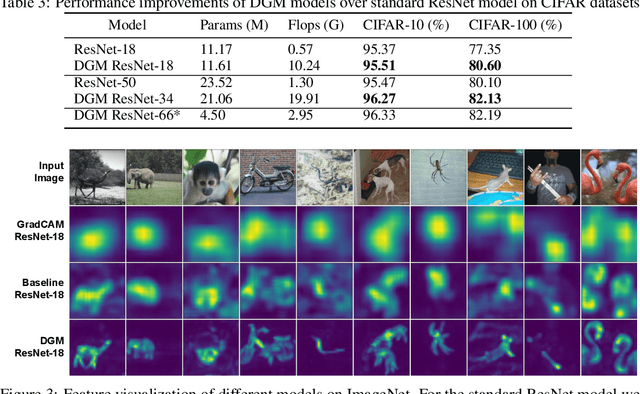

Deep networks for image classification often rely more on texture information than object shape. While efforts have been made to make deep-models shape-aware, it is often difficult to make such models simple, interpretable, or rooted in known mathematical definitions of shape. This paper presents a deep-learning model inspired by geometric moments, a classically well understood approach to measure shape-related properties. The proposed method consists of a trainable network for generating coordinate bases and affine parameters for making the features geometrically invariant, yet in a task-specific manner. The proposed model improves the final feature's interpretation. We demonstrate the effectiveness of our method on standard image classification datasets. The proposed model achieves higher classification performance as compared to the baseline and standard ResNet models while substantially improving interpretability.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge