Decision Transformer for Wireless Communications: A New Paradigm of Resource Management


Apr 08, 2024

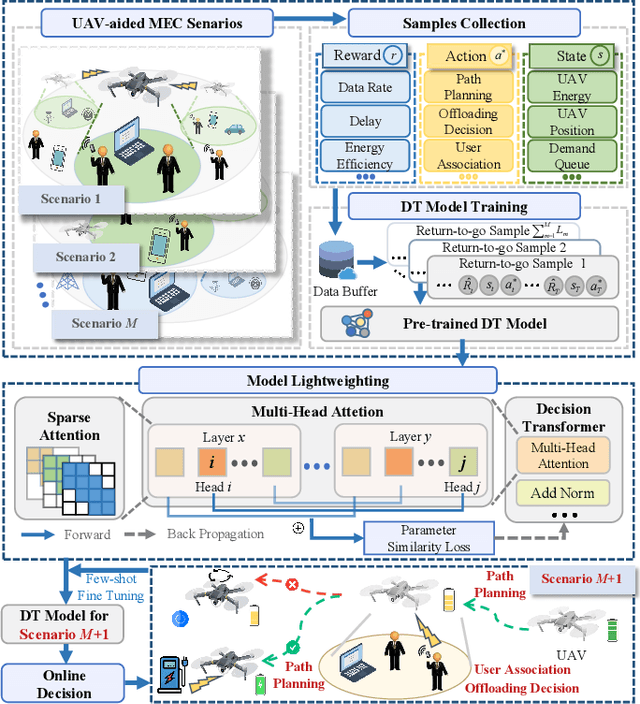

As the next generation of mobile systems evolves, artificial intelligence (AI) is expected to integrate deeply with wireless communications for resource management in variable environments. In particular, deep reinforcement learning (DRL) is an important tool for addressing stochastic optimization problems in resource allocation. However, DRL must restart training from scratch whenever the state and action spaces change, which results in low sample efficiency and poor generalization. Moreover, each DRL training process may take a large number of epochs to converge, which is unacceptable in time-sensitive scenarios. In this paper, we adopt an alternative AI technology, namely the Decision Transformer (DT), and propose a DT-based adaptive decision architecture for wireless resource management. The architecture constructs pre-trained models in the cloud and then fine-tunes personalized models at the edge. By leveraging DT models learned over extensive datasets, the proposed architecture is expected to converge in far fewer training epochs and to achieve higher performance than DRL in a new context, e.g., similar tasks with different state and action spaces. We then design DT frameworks for two typical communication scenarios: intelligent reflecting surface-aided communications and unmanned aerial vehicle-aided edge computing. Simulations demonstrate that the proposed DT frameworks converge 3 to 6 times faster and achieve better performance than the classic DRL method, proximal policy optimization.
