Decentralized Coverage Path Planning with Reinforcement Learning and Dual Guidance


Oct 14, 2022

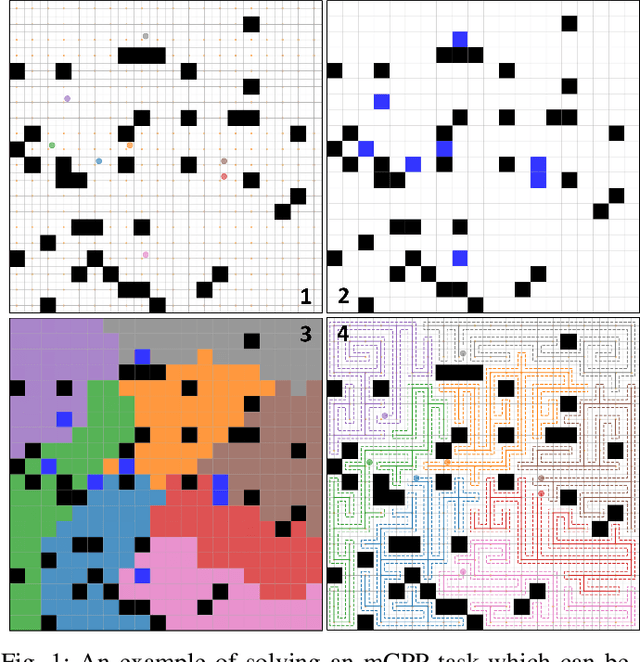

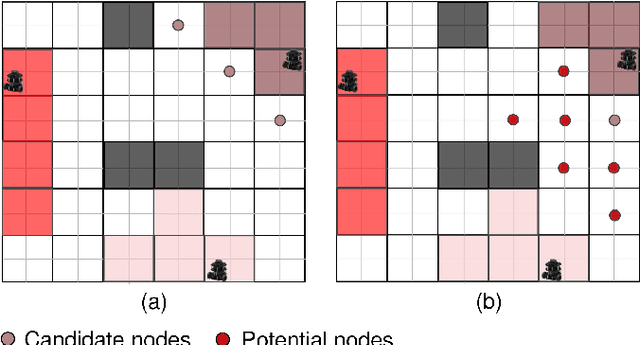

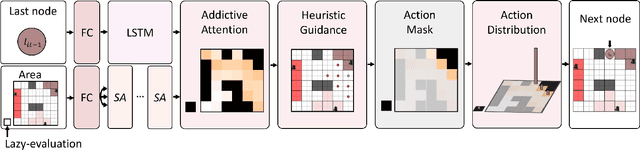

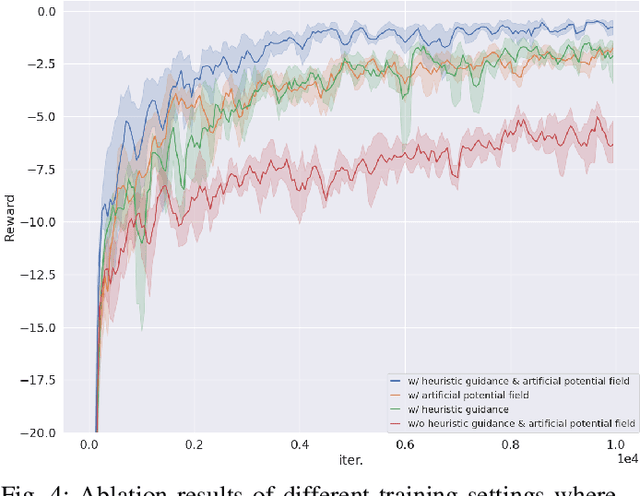

Planning coverage paths for multiple robots in a decentralized way makes coverage tasks more robust to unexpected robot malfunctions. For each robot to work efficiently in a distributed manner, it needs a comprehensive understanding of both the complicated environment and the intent of its cooperating agents. Unfortunately, existing works commonly consider only some of these factors, resulting in imbalanced subareas or unnecessary overlaps. To tackle this issue, we introduce a decentralized reinforcement learning framework with dual guidance that trains each agent to solve the decentralized multi-robot coverage path planning problem directly from the environment states. Because distributed robots need to know the intentions of the others to cover efficiently, we use two guidance methods, artificial potential fields and heuristic guidance, to integrate the others' intentions into each robot's observations. With this framework, results show that our agents successfully learn to determine their own subareas while achieving full coverage, balanced subareas, and low overlap rates. We then apply spanning tree cover within those subareas to construct the actual route for each robot and complete the given coverage tasks. We also compare our performance with the state-of-the-art decentralized method, showing up to 10 percent lower overlap rates while maintaining high efficiency in similar environments.
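As a rough illustration of how an artificial potential field could expose other robots' presence to an agent, the sketch below builds a grid-shaped repulsive field that could be stacked onto a robot's observation. It is not the paper's implementation: the function name `repulsive_field`, the `radius` parameter, and the linear falloff are all assumptions chosen for brevity, and the abstract does not specify the actual field formulation.

```python
import math
from typing import List, Tuple

Cell = Tuple[int, int]  # (row, col) grid coordinates


def repulsive_field(rows: int, cols: int, others: List[Cell],
                    radius: float = 3.0) -> List[List[float]]:
    """Hypothetical guidance channel: sum of repulsive potentials from other robots.

    Assumption: each other robot contributes a potential that is 1.0 at its own
    cell and falls off linearly to 0.0 at distance `radius` (a simplification of
    the classic inverse-distance repulsive potential).
    """
    field = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            for o_row, o_col in others:
                d = math.hypot(r - o_row, c - o_col)  # Euclidean distance in cells
                if d < radius:
                    field[r][c] += 1.0 - d / radius
    return field


if __name__ == "__main__":
    # One other robot in the top-left corner of a 5x5 grid.
    for row in repulsive_field(5, 5, [(0, 0)], radius=2.0):
        print(" ".join(f"{v:.2f}" for v in row))
```

High values mark cells close to other robots, so an agent that receives this map as an extra observation channel has a signal for steering its subarea away from regions others are likely to claim.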
