DebUnc: Mitigating Hallucinations in Large Language Model Agent Communication with Uncertainty Estimations

Paper and Code

Jul 08, 2024

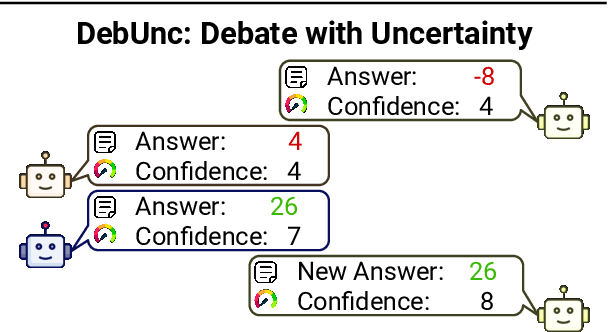

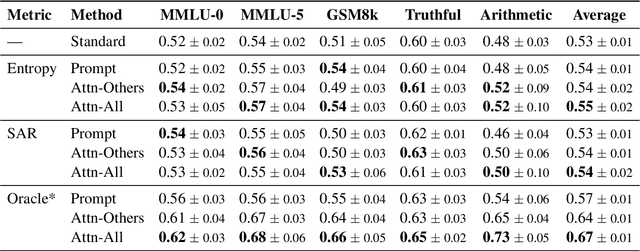

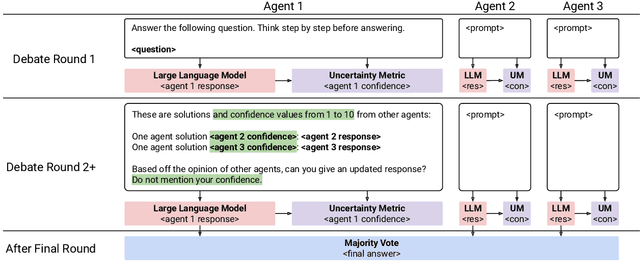

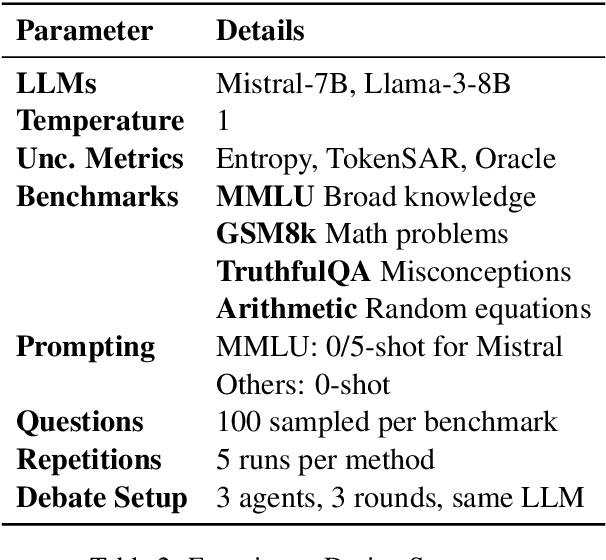

Multi-agent debates, in which multiple LLMs discuss solutions to a problem over several rounds, have been introduced to enhance Large Language Model (LLM) capabilities. However, LLMs often produce incorrect responses that appear deceptively confident, which can mislead other agents. This is partly because agents do not express their confidence levels during standard debates. To address this, we introduce DebUnc, a multi-agent debate framework that uses uncertainty metrics to assess agent confidence levels. We adapted the LLM attention mechanism to adjust token weights based on confidence levels, and we also explored using textual prompts to convey confidence. Our evaluations across various benchmarks show that attention-based methods are particularly effective, and suggest that performance will continue to increase as uncertainty metrics evolve. The code is available at https://github.com/lukeyoffe/debunc
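As a rough illustration of the attention-based idea, the sketch below rescales one row of post-softmax attention weights so that tokens written by less certain agents receive less weight, then renormalizes the row. This is a minimal sketch under stated assumptions, not the implementation from the DebUnc repository: the function name `reweight_attention`, the inverse-uncertainty scaling rule, and the convention that prompt tokens (owner `None`) are left unscaled are all hypothetical choices made for the example.

```python
def reweight_attention(weights, token_agent, agent_uncertainty):
    """Rescale one row of attention weights by agent confidence.

    weights: post-softmax attention weights for one query token (sum to 1).
    token_agent: for each key token, the id of the agent that wrote it,
        or None for tokens that belong to no agent (e.g. the prompt).
    agent_uncertainty: maps agent id -> positive uncertainty score
        (higher means less confident). The scale is an assumption.
    """
    scaled = []
    for weight, agent in zip(weights, token_agent):
        if agent is None:
            # Assumption: tokens outside any agent response keep their weight.
            scaled.append(weight)
            continue
        uncertainty = agent_uncertainty[agent]
        if uncertainty <= 0:
            raise ValueError("uncertainty scores must be positive")
        # Assumption: weight is divided by the owning agent's uncertainty.
        scaled.append(weight / uncertainty)

    # Renormalize so the row is again a probability distribution.
    total = sum(scaled)
    return [value / total for value in scaled]


# Hypothetical example: agent "b" is twice as uncertain as agent "a".
row = reweight_attention(
    weights=[0.25, 0.25, 0.25, 0.25],
    token_agent=[None, "a", "b", "b"],
    agent_uncertainty={"a": 1.0, "b": 2.0},
)
```

In this example the tokens from agent "b" end up with half the weight of the token from agent "a", while the row still sums to 1. In a real model the same rescaling would have to be applied inside each attention head, which this sketch does not attempt.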
