Dataset-Level Attribute Leakage in Collaborative Learning

Jun 12, 2020

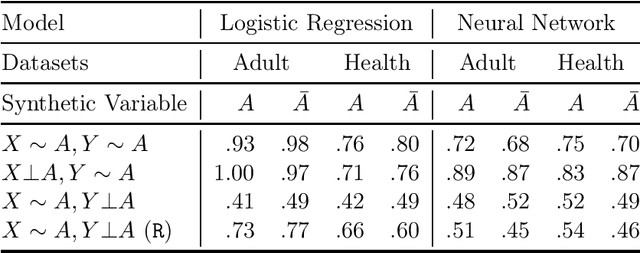

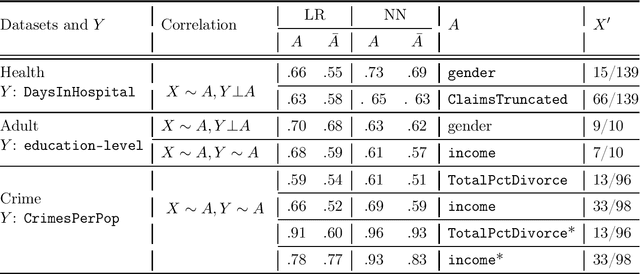

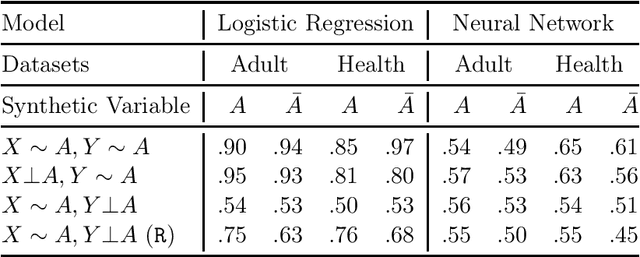

Multi-party machine learning allows several parties to build a joint model to get insights that may not be learnable using only their local data. We consider settings where each party obtains black-box access to the model computed by their mutually agreed-upon algorithm on their joined data. We show that such multi-party computation can cause information leakage between the parties. In particular, a "curious" party can infer the distribution of sensitive attributes in other parties' data with high accuracy. In order to understand and measure the source of leakage, we consider several models of correlation between a sensitive attribute and the rest of the data. Using multiple datasets and machine learning models, we show that leakage occurs even if the sensitive attribute is not included in the training data and has a low correlation with other attributes and the target variable.
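
The inference described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation, only a toy instance of the general idea under stated assumptions: the data generator `sample_party`, the logistic-regression joint model, the shadow-model procedure, and the nearest-centroid decision rule are all hypothetical choices made for illustration. The attacker is assumed to be able to simulate victim datasets with a chosen fraction of the sensitive attribute, for example from auxiliary data. The sensitive bit `a` is never part of the training features, and the attacker only reads black-box outputs of the joint model on a fixed query set.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sample_party(n, frac, rng):
    """Hypothetical generator: a fraction `frac` of records carry the sensitive bit a.
    Only (x, y) are returned; a itself is never released or trained on."""
    a = (rng.random(n) < frac).astype(float)
    x = rng.normal(size=(n, 2)) + 0.3 * a[:, None]        # a shifts the features slightly
    y = (rng.random(n) < sigmoid(x[:, 0] - x[:, 1] + a)).astype(float)
    return x, y

def train(x, y, steps=200, lr=0.5):
    """Stand-in for the agreed-upon algorithm: logistic regression by gradient descent."""
    xb = np.hstack([x, np.ones((len(x), 1))])              # append a bias column
    w = np.zeros(xb.shape[1])
    for _ in range(steps):
        w -= lr * xb.T @ (sigmoid(xb @ w) - y) / len(y)
    return w

def black_box(w, queries):
    """Black-box access: the attacker sees only predicted probabilities."""
    xb = np.hstack([queries, np.ones((len(queries), 1))])
    return sigmoid(xb @ w)

def joint_outputs(own, victim_frac, queries, rng, n_victim=1000):
    """Train on attacker data pooled with a (simulated or real) victim dataset,
    then return the joint model's outputs on the attacker's query set."""
    xv, yv = sample_party(n_victim, victim_frac, rng)
    x = np.vstack([own[0], xv])
    y = np.concatenate([own[1], yv])
    return black_box(train(x, y), queries)

def run_attack(seed=0, n_shadow=15, n_trials=20, fracs=(0.2, 0.8)):
    """Guess which of two candidate sensitive-attribute fractions the victim data has.
    Returns the fraction of trials in which the guess was correct."""
    rng = np.random.default_rng(seed)
    own = sample_party(1000, 0.5, rng)                     # attacker's own data
    queries = rng.normal(size=(50, 2))                     # fixed query set
    # Shadow models: average output vector for each candidate fraction.
    centroids = [
        np.mean([joint_outputs(own, f, queries, rng) for _ in range(n_shadow)], axis=0)
        for f in fracs
    ]
    correct = 0
    for t in range(n_trials):
        true_idx = t % len(fracs)                          # alternate the true fraction
        out = joint_outputs(own, fracs[true_idx], queries, rng)
        guess = int(np.argmin([np.linalg.norm(out - c) for c in centroids]))
        correct += guess == true_idx
    return correct / n_trials

if __name__ == "__main__":
    print("attack accuracy on toy data:", run_attack())
```

In this toy setting the attacker distinguishes only two candidate fractions; how well such an attack works on real datasets and models is what the paper evaluates, and the numbers this sketch prints should not be read as reproducing those results.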
