Data Generation Using Pass-phrase-dependent Deep Auto-encoders for Text-Dependent Speaker Verification

Paper and Code

Feb 03, 2021

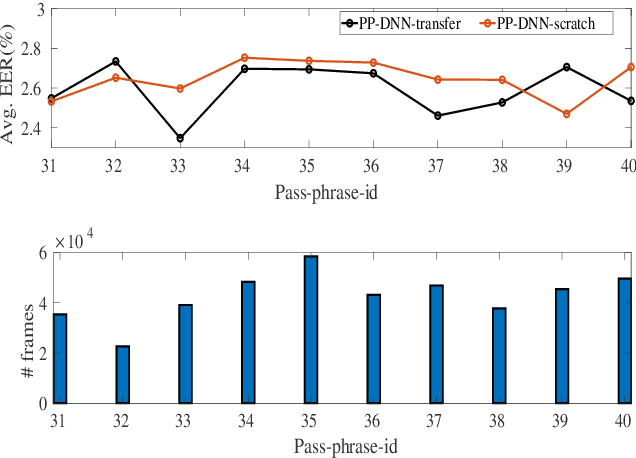

In this paper, we propose a novel method that trains pass-phrase-specific deep neural network (PP-DNN) auto-encoders to create augmented data for text-dependent speaker verification (TD-SV). Each PP-DNN auto-encoder is trained on the utterances of one particular pass-phrase available in the target enrollment set, using one of two methods: (i) transfer learning or (ii) training from scratch. Next, the feature vectors of a given utterance are fed to the PP-DNNs, and the frame-level output of each PP-DNN is treated as one new set of generated data. The generated data from each PP-DNN is then used to build a TD-SV system, in contrast to the conventional method, which considers only the evaluation data available. The proposed approach can be viewed as transforming the data into a pass-phrase-specific space through a non-linear transformation learned by each PP-DNN. The method therefore builds several TD-SV systems for the evaluation, one per PP-DNN, where each PP-DNN is trained separately for one pass-phrase. Finally, the scores of the different TD-SV systems are fused for decision making. Experiments are conducted on the RedDots challenge 2016 database for TD-SV using short utterances. Results show that the proposed method improves performance for both conventional cepstral features and deep bottleneck features, under both the Gaussian mixture model-universal background model (GMM-UBM) and i-vector frameworks.
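The pipeline described in the abstract (one auto-encoder per pass-phrase, frame-level transformation, one system per auto-encoder, score fusion) can be illustrated with a minimal NumPy sketch. Everything below is an assumption made for illustration and is not the authors' implementation: the one-hidden-layer tanh auto-encoder, the synthetic features, the hypothetical `toy_score` function (a cosine similarity standing in for a GMM-UBM or i-vector back-end), and the use of a plain average as the fusion rule, which the abstract does not specify.

```python
import numpy as np


def train_autoencoder(frames, hidden=8, epochs=300, lr=0.05, seed=0):
    """Fit a one-hidden-layer tanh auto-encoder (illustrative stand-in for a PP-DNN)."""
    rng = np.random.default_rng(seed)
    n, dim = frames.shape
    W1 = rng.normal(0.0, 0.1, (dim, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.1, (hidden, dim))
    b2 = np.zeros(dim)
    for _ in range(epochs):
        h = np.tanh(frames @ W1 + b1)          # hidden activations
        err = (h @ W2 + b2) - frames           # reconstruction error per frame
        dh = (err @ W2.T) * (1.0 - h ** 2)     # back-propagate through tanh
        # Gradient-descent step on the mean squared reconstruction error.
        W2 -= lr * (h.T @ err) / n
        b2 -= lr * err.mean(axis=0)
        W1 -= lr * (frames.T @ dh) / n
        b1 -= lr * dh.mean(axis=0)
    return W1, b1, W2, b2


def transform(model, frames):
    """Map frames into the pass-phrase-specific space of one auto-encoder."""
    W1, b1, W2, b2 = model
    return np.tanh(frames @ W1 + b1) @ W2 + b2


def toy_score(enroll_frames, test_frames):
    """Hypothetical back-end: cosine similarity of mean feature vectors."""
    a = enroll_frames.mean(axis=0)
    b = test_frames.mean(axis=0)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))


def fused_score(models, enroll_frames, test_frames):
    """Score once per auto-encoder, then fuse by averaging (assumed fusion rule)."""
    scores = [
        toy_score(transform(m, enroll_frames), transform(m, test_frames))
        for m in models.values()
    ]
    return float(np.mean(scores))


# Synthetic 5-dimensional "features" for three hypothetical pass-phrases.
rng = np.random.default_rng(42)
data = {f"pp{k}": rng.normal(loc=float(k), scale=1.0, size=(50, 5)) for k in (1, 2, 3)}

# One auto-encoder per pass-phrase (training from scratch in this sketch).
models = {name: train_autoencoder(frames) for name, frames in data.items()}

enroll_utt = rng.normal(loc=1.0, scale=1.0, size=(40, 5))
test_utt = rng.normal(loc=1.0, scale=1.0, size=(30, 5))
fused = fused_score(models, enroll_utt, test_utt)
print(f"fused score over {len(models)} systems: {fused:.3f}")
```

In this sketch the number of scored systems equals the number of trained auto-encoders, mirroring the structure described above; a real system would replace `toy_score` with an actual speaker-verification back-end and could use a learned fusion instead of a simple mean.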
