Data-Driven and SE-assisted AI Model Signal-Awareness Enhancement and Introspection

Paper and Code

Nov 10, 2021

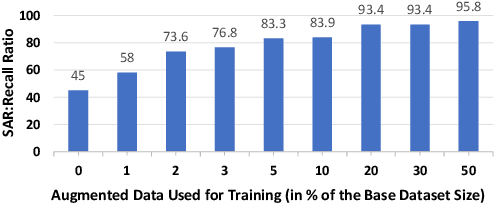

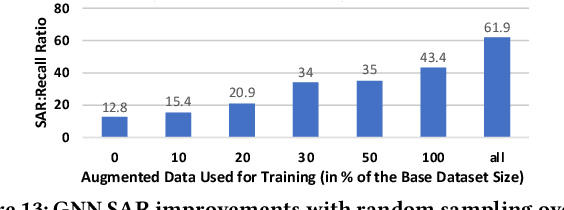

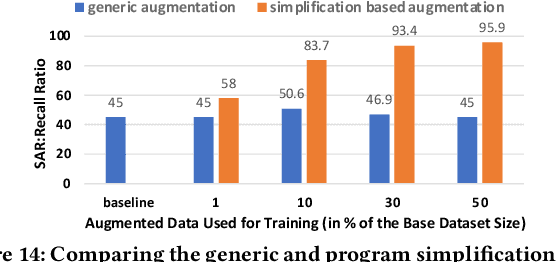

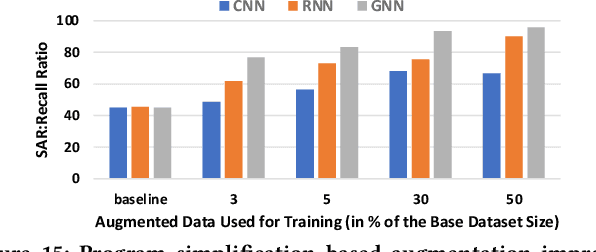

AI modeling for source code understanding tasks has been making significant progress, and is being adopted in production development pipelines. However, reliability concerns, especially whether the models are actually learning task-related aspects of source code, are being raised. While recent model-probing approaches have observed a lack of signal awareness in many AI-for-code models, i.e. models not capturing task-relevant signals, they do not offer solutions to rectify this problem. In this paper, we explore data-driven approaches to enhance models' signal-awareness: 1) we combine the SE concept of code complexity with the AI technique of curriculum learning; 2) we incorporate SE assistance into AI models by customizing Delta Debugging to generate simplified signal-preserving programs, augmenting them to the training dataset. With our techniques, we achieve up to 4.8x improvement in model signal awareness. Using the notion of code complexity, we further present a novel model learning introspection approach from the perspective of the dataset.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge