Data Center Audio/Video Intelligence on Device (DAVID) -- An Edge-AI Platform for Smart-Toys

Nov 18, 2023

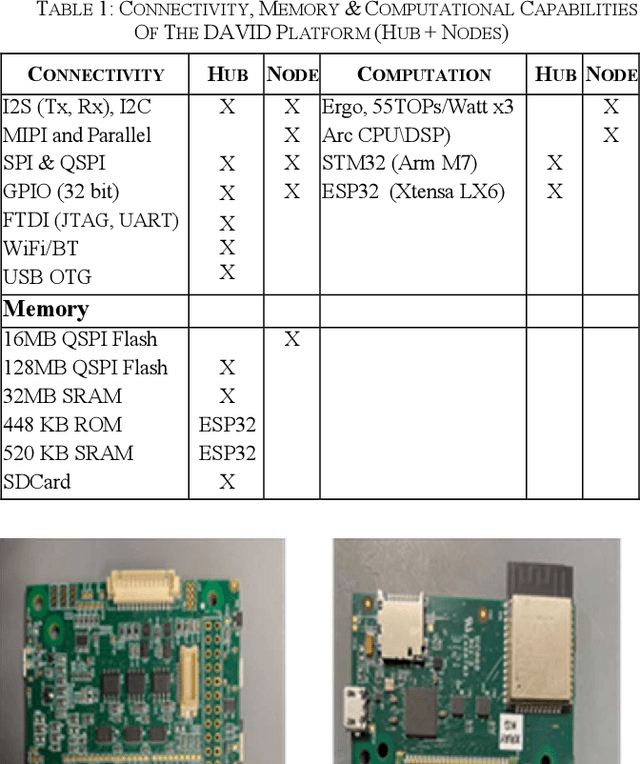

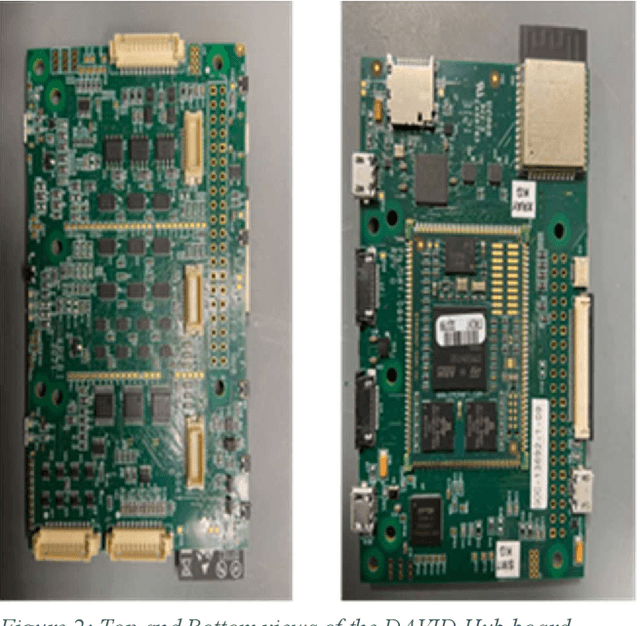

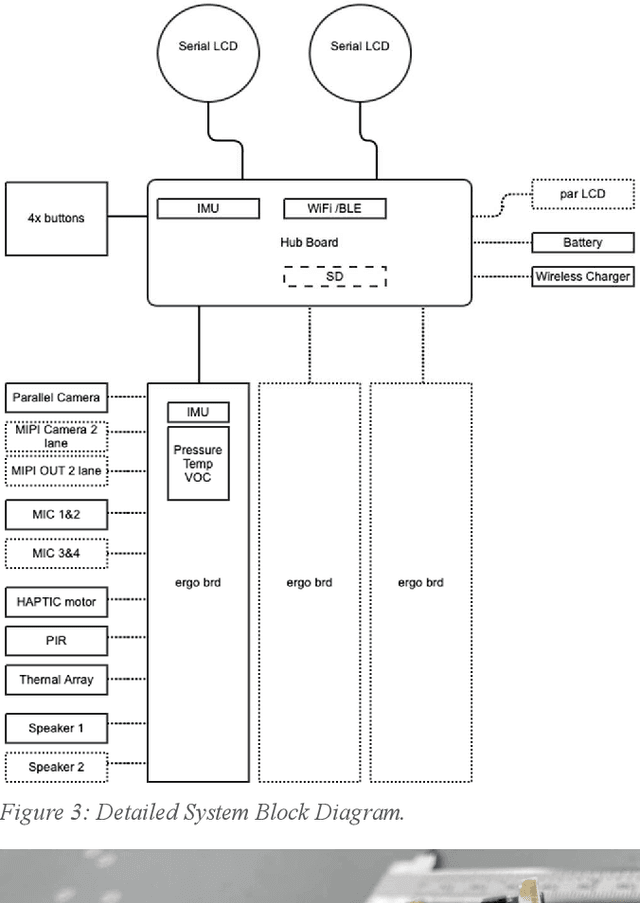

An overview is given of the DAVID Smart-Toy platform, one of the first Edge-AI platform designs to incorporate advanced low-power data processing by neural inference models co-located with the relevant image or audio sensors. The platform also supports on-device text-to-speech generation. Two alternative embodiments are presented: a smart teddy bear and a roving dog-like robot. The platform offers a speech-driven user interface and can observe and interpret user actions and facial expressions via its computer vision sensor node. A particular benefit of this design is that no personally identifiable information passes beyond the neural inference nodes, thus providing inbuilt compliance with data protection regulations.

* The 12th IEEE Conference on Speech Technology and Human Dialogue (SpeD 2023), URL: https://sped.pub.ro/
