DASTSiam: Spatio-Temporal Fusion and Discriminative Augmentation for Improved Siamese Tracking

Jan 22, 2023

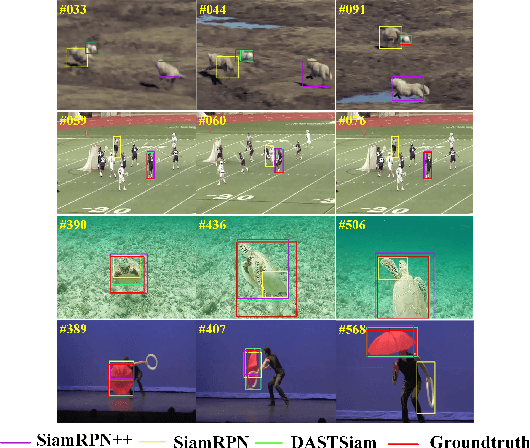

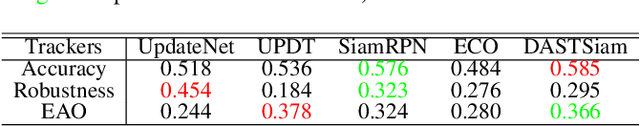

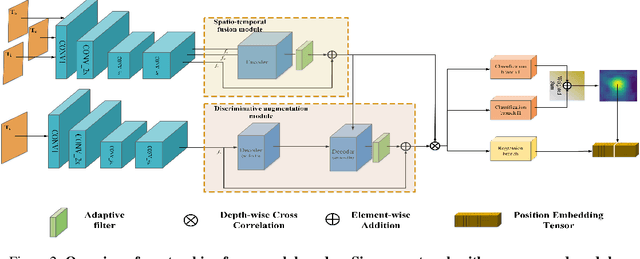

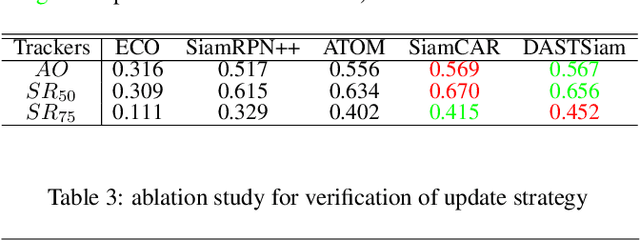

Siamese trackers have greatly improved tracking based on deep neural networks. However, target appearance often changes during tracking, which reduces robustness under challenges such as aspect ratio change, occlusion, and scale variation. In addition, cluttered backgrounds can produce multiple high-response points in the response map, leading to incorrect target localization. In this paper, we introduce DASTSiam, which adds two transformer-based modules to Siamese tracking: the Spatio-Temporal (ST) fusion module and the Discriminative Augmentation (DA) module. The ST module uses cross-attention-based accumulation of historical cues to improve robustness against object appearance changes, while the DA module associates semantic information between the template and the search region to improve target discrimination. Moreover, modifying the label assignment of anchors improves the reliability of object localization. Our modules can be used with all Siamese trackers, and comparative and ablation experiments show improved performance on several public datasets.
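To make the idea of cross-attention-based accumulation of historical cues concrete, here is a minimal NumPy sketch of generic single-head scaled dot-product cross-attention. It is not the paper's ST module: the function name `cross_attention`, the feature shapes, the absence of learned projections, and the residual connection are all assumptions chosen for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query, memory):
    """Generic single-head cross-attention without learned projections.

    query:  (Nq, d) features of the current frame (hypothetical shape).
    memory: (Nm, d) features gathered from earlier frames (hypothetical shape).
    Returns the fused features (Nq, d) and the attention weights (Nq, Nm).
    """
    d = query.shape[-1]
    scores = query @ memory.T / np.sqrt(d)   # similarity of each query token to each memory token
    weights = softmax(scores, axis=-1)       # each row sums to 1 over the memory tokens
    attended = weights @ memory              # weighted sum of historical features
    return query + attended, weights         # residual connection (an assumption of this sketch)

rng = np.random.default_rng(0)
current = rng.standard_normal((16, 32))      # 16 tokens from the current template
history = rng.standard_normal((48, 32))      # 48 tokens accumulated from past frames
fused, weights = cross_attention(current, history)
```

In this sketch the current-frame features act as queries and the historical features act as keys and values, so each current token is refined by the past tokens most similar to it. A real transformer module would typically add learned query, key, and value projections, multiple heads, and normalization.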
