DAGAM: A Domain Adversarial Graph Attention Model for Subject Independent EEG-Based Emotion Recognition

Feb 27, 2022

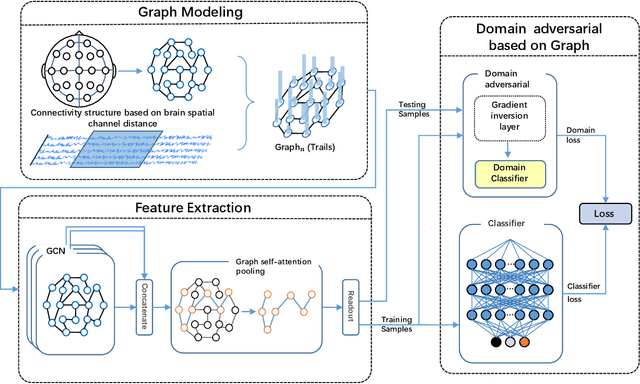

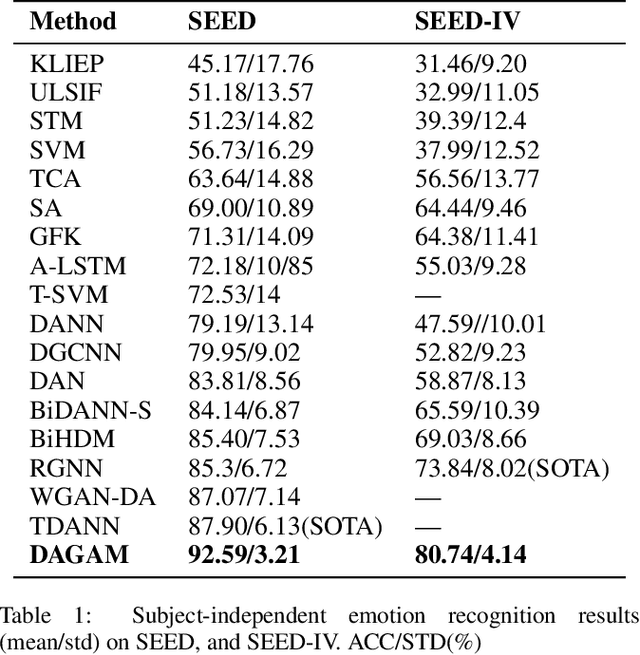

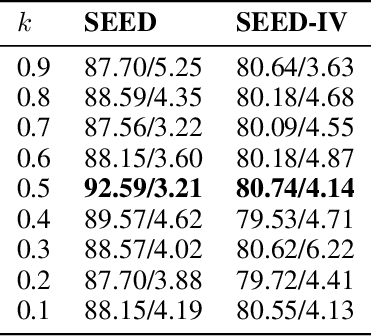

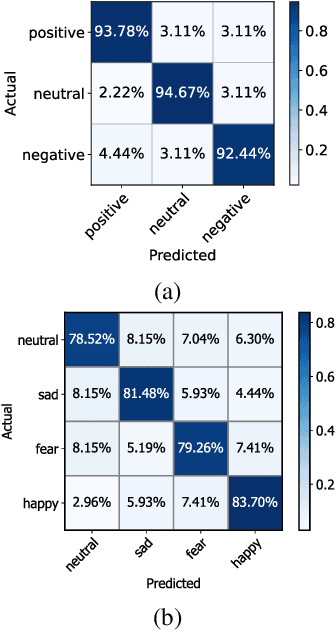

A major challenge in EEG-based emotion recognition is cross-subject EEG variation, which leads to poor performance and generalizability. This paper proposes a novel EEG-based emotion recognition model, the domain adversarial graph attention model (DAGAM). The basic idea is to build a graph that models multichannel EEG signals using their biological topology, since graph theory can topologically describe and analyze the relationships and mutual dependencies between EEG channels. Then, unlike other graph convolutional networks, DAGAM applies self-attention pooling to extract salient EEG features from the graph, which effectively improves performance. Finally, after graph pooling, a graph-based domain adversarial component is employed to identify and handle EEG variation across subjects, efficiently achieving good generalizability. We conduct extensive evaluations on two benchmark datasets (SEED and SEED IV) and obtain state-of-the-art results in subject-independent emotion recognition. Our model raises SEED accuracy to 92.59% (a 4.69% improvement) with the lowest standard deviation of 3.21% (a 2.92% reduction), and SEED IV accuracy to 80.74% (a 6.90% improvement) with the lowest standard deviation of 4.14% (a 3.88% reduction).
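
To make the graph-plus-attention-pooling idea concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the abstract does not specify how the graph is built or how pooling scores are computed, so the k-nearest-neighbour adjacency, the score `tanh(A_norm X p)`, the electrode coordinates, the feature values, and all function names below are illustrative assumptions. The domain adversarial stage is omitted.

```python
import math

def knn_adjacency(coords, k):
    """Symmetric k-nearest-neighbour adjacency with self-loops (assumed graph rule)."""
    n = len(coords)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        dists = sorted((math.dist(coords[i], coords[j]), j) for j in range(n) if j != i)
        for _, j in dists[:k]:
            adj[i][j] = adj[j][i] = 1.0
        adj[i][i] = 1.0
    return adj

def normalize(adj):
    """Symmetric normalisation D^{-1/2} A D^{-1/2}."""
    n = len(adj)
    deg = [sum(row) for row in adj]
    return [[adj[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def attention_pool(adj, feats, proj, ratio):
    """Score each channel, keep the top ceil(ratio * n), and gate kept features by score."""
    n = len(feats)
    a_norm = normalize(adj)
    # Project each channel's features onto the (normally learned) vector `proj`.
    xp = [sum(f * w for f, w in zip(feats[i], proj)) for i in range(n)]
    # Attention score uses both features and topology: tanh(A_norm X p).
    scores = [math.tanh(sum(a_norm[i][j] * xp[j] for j in range(n))) for i in range(n)]
    top = sorted(range(n), key=lambda i: scores[i], reverse=True)[:math.ceil(ratio * n)]
    keep = sorted(top)
    pooled_feats = [[x * scores[i] for x in feats[i]] for i in keep]
    pooled_adj = [[adj[i][j] for j in keep] for i in keep]
    return keep, pooled_feats, pooled_adj

# Hypothetical 2-D electrode positions and per-channel features (made-up values).
coords = [(0, 1), (1, 0.5), (1, -0.5), (0, -1), (-1, -0.5), (-1, 0.5)]
feats = [[0.2, 1.0], [0.9, 0.1], [0.4, 0.4], [0.7, 0.3], [0.1, 0.8], [0.5, 0.6]]
proj = [0.5, -0.3]  # stands in for a learned projection vector

adj = knn_adjacency(coords, k=2)
keep, pooled_feats, pooled_adj = attention_pool(adj, feats, proj, ratio=0.5)
print("kept channels:", keep)
```

In a trained model the projection vector would be learned jointly with the classifier, so the retained channels would reflect which electrodes carry the most emotion-relevant signal rather than the arbitrary values used here.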