CycleTrans: Learning Neutral yet Discriminative Features for Visible-Infrared Person Re-Identification

Paper and Code

Aug 21, 2022

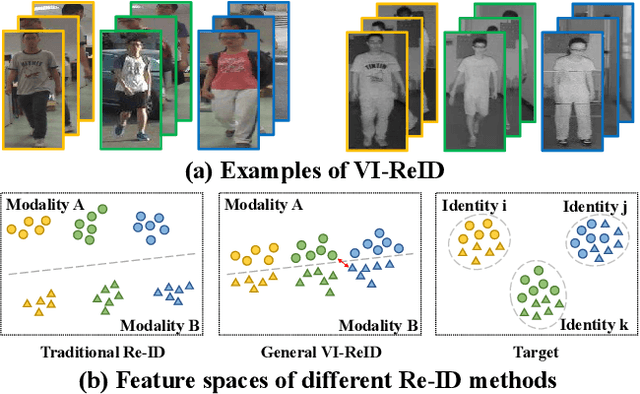

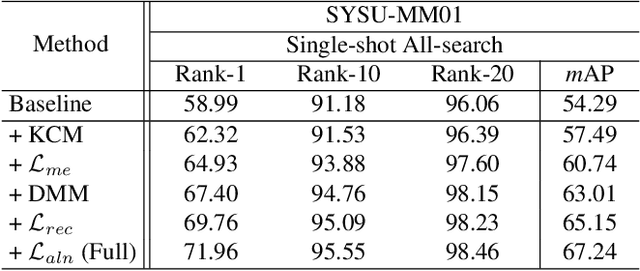

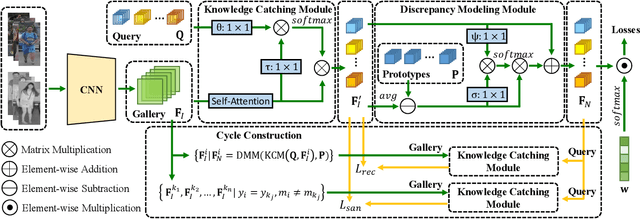

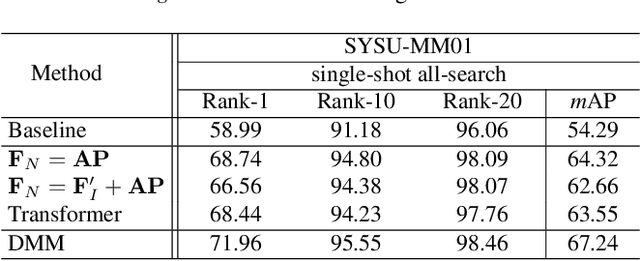

Visible-infrared person re-identification (VI-ReID) is the task of matching the same individuals across the visible and infrared modalities. Its main challenge lies in the modality gap caused by cameras operating on different spectra. Existing VI-ReID methods mainly focus on learning general features across modalities, often at the expense of feature discriminability. To address this issue, we present CycleTrans, a novel cycle-construction-based network for learning neutral yet discriminative features. Specifically, CycleTrans uses a lightweight Knowledge Capturing Module (KCM) to capture rich semantics from the modality-relevant feature maps according to pseudo queries. A Discrepancy Modeling Module (DMM) then transforms these features into neutral ones according to modality-irrelevant prototypes. To ensure feature discriminability, two further KCMs are deployed for feature cycle construction. With cycle construction, our method learns effective neutral features for visible and infrared images while preserving their salient semantics. Extensive experiments on the SYSU-MM01 and RegDB datasets validate the merits of CycleTrans against a range of state-of-the-art methods, with rank-1 improvements of +4.57% on SYSU-MM01 and +2.2% on RegDB.
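The data flow described in the abstract can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not the authors' implementation: both the KCM and the DMM are stood in for by plain scaled dot-product cross-attention, the pseudo queries and prototypes are random rather than learned, and the names `cross_attention`, `pseudo_queries`, `prototypes`, and `cycle_loss` are hypothetical. The cycle term is modeled here as a mean squared error between the captured features and their reconstruction from the neutral features, which is one plausible reading of "cycle construction".

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, memory):
    """Scaled dot-product attention; `memory` serves as both keys and values."""
    dim = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, m)) / math.sqrt(dim) for m in memory]
        weights = softmax(scores)
        outputs.append(
            [sum(w * m[j] for w, m in zip(weights, memory)) for j in range(dim)]
        )
    return outputs


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def mse(a, b):
    """Mean squared error between two equally shaped matrices."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


rng = random.Random(0)
dim, num_tokens, num_queries, num_prototypes = 8, 12, 4, 6  # arbitrary toy sizes

feature_map = random_matrix(num_tokens, dim, rng)      # flattened backbone features of one image
pseudo_queries = random_matrix(num_queries, dim, rng)  # learned in practice; random here
prototypes = random_matrix(num_prototypes, dim, rng)   # assumed shared by both modalities

# KCM stand-in: pseudo queries gather semantics from the modality-relevant feature map.
captured = cross_attention(pseudo_queries, feature_map)

# DMM stand-in: re-express captured features as mixtures of modality-irrelevant prototypes.
neutral = cross_attention(captured, prototypes)

# Cycle stand-in: a further KCM-like step reconstructs features from the neutral ones,
# and the reconstruction is compared with what the first step captured.
reconstructed = cross_attention(pseudo_queries, neutral)
cycle_loss = mse(reconstructed, captured)
```

In this sketch each neutral feature is a convex combination of the prototypes, so it depends on the input image only through the attention weights. That is one simple way to see why a reconstruction constraint is needed to keep identity-specific semantics from being washed out.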